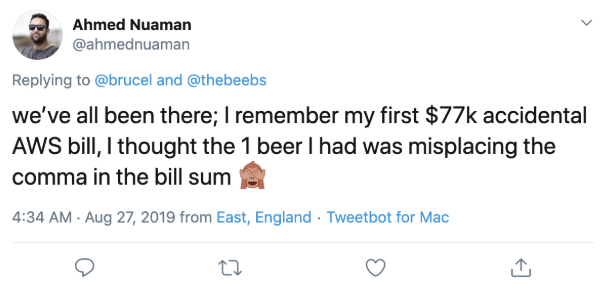

It is no secret that it is easy to accidentally spend a boatload of money on AWS. One Twitter user told the story of his $77,000 mistake with a monkey emoji (because what’s funnier than accidentally spending the price of an entry-level Tesla?).

The scary part is that many of us have been there. If you are not looking at your cloud bill every day, costs can run up quickly, and the bill shock only arrives a month later. That is why CloudZero ingests cost data and alerts on cost trends within minutes. The goal is to turn that $77k mistake into a $7 one, so you’re not stuck explaining how such a thing could have happened.

The challenge, of course, is that if you are part of a busy engineering team focused on getting products and features out the door, you don’t have time to stare at your bill all day. And if your company spends tens or hundreds of thousands of dollars (not to mention millions), keeping watch takes even more effort.

So we set out to design a solution that surfaces key cost insights without requiring users to build dashboards, set custom alerts, or manually tune rules. To do that, we turned to machine learning algorithms and good old-fashioned data science. The result is CloudZero, a cost anomaly detection system for AWS that can catch mistakes made during development, before your code reaches production, and well before the bill reaches finance for review.

Statistical Modeling to Solve a Time-Series Problem

When we were designing our AWS cost monitoring platform, we had a few key requirements:

- We wanted to ensure that we caught small changes to cost early, to prevent end-of-month bill shock.

- We had to ensure that we were not creating noise for engineering teams.

- We needed something that could also forecast costs.

- We wanted to account for recurring events (seasonalities) that happen on a daily, weekly, or monthly basis.

To address these challenges and more, we took a statistical modeling approach that works on normalized time-series data at very fine time granularities, and built our machine learning algorithms and code on top of Facebook Prophet and Stan, a powerful probabilistic programming language.

Consider an example: if you run a nightly S3 backup, or a daily compute-intensive job that kicks off at 7 am, our algorithm picks up on these patterns as we build and update our models, and those models then predict what will happen next. Anything that does not align with these predictions is a potential anomaly. Because every company’s version of “anomalous” is different, we’ve also refined our algorithms for companies of various sizes, usage patterns, and resource needs.
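The core idea of learning recurring patterns, forecasting from them, and flagging what deviates can be illustrated without Prophet. The Python below is a deliberately simplified stand-in, not CloudZero’s model and not Prophet’s API: it learns a per-hour-of-day baseline from past daily cycles, uses the slot mean as a naive forecast, and flags hours that fall more than `k` standard deviations from their slot. The function names, the 24-hour period, the `k=3` threshold, and the synthetic cost data are all hypothetical. Prophet itself fits a trend plus Fourier-series seasonal terms with uncertainty intervals, which copes with multiple seasonalities and trend changes far better than a slot average.

```python
from statistics import mean, stdev

PERIOD = 24  # hypothetical: one daily cycle of hourly buckets; 168 would capture weekly seasonality

def seasonal_buckets(history, period=PERIOD):
    """Group hourly costs by their position in the repeating cycle (hour of day here)."""
    buckets = [[] for _ in range(period)]
    for i, cost in enumerate(history):
        buckets[i % period].append(cost)
    return buckets

def forecast(buckets, start_index, horizon):
    """Naive forecast: each future hour is the mean cost of the same slot in past cycles."""
    period = len(buckets)
    return [mean(buckets[(start_index + h) % period]) for h in range(horizon)]

def flag_anomalies(buckets, start_index, observed, k=3.0, min_sd=0.01):
    """Return offsets of observed hours lying outside mean +/- k * stdev for their slot."""
    period = len(buckets)
    flagged = []
    for h, cost in enumerate(observed):
        slot = buckets[(start_index + h) % period]
        mu = mean(slot)
        # Floor the spread so a perfectly flat slot does not flag every tiny wobble.
        sd = max(stdev(slot), min_sd) if len(slot) > 1 else min_sd
        if abs(cost - mu) > k * sd:
            flagged.append(h)
    return flagged

def synthetic_day(day):
    """Hypothetical hourly costs: $1/h baseline, nightly backup at 02:00, batch job at 07:00."""
    noise = 0.1 * ((day % 3) - 1)  # small deterministic day-to-day variation
    costs = [1.0 + noise] * PERIOD
    costs[2] = 5.0 + noise  # nightly S3 backup
    costs[7] = 8.0 + noise  # 7 am compute-intensive job
    return costs

# Two weeks of history, then one new day to score.
history = [c for day in range(14) for c in synthetic_day(day)]
buckets = seasonal_buckets(history)

today = [1.0] * PERIOD
today[2], today[7] = 5.0, 8.0  # the expected recurring spikes
today[13] = 20.0               # an unexpected runaway resource

start = len(history)
print([round(x, 2) for x in forecast(buckets, start, 3)])  # → [0.99, 0.99, 4.99]
print(flag_anomalies(buckets, start, today))               # → [13]
```

The backup and the 7 am job are not flagged because they recur in every cycle of the history; only the 1 pm spike, which has no precedent in its slot, stands out.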

Layering In Human Knowledge

Statistically significant results are not always business-significant. Not every anomalous event needs to be brought to a manager’s attention. In other words, we only care about anomalies that cost more than the human time it takes to investigate them. To strike that balance, we applied our knowledge of AWS services to tune thresholds for when, and how often, customers are notified of anomalies. With a combination of rules and machine learning algorithms, we have trained our system to accurately find the metaphorical “needle in the haystack,” and our engine continues to learn with every customer we onboard.
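The rule of alerting only when an anomaly costs more than the time needed to investigate it can be written down directly. This is a minimal sketch, assuming a hypothetical `Anomaly` record, a 30-minute investigation, and a $100/hour engineering rate; none of these figures come from CloudZero, and a real system would tune such thresholds per customer and per service.

```python
from dataclasses import dataclass

@dataclass
class Anomaly:
    """Hypothetical record for a statistically anomalous window of spend."""
    service: str
    expected_cost: float  # dollars the baseline predicted for the window
    observed_cost: float  # dollars actually spent in the window

def worth_alerting(a, minutes_to_investigate=30, engineer_rate_per_hour=100.0):
    """Alert only if the excess spend exceeds the cost of the human time to investigate it."""
    excess = a.observed_cost - a.expected_cost
    investigation_cost = engineer_rate_per_hour * minutes_to_investigate / 60
    return excess > investigation_cost

anomalies = [
    Anomaly("AmazonS3", expected_cost=12.0, observed_cost=15.0),    # statistically odd, only $3 over
    Anomaly("AmazonEC2", expected_cost=40.0, observed_cost=410.0),  # $370 over
]
alerts = [a.service for a in anomalies if worth_alerting(a)]
print(alerts)  # → ['AmazonEC2']
```

Both records are anomalous in the statistical sense, but with these assumed numbers only the EC2 one clears the $50 cost of investigating it.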

Our Data Is Awesome

Last but not least, a model is only as good as its data. Practically every data scientist has been handed the hard task of building accurate prediction models from dynamic, transactional data piled high in an S3 bucket somewhere, with no time or resources to set up a proper data warehouse for analytics.
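As an illustration of the kind of preparation that transactional data needs, here is a small sketch that turns raw billing rows into a dense hourly series per service. The `(timestamp, service, cost)` row shape and the `to_hourly_series` helper are hypothetical simplifications; real exports such as the AWS Cost and Usage Report have their own, much wider schema. Filling empty hours with zero gives every service the same regular time index, so the series line up with one another and can be fed straight into a time-series model.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def to_hourly_series(line_items, start, end):
    """Bucket raw (timestamp, service, cost) rows into a dense hourly series per service.

    Hours in [start, end) with no rows are filled with 0.0.
    """
    totals = defaultdict(float)
    services = set()
    for ts, service, cost in line_items:
        hour = ts.replace(minute=0, second=0, microsecond=0)  # truncate to the hour
        totals[(service, hour)] += cost
        services.add(service)

    hours = []
    h = start
    while h < end:
        hours.append(h)
        h += timedelta(hours=1)

    return {s: [totals.get((s, hr), 0.0) for hr in hours] for s in sorted(services)}

# Hypothetical line items scattered across a few hours.
rows = [
    (datetime(2024, 1, 1, 0, 15), "AmazonEC2", 0.25),
    (datetime(2024, 1, 1, 0, 45), "AmazonEC2", 0.50),
    (datetime(2024, 1, 1, 2, 5), "AmazonS3", 1.25),
    (datetime(2024, 1, 1, 2, 30), "AmazonEC2", 0.50),
]
series = to_hourly_series(rows, datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 4))
print(series)  # → {'AmazonEC2': [0.75, 0.0, 0.5, 0.0], 'AmazonS3': [0.0, 0.0, 1.25, 0.0]}
```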

When CloudZero was founded, we designed our AWS cost monitoring platform from a very operational point of view, with an eye toward solving the problems DevOps teams face. With a founding team steeped in data engineering, we put the data model at the center of our strategy. We wanted to enable engineers to make better-informed decisions.

Thus, we built a strong foundation: a system that extracts all available metadata and processes the data at the finest time and AWS service granularities. Processing aggregated data can lose valuable information. For example, our competitors look at daily granularity and treat a dollar spent on one service as identical to a dollar spent on any other. Without this attention to detail, shorter anomalies would be hidden in the larger organizational spend, and false positives (or negatives) would plague the system.
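To see why granularity matters, consider a sketch with hypothetical numbers: three services with fixed expected hourly spend, and a one-hour $60 spike in the smallest one. The same simple rule (flag spend more than 50% above expectation) catches the spike when applied per service per hour, but misses it entirely on the org-wide daily total. The service list, dollar amounts, and 1.5x ratio are illustrative assumptions, not CloudZero’s actual thresholds.

```python
# Hypothetical expected hourly spend per service, in dollars.
EXPECTED_HOURLY = {"AmazonEC2": 100.0, "AmazonRDS": 22.0, "AWSLambda": 2.0}
RATIO = 1.5  # hypothetical rule: flag anything more than 50% above expectation

def observed_day(spike_service=None, spike_hour=None, spike_cost=0.0):
    """24 hours of per-service spend at expected levels, optionally with one short spike."""
    day = {s: [c] * 24 for s, c in EXPECTED_HOURLY.items()}
    if spike_service is not None:
        day[spike_service][spike_hour] += spike_cost
    return day

def hourly_flags(day):
    """(service, hour) pairs where spend exceeds RATIO x that service's expected hourly cost."""
    return [(s, h) for s, hours in day.items() for h, cost in enumerate(hours)
            if cost > RATIO * EXPECTED_HOURLY[s]]

def daily_flag(day):
    """The same rule applied to the org-wide daily total, as a daily rollup would see it."""
    expected_total = 24 * sum(EXPECTED_HOURLY.values())
    observed_total = sum(sum(hours) for hours in day.values())
    return observed_total > RATIO * expected_total

day = observed_day("AWSLambda", spike_hour=14, spike_cost=60.0)
print(hourly_flags(day))  # → [('AWSLambda', 14)]
print(daily_flag(day))    # → False
```

At hourly, per-service granularity the Lambda hour is 31 times its usual spend; in the daily total it is an extra $60 on top of $2,976, about 2%, well inside normal variation.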

Conclusion

Software companies want to move fast and experiment, and CloudZero helps them do so while giving them visibility when that experimentation drives up the bill. We would love for you to take our product for a spin and see which mistakes we can help you avoid, because after all, that $77k would be better spent on more engineering headcount and features for your customers.