Who’d have thought we’d see the day when cloud cost management (CCM) seemed easy?

CloudZero just released FinOps In The AI Era: A Critical Recalibration, an annual report on the state of cloud and AI costs. The report surfaced what looks like a paradox: FinOps maturity is accelerating, but organizational cloud efficiency is plummeting.

72% of organizations now have formal CCM programs. That’s nearly double what we saw in our last survey (39%). Budget assignment jumped to 87% from 73%. Even chargeback adoption, one of the trickier FinOps capabilities, rose to 64% from 45%.

And yet: Cloud Efficiency Rate (CER) dropped across every segment and every quartile. CER tracks how much of a company’s revenue goes to its cloud providers: the larger that share, the lower the CER. Our last survey found top-quartile companies sent just 8% of revenue. This time? 15%, almost double.
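To make the arithmetic concrete, here is a minimal Python sketch. It assumes CER is computed as revenue minus cloud cost, divided by revenue. That formula, the function name, and the $100M revenue figure are illustrative assumptions, not details taken from the report.

```python
def cloud_efficiency_rate(revenue: float, cloud_cost: float) -> float:
    """Assumed definition: share of revenue left after paying for cloud."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cloud_cost) / revenue


# Hypothetical company with $100M in annual revenue.
revenue = 100_000_000
last_survey = cloud_efficiency_rate(revenue, 0.08 * revenue)  # 8% of revenue to cloud
this_survey = cloud_efficiency_rate(revenue, 0.15 * revenue)  # 15% of revenue to cloud
print(f"{last_survey:.0%} -> {this_survey:.0%}")
```

Under that assumed definition, sending 15% of revenue to cloud providers instead of 8% means CER falls from 92% to 85%.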

Why, you ask?

An answer as simple as it is very, very complex: AI.

40% of surveyed companies now spend $10M or more a year on AI. 47% spend $10M or more on cloud — and the cloud had a 13-year head start. AI costs are accelerating with no ceiling in sight, and they’re eating into profitability.

If the spending is strategic, that isn’t necessarily a bad thing. AI has introduced a slew of new FinOps challenges, but the fundamentals haven’t changed: Every engineering decision is still a buying decision, and buying decisions still need unit economic justification. Below, I’ll cover some of the more telling findings from the report and explain how engineering leaders can set themselves up for long-term AI efficiency.

Why Is AI Cost Reporting So Complex?

That AI spend is accelerating isn’t surprising. Neither is the fact that companies haven’t yet figured out how to make it profitable. We’re in the very early days of a technology whose disruptive potential is probably vaster than any of us can appreciate. Right now, companies care most about building the best stuff. Losing a race to a competitor because you didn’t want to invest an extra $5M would be a strategic catastrophe.

This mindset is both strategically necessary and existentially threatening. Applied in the extreme, it convinces leaders that any spend is good spend, any growth is healthy growth, and any restraint is stinginess.

I hear it constantly from peers: “AI is our fastest-growing cost center, we have no idea what we’re getting from it, and there’s no way we’re going to slow it down.”

If AI customers don’t care how much they’re spending, AI providers won’t feel any pressure to show them. And they don’t. Thus, the full problem: It’s not just that AI spend is accelerating. AI billing complexity is accelerating too.

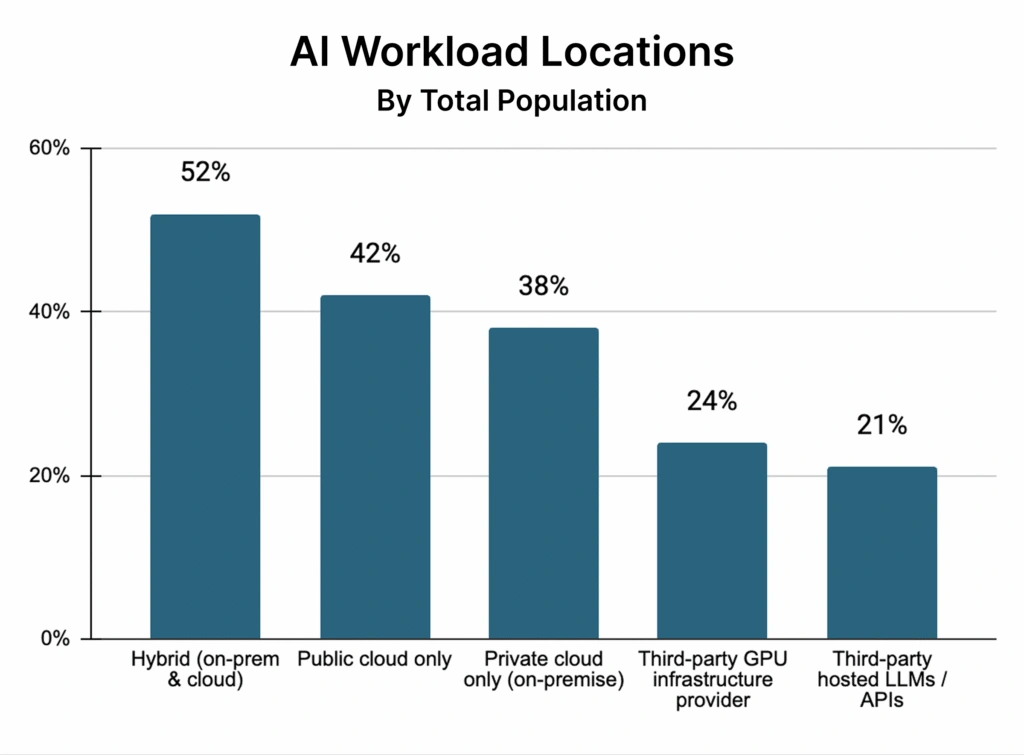

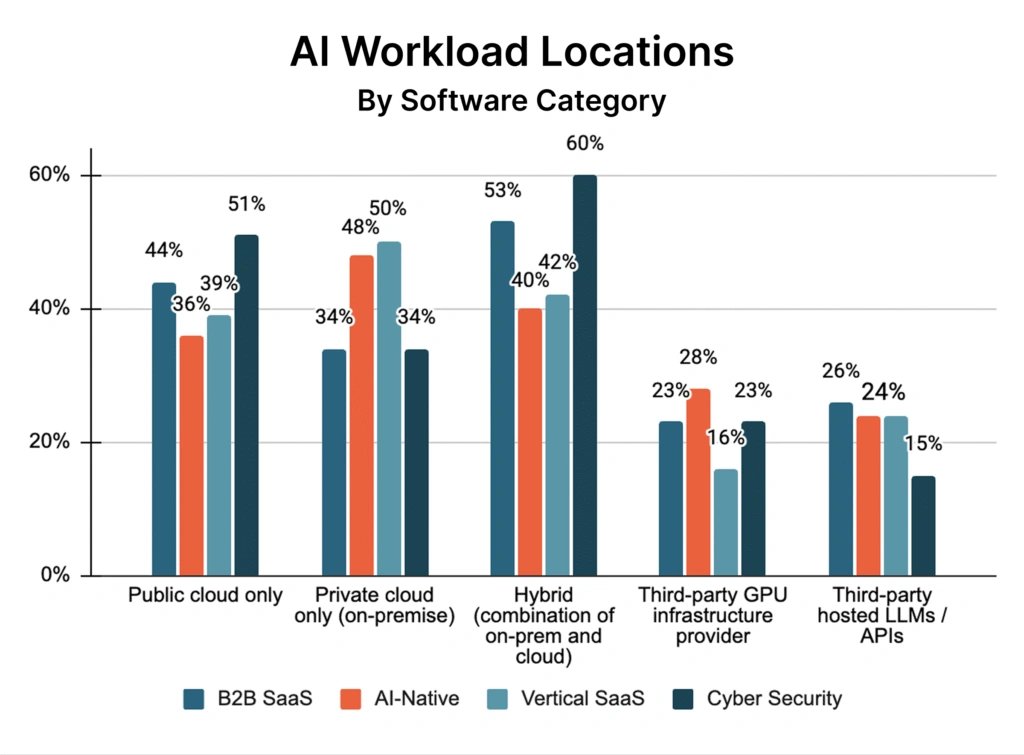

With cloud costs, the vast majority of spending goes to the big three public cloud providers: AWS, Azure, GCP. With AI, it’s not that simple. Organizations host AI workloads across many different providers: public cloud, private cloud, third-party GPU providers, third-party LLMs and APIs. Most companies use more than one.

You’ve got Amazon Bedrock for certain workloads, direct API relationships with OpenAI and Anthropic for others, GPU providers for training, and multiple LLM vendors depending on which productivity hacks individual team members have figured out.

In other words: many different bills, in many different formats, appearing in your proverbial mailbox at many different times. I’d call it “complex,” but that’s too kind. A better term for the current state of AI billing is “dumpster fire.”

And given where we are in the AI disruption cycle, no one is putting any pressure on vendors to douse those flames. Every attempt to understand AI costs becomes a bespoke, unrepeatable activity. As often as not, AI costs pile up on a single executive’s credit card (I know because I’m one of them) and the numbers increase exponentially.

For a while, this status quo will persist. We saw it with the early internet, we saw it with the cloud: Growth-at-all-costs reigns until it can’t anymore. At the risk of being a buzzkill, I have to remind fellow engineering leaders that the party always ends. The math has to math. Revenue has to justify costs. The party may not end tomorrow, but its end is coming, inexorably.

What Can Engineering Leaders Do About It?

So let’s assume that, like me, you’re one of those engineering leaders who bravely volunteered their credit card for Claude. What can you do right now to stop AI from destroying your margins and prepare for that inevitable future state where your groundbreaking innovations have to turn a profit?

The answer: set up basic cost observability that helps you answer one question, “Was what we spent on AI worth it?”

This takes three steps:

- Determine the strongest indicator of business value. Figure out which unit of spend is most indicative of the value of your innovations. Maybe you’ve got a few flagship products; target cost per product. Maybe you’ve got experimental engineering teams; target cost per team. Or cost per customer. Or cost per service. Whatever it is, pick the most important one and run with it.

- Figure out what it costs. Set up tooling — this might require third-party software — to calculate what that business value indicator actually costs. And don’t make the mistake of breaking it down in terms of infrastructure. Nobody cares what you spent on Opus 4.5 vs. Sonnet 3 — that’s a completely meaningless metric. The question isn’t what you spent. The question is what you spent it on.

- Figure out what you got in return. Did your investment in that product/team/customer/service make you money? Did it drive up user activity? Did it carve off a bigger slice of market share? Determine the return that matters most to your business and quantify it alongside your costs. If your product team flipped from Sonnet to Opus and the costs changed one way while the output changed another — was it worth it?
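The three steps above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not a definitive implementation: the `SpendRecord` type, the provider labels, the unit names, and all dollar figures are hypothetical, and a real setup would pull records from each vendor’s billing export rather than a hard-coded list.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SpendRecord:
    provider: str    # where the bill came from (labels are illustrative)
    unit: str        # step 1: the business unit you chose (product, team, customer...)
    cost_usd: float


def cost_per_unit(records):
    """Step 2: total spend per business unit, regardless of provider."""
    totals = defaultdict(float)
    for record in records:
        totals[record.unit] += record.cost_usd
    return dict(totals)


def return_on_spend(costs, returns):
    """Step 3: return per dollar spent; None where no return has been measured."""
    ratios = {}
    for unit, cost in costs.items():
        if unit in returns and cost > 0:
            ratios[unit] = returns[unit] / cost
        else:
            ratios[unit] = None
    return ratios


# Hypothetical bills from several providers, tagged by product.
records = [
    SpendRecord("bedrock", "search", 1000.0),
    SpendRecord("openai", "search", 500.0),
    SpendRecord("gpu-cloud", "assistant", 2000.0),
]
costs = cost_per_unit(records)
# Hypothetical measured return (for example, attributed revenue) per product.
ratios = return_on_spend(costs, {"search": 4500.0})
print(costs)
print(ratios)
```

Grouping by business unit rather than by provider or model is the point: the output says what the money was spent on, and a `None` ratio flags a unit whose return nobody has measured yet.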

These steps won’t necessarily curtail your spending. But they will give you at least a crude ability to answer the question, “Was it worth it?” When your investors interrogate rising SageMaker costs, you’ll be able to point to specific initiatives and cite specific returns that give you credibility as the person responsible for those costs.

Don’t police every penny. Get a clear idea of whether the investment is paying off.

Every Engineering Decision Is A Buying Decision

For a long time, I’ve said that every engineering decision is a buying decision. That’s because engineers are the ones responsible for incurring cloud costs.

With AI, it’s not just engineers — it’s everyone. And it’s not just your cloud infrastructure — it’s everything.

AI companies are not just monetizing your IT infrastructure. They’re monetizing almost every thought that almost anyone in your company has. We’ve applied a technology that attaches a tax to every cogitation, and the till has transcended the engineering organization. Product, marketing, sales, HR, and anyone else using LLMs for input on their most important decisions are now triggering spend.

The point: AI cost chaos has just begun. As wild-west as it feels right now, the dumpster fire will only continue to rage, and the peril for those without cost observability will only increase.

What you can do now: Set up basic parameters to answer the question, “Was it worth it?”

When the spending-frenzy chickens come home to roost, I can assure you, it will have been.