If your SaaS organization is experimenting with OpenAI, your cloud bill just got a new line item. And unless you know exactly what drives it, that line item can go from manageable to margin-killer, fast.

It’s also worth clarifying that OpenAI is not the same as ChatGPT. ChatGPT is the familiar end-user app with a flat monthly subscription. OpenAI, meanwhile, is the platform behind it — a mix of models, features, and usage-based pricing that shifts depending on what you build.

Now, the last thing you want is to pay for something you didn’t even realize you were using, or didn’t need.

In this guide, we’ll explain how OpenAI pricing works, break down costs by model, highlight the factors that influence your bill, and share proven ways to optimize spend.

How Does OpenAI Pricing Work?

OpenAI pricing is usage-based, meaning you’re billed for the resources your application consumes. Costs scale directly with the amount of text, audio, or image data you process through OpenAI APIs.

At its core, the system comes down to tokens. A token is a chunk of text the model processes; in English, one token averages roughly four characters, or about three-quarters of a word. Every request consumes input tokens, every response consumes output tokens, and the two are priced separately.

One API call might cost just a fraction of a cent, but multiplied across thousands of daily users, the spend adds up quickly.
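As a rough sketch of that per-call arithmetic, the function below multiplies token counts by per-million-token rates. The function name, the 500-token call size, and the 10,000 daily calls are illustrative assumptions; the rates are the GPT-5 prices from the pricing table later in this guide.

```python
def request_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Return the dollar cost of one API call, given per-1M-token prices."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


# Hypothetical call: 500 prompt tokens and 500 response tokens at GPT-5 rates
per_call = request_cost(500, 500, input_price_per_m=1.25, output_price_per_m=10.00)
per_day = per_call * 10_000  # assumed 10,000 calls per day

print(f"${per_call:.6f} per call, ${per_day:.2f} per day")
```

A single call costs about half a cent, but the daily total is already in the tens of dollars, and most of it comes from output tokens.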

Understanding the pricing levers upfront can be the difference between a predictable budget line item and a surprise bill that eats into your gross margins.

Related: SaaS Companies Are Reporting Weaker Margins Than They Need To — Here’s Why

Start by understanding your OpenAI cost components.

What Factors Influence Your OpenAI Bill?

Several factors can push your costs up or down, often in ways that surprise many SaaS teams:

- Model choice: For example, GPT-5 commands a higher per-token price than GPT-3.5.

- Context windows: A bigger context window (128K tokens or more on recent models) lets a model take in more text per request, but every token you send is billed, so filling that window raises your token usage and your bill.

- Feature set: Beyond text models, OpenAI charges separately for embeddings (semantic search, personalization), image generation (DALL·E), and audio models (Whisper, text-to-speech).

- Volume and concurrency: Scaling from a pilot to thousands of concurrent users can turn pennies into thousands of dollars overnight.

- Deployment type: API access is metered per token, while ChatGPT Enterprise is priced per seat.
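To see why the context factor above matters, consider a multi-turn chat. The sketch below assumes each request resends the whole conversation so far, which is how stateless chat-style APIs are commonly used. The function name and the per-turn token counts are hypothetical.

```python
def total_input_tokens(turns, user_tokens, assistant_tokens, resend_history=True):
    """Sum the input tokens billed across one conversation."""
    history = 0
    total = 0
    for _ in range(turns):
        # Each request carries the new user message plus any prior history
        billed_input = user_tokens + (history if resend_history else 0)
        total += billed_input
        # The next turn's history grows by this turn's message and reply
        history += user_tokens + assistant_tokens
    return total


with_history = total_input_tokens(turns=10, user_tokens=200, assistant_tokens=300)
without_history = total_input_tokens(10, 200, 300, resend_history=False)

print(with_history, without_history)  # 24500 2000
```

Under these assumptions, a ten-turn conversation bills roughly twelve times more input tokens than ten independent requests, which is why trimming or summarizing history can matter more than the headline context limit.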

These are the knobs that tune your OpenAI bill. And since each model and feature is priced differently, the next step is understanding what those costs actually look like across OpenAI’s lineup.

OpenAI Pricing By Model (Or Service)

Here is a quick table to help you compare OpenAI’s tiered capabilities side-by-side.

| Model / Feature | Input Price (per 1M tokens) | Cached Input Price (per 1M tokens) | Output Price (per 1M tokens) | Best For |
| --- | --- | --- | --- | --- |
| GPT-5 (flagship) | $1.25 | $0.125 | $10.00 | High-complexity tasks (coding, reasoning, agentic workflows) |
| GPT-5 Mini | $0.25 | $0.025 | $2.00 | Mid-level complexity, high-volume use cases |
| GPT-5 Nano | $0.05 | $0.005 | $0.40 | Low-cost summarization/classification |
| GPT-4.1 (fine-tuning) | $3.00 | $0.75 | $12.00 | Legacy fine-tuning pricing (phase-out in favor of GPT-5) |
| GPT-4.1 Mini (fine-tuning) | $0.80 | $0.20 | $3.20 | Legacy fine-tuning tier |
| GPT-4.1 Nano (fine-tuning) | $0.20 | $0.05 | $0.80 | Legacy fine-tuning tier |
| GPT-4o (real-time API) | $5.00 | $2.50 | $20.00 | Multimodal (text, image, audio) workflows |
| GPT-4o Mini (real-time API) | $0.60 | $0.30 | $2.40 | Cheaper multimodal tasks |
| o1-Pro | $150.00 | N/A | $600.00 | Highest accuracy, heavy reasoning workflows (legacy) |
| GPT-4.5 (legacy) | $75.00 | N/A | $150.00 | High-compute use cases, phased out |

All pricing reflects August 2025 API rates and includes OpenAI’s general caching discounts where applicable.

Now, let’s translate that pricing table into real SaaS use-cases, so you can see how OpenAI pricing works in practice.

OpenAI Pricing Examples For SaaS Teams

We’ve based these examples on conservative token estimates and the official pricing rates for GPT-5 as of the date of this post.

Example 1. Customer support bot

Picture a support bot handling 10,000 queries a day. Each interaction takes about 500 tokens in the prompt and 500 in the response. Over a month, that adds up to roughly 300 million tokens split evenly between input and output.

Costs by model, for 150 million input tokens plus 150 million output tokens:

- GPT-5 flagship would cost about $1,690/month

- GPT-5 Mini would cost about $337.50/month, and

- GPT-5 Nano would come to about $67.50/month

Using GPT-5 Mini instead of the flagship reduces your cost by 80%, while still delivering reliable customer service.
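Here is a minimal sketch of the arithmetic behind this example, using the GPT-5 family rates from the pricing table. The 30-day month and the helper name are assumptions made for illustration.

```python
# Per-1M-token prices as (input, output), taken from the pricing table
PRICES = {
    "GPT-5": (1.25, 10.00),
    "GPT-5 Mini": (0.25, 2.00),
    "GPT-5 Nano": (0.05, 0.40),
}


def monthly_cost(model, input_tokens, output_tokens):
    """Return the monthly dollar cost for a model, rounded to cents."""
    input_price, output_price = PRICES[model]
    cost = (input_tokens / 1_000_000 * input_price
            + output_tokens / 1_000_000 * output_price)
    return round(cost, 2)


queries_per_day = 10_000
days = 30  # assumed month length
input_tokens = queries_per_day * 500 * days   # 150M input tokens
output_tokens = queries_per_day * 500 * days  # 150M output tokens

for model in PRICES:
    print(f"{model}: ${monthly_cost(model, input_tokens, output_tokens):,.2f}/month")
```

Because output tokens cost eight times more than input tokens across this family, shortening responses tends to save more than shortening prompts.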

Example 2. Analytics assistant for SaaS users

Now imagine an analytics assistant where 2,000 users each generate about 10 reports per month. Say a single report averages 2,000 input tokens and 1,000 output tokens. All told, that’s around 60 million tokens (40M input + 20M output) processed each month.

The costs would be:

- GPT-5 flagship: about $250/month

- GPT-5 Mini: about $50/month

- GPT-5 Nano: about $10/month

Structured reporting doesn’t always require top-tier reasoning power, of course, so Nano or Mini could do here.
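It can also help to express this scenario as a unit cost. The sketch below works out the cost per report and per user at the GPT-5 flagship rates from the pricing table; the function and variable names are hypothetical.

```python
INPUT_PRICE_PER_M = 1.25    # GPT-5 input rate from the pricing table
OUTPUT_PRICE_PER_M = 10.00  # GPT-5 output rate from the pricing table


def cost_per_report(input_tokens=2_000, output_tokens=1_000):
    """Return the dollar cost of generating one report."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


reports_per_user = 10
users = 2_000

per_report = cost_per_report()
per_user = per_report * reports_per_user
total = per_user * users

print(f"${per_report:.4f}/report, ${per_user:.3f}/user, ${total:.2f}/month")
```

A per-user figure like this is easier to compare against what each customer pays than a single monthly total.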

Related: How CloudZero’s OpenAI Integration Provides Unprecedented AI Unit Economic Insights

Example 3. Semantic Search With Embeddings

Embedding 10 million documents, at an average of 500 tokens each, means processing about 5 billion tokens. That’s a one-time indexing cost of around $500, which works out to a rate of about $0.10 per 1M tokens.

After that, query traffic is light by comparison. Even a million monthly queries averaging 1,000 tokens each (1 billion tokens in total) come in at about $100 per month.
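The embedding figures can be reproduced with a short sketch. The $0.10 per 1M tokens rate is an assumption inferred from the totals in this example, not a quoted price, and the function name is hypothetical.

```python
EMBEDDING_PRICE_PER_M = 0.10  # assumption: rate implied by $500 for 5B tokens


def embedding_cost(item_count, avg_tokens, price_per_m=EMBEDDING_PRICE_PER_M):
    """Return the dollar cost of embedding item_count texts."""
    total_tokens = item_count * avg_tokens
    return total_tokens / 1_000_000 * price_per_m


indexing = embedding_cost(10_000_000, 500)         # one-time document indexing
monthly_queries = embedding_cost(1_000_000, 1_000)  # recurring query traffic

print(f"Indexing: ${indexing:,.2f}, queries: ${monthly_queries:,.2f}/month")
```

Swap in the current rate for whichever embedding model you use; the structure of the calculation stays the same.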

Example 4. Multimodal app with image generation

Consider a SaaS design tool that generates 100,000 images per month. With GPT-Image-1 priced at approximately $0.04 per 512×512 image, the monthly bill comes to around $4,000.

Example 5. Voice-enabled SaaS feature (Whisper API)

A mid-sized SaaS company may need to transcribe customer calls, sales demos, and internal meetings every week, so 500 hours per month is a realistic estimate. That’s about 125 one-hour meetings per week across departments. A smaller SaaS company might need less, while a large enterprise SaaS could easily top 2,000 hours.

Those 500 hours of recorded meetings each month work out to 30,000 minutes of audio. At roughly $0.006 per minute with the Whisper API, the total comes to about $180.
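Image and audio features are billed per unit rather than per token, so the math is simpler. The sketch below reproduces Examples 4 and 5 using the approximate rates quoted in this guide; the function names are hypothetical.

```python
IMAGE_PRICE = 0.04             # approx. dollars per image, as quoted in Example 4
WHISPER_PRICE_PER_MIN = 0.006  # approx. dollars per audio minute, as quoted above


def image_cost(images):
    """Return the dollar cost of generating a number of images."""
    return images * IMAGE_PRICE


def transcription_cost(hours):
    """Return the dollar cost of transcribing a number of audio hours."""
    minutes = hours * 60
    return minutes * WHISPER_PRICE_PER_MIN


print(f"Images: ${image_cost(100_000):,.2f}/month")
print(f"Transcription: ${transcription_cost(500):,.2f}/month")
```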

Now, not every workload needs the horsepower (and the cost) of GPT-5. If you want to optimize your OpenAI spend, our in-depth OpenAI cost optimization strategies guide covers over a dozen ways to do exactly that. Here’s the quick version.

How To Reduce And Optimize OpenAI Costs

At CloudZero, we believe in smart optimization, not cutting AI or cloud costs at all costs. So, the best place to start is by tuning your stack for efficiency using the very levers that drive your bill.

For engineers, this means right-sizing context windows, intelligently mixing models, and leaning on embeddings wherever possible. Instead of calling GPT-5 for every search or classification task, pre-compute embeddings. They’re far cheaper and scale well for workloads like personalization or semantic search. You can also take advantage of OpenAI’s caching discounts by reusing system prompts and repeated instructions.
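To get a feel for what the caching discount is worth, the sketch below blends the regular and cached GPT-5 input rates from the pricing table. The 60% cached share is a hypothetical figure; the share you actually see depends on how much of each prompt repeats and on how OpenAI's caching treats your requests.

```python
def input_cost_with_caching(input_tokens, cached_fraction, price_per_m, cached_price_per_m):
    """Return the input-token cost when part of the input is billed at the cached rate."""
    cached = input_tokens * cached_fraction
    uncached = input_tokens - cached
    return (cached * cached_price_per_m + uncached * price_per_m) / 1_000_000


# 150M monthly input tokens at GPT-5 rates ($1.25 regular, $0.125 cached)
no_cache = input_cost_with_caching(150_000_000, 0.0, 1.25, 0.125)
with_cache = input_cost_with_caching(150_000_000, 0.6, 1.25, 0.125)  # assumed 60% cached

savings = 1 - with_cache / no_cache
print(f"${no_cache:.2f} -> ${with_cache:.2f} ({savings:.0%} lower input cost)")
```

Caching only reduces the input side of the bill, so it pays off most for workloads with long, repeated system prompts and short responses.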

For CFOs and FinOps, the focus is alignment. Model choice should track customer lifetime value (CLV). Spending GPT-5 flagship tokens makes sense when the feature directly drives retention, revenue, or competitive advantage.

Take the Next Step: Turn Your OpenAI Spending Into A Strategic Advantage

The key is to align OpenAI costs with value — then adjust accordingly. That starts with visibility: understanding the people, products, and processes driving your AI costs. With this insight, you can choose the right model for the right task and make OpenAI a growth enabler, not a margin-killer.

That’s where CloudZero comes in.

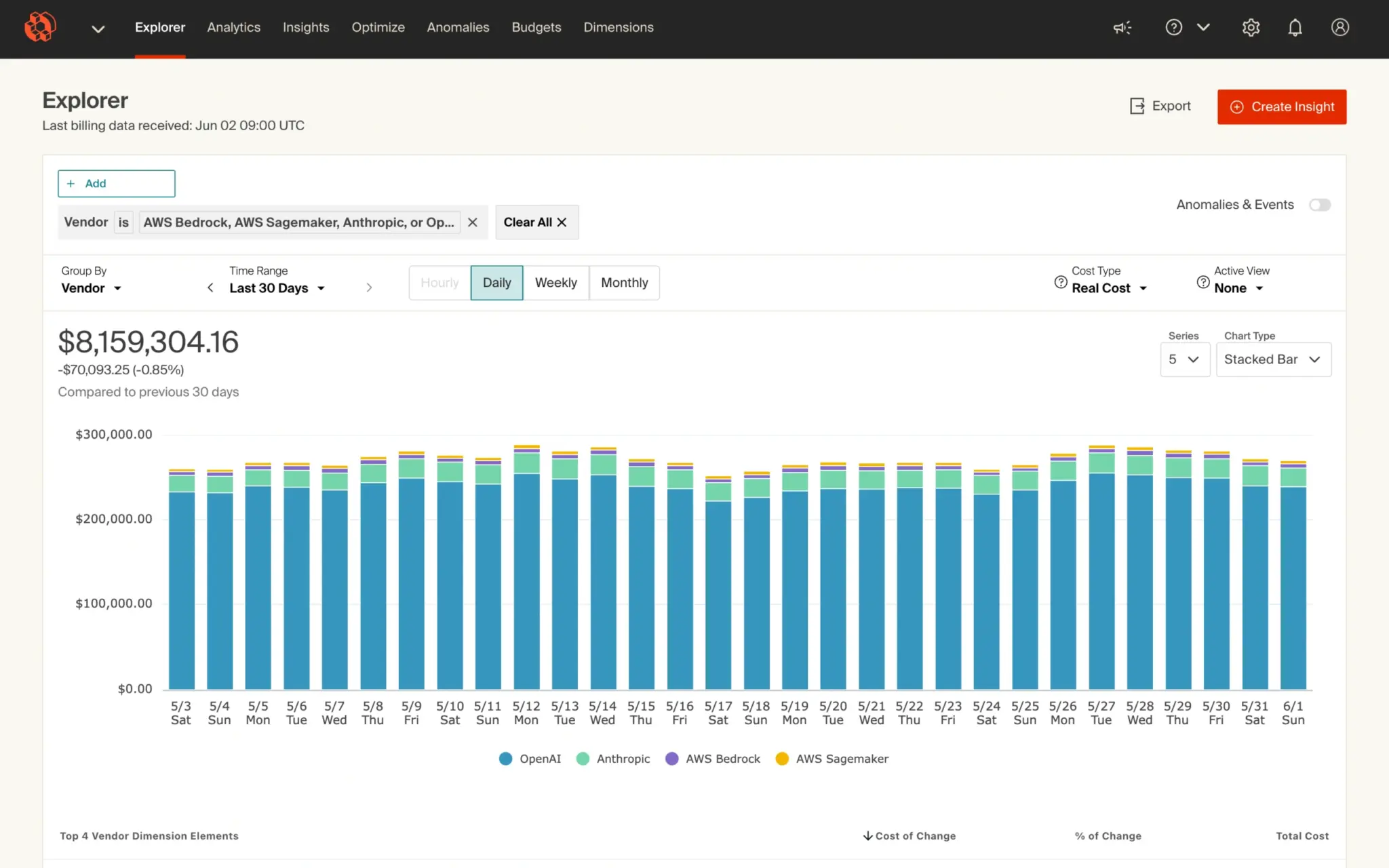

We give you real-time visibility into your OpenAI costs, broken down by AI model, feature, customer, deployment, environment, and more. Instead of guessing what’s driving your bill, you’ll know exactly where your AI budget is going, and how to optimize it.

Ready to take control of your OpenAI costs? See how teams like Helm.ai, Grammarly, and Skyscanner keep spend efficient and sustainable with CloudZero.
