The promise of cloud computing has always been about flexibility and cost-effectiveness. Yet many organizations find themselves trapped in a cycle of unpredictable costs and underutilized or overutilized resources.

The culprit?

A lack of understanding about the power of scaling within platforms like Amazon’s Elastic Kubernetes Service (EKS).

This article aims to demystify the concept, showcasing how proper scaling strategies can lead to significant cost savings, optimized performance, and a more streamlined cloud experience.

Introduction to Scaling and EKS

EKS is a managed service that allows users to run Kubernetes on Amazon Web Services (AWS) without the need to install, operate, and maintain their own Kubernetes control plane or nodes. It’s designed to combine the flexibility of Kubernetes with the reliability, security, and scalability of AWS.

One of the standout features of EKS is its ability to auto-scale, ensuring optimal resource utilization and cost management. There are three primary autoscaling strategies and tools within EKS:

- Horizontal Pod Autoscaling (HPA)

- Vertical Pod Autoscaling (VPA)

- Cluster Autoscaling

Let’s spend some time going over each of these options.

Horizontal Pod Autoscaling

Horizontal Pod Autoscaling is a feature within Kubernetes that automatically adjusts the number of pod replicas in a Deployment, ReplicaSet, or StatefulSet. It operates based on observed metrics such as CPU utilization, memory usage, or custom metrics defined by the user.

When the observed metric crosses a user-defined threshold, HPA will either increase or decrease the number of pod replicas to ensure that the application can handle the current load.
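The Kubernetes documentation describes the core of the HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), clamped to the configured minimum and maximum replica counts. The shell sketch below reproduces that arithmetic for whole-number metric values. It deliberately ignores the controller's tolerance band (10% by default), scale-down stabilization, and pods with missing metrics, so treat it as an illustration rather than the controller's exact behavior; the function name hpa_desired_replicas is hypothetical.

```shell
# Sketch of the HPA replica calculation from the Kubernetes docs:
#   desired = ceil(current * currentMetric / targetMetric), clamped to [min, max]
# Assumptions: whole-number inputs; tolerance, stabilization windows and
# missing metrics are ignored. hpa_desired_replicas is a hypothetical helper.
hpa_desired_replicas() {
  current=$1 metric=$2 target=$3 min=$4 max=$5
  # Integer ceiling division: ceil(a / b) == (a + b - 1) / b for positive values
  desired=$(( (current * metric + target - 1) / target ))
  # Keep the result inside the configured replica range
  if [ "$desired" -lt "$min" ]; then
    desired=$min
  elif [ "$desired" -gt "$max" ]; then
    desired=$max
  fi
  echo "$desired"
}
```

For example, four replicas averaging 90% CPU against a 50% target yield ceil(4 × 90 / 50) = ceil(7.2) = 8 replicas.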

The primary purpose of HPA is to ensure that applications can efficiently handle varying amounts of traffic.

For instance, during peak times, HPA can automatically scale out (increase replicas) to accommodate the increased demand. Conversely, during periods of low traffic, it can scale in (decrease replicas) to conserve resources.

This dynamic adjustment ensures that resources are not wasted on over-provisioned pods, leading to cost savings and optimized performance.

How Can We Begin Using HPA On An EKS Cluster?

Prerequisites

- kubectl is installed and connected to your EKS cluster

- You have Metrics Server installed

- You’ve deployed an application within your cluster
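A quick way to confirm the Metrics Server prerequisite is to look for its Deployment, which the upstream manifest creates as metrics-server in the kube-system namespace. The sketch below installs it from the kubernetes-sigs/metrics-server release manifest only when that Deployment is missing. The helper name ensure_metrics_server is hypothetical, and the script assumes kubectl already points at your EKS cluster.

```shell
# Hypothetical helper: install Metrics Server only if it is not already present.
# Assumes kubectl is configured for your EKS cluster
# (for example via: aws eks update-kubeconfig --region <region> --name <cluster>).
METRICS_SERVER_URL="https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml"

ensure_metrics_server() {
  if kubectl get deployment metrics-server -n kube-system >/dev/null 2>&1; then
    echo "metrics-server already installed"
  else
    echo "installing metrics-server"
    kubectl apply -f "$METRICS_SERVER_URL"
  fi
}

# Usage:
#   ensure_metrics_server
#   kubectl top nodes   # prints CPU/memory figures once metrics are available
```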

Because HPA is built into Kubernetes as a standard API, no extra controller needs to be installed. Once Metrics Server is running, creating an HPA resource is as simple as running the following command:

kubectl autoscale deployment <DeploymentName> --cpu-percent=50 --min=1 --max=10

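The above command creates an HPA that targets an average CPU utilization of 50% of the pods’ requested CPU, adding replicas when usage rises above that target and removing them when it falls below, while keeping the deployment between 1 and 10 replicas at any given time.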
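The same autoscaler can also be written declaratively, which is easier to keep in version control. The function below prints an autoscaling/v2 HorizontalPodAutoscaler manifest that should be roughly equivalent to the kubectl autoscale command above. The helper name hpa_manifest and the deployment name my-app are placeholders, and the manifest assumes the scaling target is a Deployment.

```shell
# Hypothetical helper: print an autoscaling/v2 HPA manifest for a Deployment.
# Arguments: deployment name, min replicas, max replicas, target CPU utilization (%).
hpa_manifest() {
  cat <<EOF
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: $1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: $1
  minReplicas: $2
  maxReplicas: $3
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: $4
EOF
}

# Usage (requires cluster access):
#   hpa_manifest my-app 1 10 50 | kubectl apply -f -
#   kubectl get hpa my-app
```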

Vertical Pod Autoscaling

Vertical Pod Autoscaling automatically adjusts the CPU and memory resource requests and limits of individual pods in a Kubernetes deployment.

Unlike Horizontal Pod Autoscaling (HPA), which scales the number of pod replicas, VPA focuses on scaling the resources allocated to each pod. It continually analyzes resource consumption and, based on this analysis, adjusts resource requests so that pods have what they need without being over-provisioned.

The primary purpose of VPA is to optimize resource utilization for individual pods. By dynamically adjusting resource requests and limits, VPA ensures that:

- Pods receive the necessary resources to function optimally.

- Resources are not wasted on over-allocated pods, leading to cost savings.

- The risk of pod evictions due to resource constraints is minimized.

How Can We Begin Using VPA On An EKS Cluster?

Prerequisites

- kubectl is installed and connected to your EKS cluster

- You have Metrics Server installed

- You’ve deployed an application within your cluster

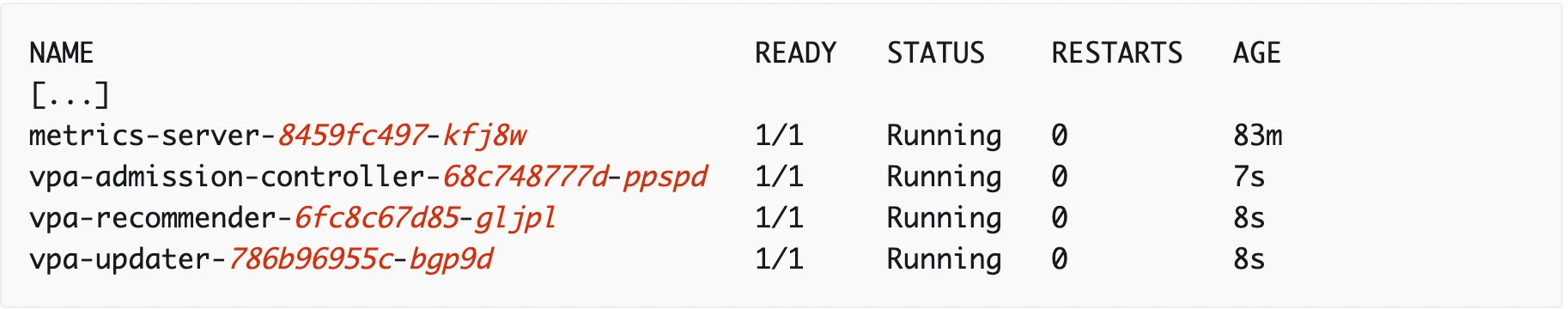

The process of using VPA is a little more complicated than with HPA. VPA is not part of a standard Kubernetes installation, so its components must first be installed in the cluster, which you can do from the command line.

1. Clone the kubernetes/autoscaler GitHub repository

git clone https://github.com/kubernetes/autoscaler.git

2. Change to the vertical-pod-autoscaler directory

cd autoscaler/vertical-pod-autoscaler/

3. Deploy the Vertical Pod Autoscaler to your cluster with the following command

./hack/vpa-up.sh

4. Verify that the Vertical Pod Autoscaler pods (vpa-admission-controller, vpa-recommender, and vpa-updater) have been created successfully

kubectl get pods -n kube-system | grep vpa
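Installing VPA only deploys its components; nothing is resized until a VerticalPodAutoscaler object targets a workload. A minimal sketch follows, assuming a Deployment named my-app (a placeholder). The updateMode field accepts values such as "Off" (publish recommendations only), "Initial" (apply them only when pods are created), "Recreate", and "Auto"; starting with "Off" lets you review recommendations before VPA begins evicting pods. The helper name vpa_manifest is hypothetical.

```shell
# Hypothetical helper: print a VerticalPodAutoscaler manifest for a Deployment.
# Arguments: deployment name, update mode ("Off", "Initial", "Recreate" or "Auto").
vpa_manifest() {
  cat <<EOF
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: $1
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: $1
  updatePolicy:
    updateMode: "$2"
EOF
}

# Usage (requires the VPA components installed above):
#   vpa_manifest my-app Off | kubectl apply -f -
#   kubectl describe vpa my-app   # recommended requests appear under Status
```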

Cluster Autoscaling

Cluster Autoscaling (CA) is a feature that adjusts the size of the cluster by adding or removing nodes based on resource requirements and constraints. If pods cannot be scheduled because no existing node has enough free resources, CA will add more nodes.

Conversely, if nodes are underutilized and their pods can be placed elsewhere, CA will consider removing these nodes.
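As a rough illustration of the scale-down side, the Cluster Autoscaler FAQ describes a node as a removal candidate when the sum of its pods’ CPU and memory requests is below a utilization threshold (50% of allocatable by default, configurable with --scale-down-utilization-threshold) and its pods can be moved elsewhere. The sketch below checks only the CPU utilization half of that rule, in millicores. The helper name is hypothetical, and the real autoscaler also considers memory, PodDisruptionBudgets, and how long the node has been unneeded.

```shell
# Hypothetical helper sketching Cluster Autoscaler's utilization test for scale-down.
# Arguments: summed CPU requests of the node's pods (millicores),
#            node allocatable CPU (millicores), threshold percent (CA default: 50).
# Prints "candidate" if utilization is below the threshold, otherwise "keep".
# Simplification: ignores memory, PodDisruptionBudgets and rescheduling checks.
ca_scale_down_check() {
  requested=$1 allocatable=$2 threshold=${3:-50}
  # Integer percentage; flooring does not change a "< integer threshold" comparison
  utilization=$(( requested * 100 / allocatable ))
  if [ "$utilization" -lt "$threshold" ]; then
    echo "candidate"
  else
    echo "keep"
  fi
}
```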

The primary purposes of Cluster Autoscaling are:

- Resource Optimization – Ensure that the cluster has enough resources to meet the demands of the workloads without over-provisioning, which can lead to unnecessary costs.

- Improved Availability – By automatically adding nodes when required, CA ensures that applications have the resources they need to run, leading to better availability and performance.

- Cost Efficiency – By removing underutilized nodes, CA helps in reducing costs associated with idle or underused resources.

How Can We Begin Using CA On An EKS Cluster?

Out of all of the scaling options we’ve gone over, CA is by far the most complicated to begin using. You’ll need a combination of IAM roles and policies, Service Accounts, and ConfigMaps deployed within the cluster to begin using the tool.

Refer to this document for step-by-step directions to install CA within your cluster.
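Part of that setup is telling CA which node groups it may resize. The Cluster Autoscaler’s AWS documentation describes auto-discovery through two Auto Scaling group tags, k8s.io/cluster-autoscaler/enabled and k8s.io/cluster-autoscaler/<cluster-name>, matched by the --node-group-auto-discovery flag. The sketch below only builds that flag for a given cluster name; the helper name ca_autodiscovery_flag and the cluster name my-cluster are placeholders, and the IAM permissions and service account still need to be configured separately.

```shell
# Hypothetical helper: build the Cluster Autoscaler auto-discovery argument for EKS.
# CA then manages Auto Scaling groups carrying BOTH tag keys below
# (EKS managed node groups are typically tagged this way automatically).
ca_autodiscovery_flag() {
  cluster_name=$1
  printf '%s\n' "--node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/${cluster_name}"
}

# Usage: add the output to the cluster-autoscaler container's command, e.g.
#   ca_autodiscovery_flag my-cluster
```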

Limitations Within Scaling Options

HPA limits

HPA’s ability to dynamically adjust the number of pod replicas based on utilization is incredibly powerful, but its reactive nature means it scales based on current conditions rather than predicting future needs.

This can lead to a slight delay in scaling during sudden traffic spikes, potentially causing service degradation.

Additionally, HPA calculates resource utilization as a percentage of the pods’ resource requests (not their limits). If requests are missing or set inaccurately, HPA might not function as expected, leading to over-provisioning and increased costs.
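To see why requests matter, note that for CPU the HPA compares each pod’s usage against its CPU request. The sketch below computes that percentage for a single pod: the same 200m of usage reads as 80% against a 250m request but only 20% against a 1000m request, so an oversized request can keep an HPA with a 50% target from ever scaling out. The helper name is hypothetical; actual requests would be set in the pod spec or with a command such as kubectl set resources deployment <DeploymentName> --requests=cpu=250m.

```shell
# Hypothetical helper: CPU utilization as HPA computes it, i.e. relative to the
# pod's CPU *request* (both arguments in millicores). Limits are not involved.
cpu_utilization_percent() {
  usage_m=$1 request_m=$2
  if [ "$request_m" -le 0 ]; then
    # Without a request, HPA cannot compute CPU utilization for the pod.
    echo "undefined: no CPU request set" >&2
    return 1
  fi
  echo $(( usage_m * 100 / request_m ))
}

# Same usage, different requests:
#   cpu_utilization_percent 200 250    -> 80  (above a 50% target: scale out)
#   cpu_utilization_percent 200 1000   -> 20  (below a 50% target: no scale out)
```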

Continuously monitoring metrics and making scaling decisions can also introduce overhead, especially in large clusters, impacting cost efficiency.

VPA limits

A rather significant limitation of VPA is that it applies new CPU and memory requests and limits by evicting a pod and recreating it, which causes brief service disruptions. This can be even more problematic for stateful workloads, such as databases, that rely on persistent connections.

Additionally, VPA makes decisions based on historical usage, which might not always align with future demands. This reactive approach can sometimes result in over-allocation of resources, leading to unnecessary costs.

Combining VPA and HPA can also lead to conflicts, particularly when both act on the same CPU or memory metric, as each tries to scale resources based on different criteria. This complicates cost optimization strategies.
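The VPA project’s own documentation advises against running VPA and HPA on the same CPU or memory metric. One possible way to combine them, sketched below under the assumption that an HPA already scales my-app (a placeholder) on CPU, is to restrict VPA to memory through its resourcePolicy so that each autoscaler owns a different resource. The helper name vpa_memory_only_manifest is hypothetical.

```shell
# Hypothetical helper: VPA manifest limited to memory, leaving CPU to an HPA.
# Arguments: deployment name, optional update mode (defaults to "Auto").
vpa_memory_only_manifest() {
  mode=${2:-Auto}
  cat <<EOF
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: $1
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: $1
  updatePolicy:
    updateMode: "$mode"
  resourcePolicy:
    containerPolicies:
      - containerName: "*"
        controlledResources: ["memory"]
EOF
}

# Usage (requires the VPA components and an existing CPU-based HPA):
#   vpa_memory_only_manifest my-app | kubectl apply -f -
```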

CA limits

While CA focuses on ensuring that workloads have the necessary resources, it can sometimes lead to suboptimal scaling decisions. For instance, adding an entire node to host a small pod can be overkill, leading to underutilized resources and increased costs.

There’s also a delay between when resources are needed and when CA adds nodes, which can impact performance and user experience.

Additionally, frequent scaling actions can lead to unpredictable costs, especially if nodes are added and removed often. In scenarios with multiple node groups of varying instance types and sizes, CA’s scaling decisions can become more complex, potentially impacting cost optimization.

How CloudZero Can Help Mitigate These Issues

CloudZero is a cloud cost intelligence platform that provides insights and analytics into cloud spending, making it a valuable platform for organizations leveraging EKS’ scaling options. While HPA, VPA, and CA offer dynamic resource adjustments, they can sometimes lead to unpredictable costs and suboptimal resource allocations, as noted above.

CloudZero addresses these challenges by offering real-time visibility into cost drivers, helping organizations understand the financial impact of their scaling decisions. By analyzing and correlating cost data with scaling events, CloudZero can highlight inefficiencies, such as over-provisioned resources or frequent scaling actions that lead to increased costs.

CloudZero can also provide recommendations for more cost-effective scaling strategies, ensuring that organizations not only meet their performance needs but also optimize their cloud spending within EKS environments.

Conclusion

Amazon’s Elastic Kubernetes Service offers a suite of scaling options, including Horizontal Pod Autoscaling, Vertical Pod Autoscaling, and Cluster Autoscaling, each designed to enhance resource utilization and cost management.

However, as detailed in this article, each comes with its own set of challenges that can inadvertently lead to cost spikes and resource inefficiencies. Luckily, CloudZero provides the functionality needed to mitigate these pitfalls, offering the insights and analytics necessary to navigate the complexities of EKS scaling.

By bridging the gap between dynamic resource adjustments and cost predictability, organizations can harness the full potential of EKS, ensuring a balanced, cost-effective, and high-performance cloud environment.