Think of a stateful application like a conversation with a barista who remembers your order every time you walk in. They know what you had yesterday, how you like it prepared, and what you’ll probably want next.

That memory makes the experience smoother, but it also means that if that barista isn’t around, your experience can break down entirely.

A stateless application, on the other hand, is more like ordering from a self-service kiosk. Each time you enter your request, you start from scratch, as if it were the first time. No hand-off is required.

In software, this same distinction, stateful vs. stateless, shapes how applications behave in the cloud.

For engineers, this choice affects performance and resilience. For SaaS leaders and finance teams, it influences cost predictability, budget variance, and how your SaaS margins move.

Here, we’ll explain in plain language where stateful and stateless applications differ, compare their trade-offs, and break down why this architectural decision plays a much bigger role in cloud cost optimization than most teams realize.

We’ll focus specifically on how state management changes scaling behavior, failure recovery, infrastructure utilization, and cost elasticity in real cloud environments — not just in theory.

What Is a Stateful Application?

Before diving into definitions, it’s important to understand that “stateful vs. stateless” is not just a software design choice — it directly affects how cloud resources are allocated, scaled, and paid for over time.

A stateful application is an application that retains information (the state) about previous interactions and uses that information to process future requests.

The application relies on stored context such as session data, user progress, or in-memory variables to function correctly. That state may live in application memory, on local disk, or in tightly coupled storage systems.

Any application that does one or more of the following is operating in a stateful way:

- Maintains server-side user sessions

- Tracks progress through multi-step workflows

- Relies on in-memory data between requests

For example, a web app that stores session data directly on the application server becomes stateful by design. But if that server goes down, or traffic is routed elsewhere, the user’s session can be lost unless special handling is in place.

That “special handling” often introduces additional infrastructure requirements — such as sticky load balancing, replication, or standby capacity — which can increase baseline cloud spend.
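To make the web-app example concrete, here is a minimal Python sketch of sessions held in a single server's memory. `AppInstance`, `add_to_cart`, and `view_cart` are hypothetical names for illustration; a real app would typically rely on its framework's session handling. The only point is that the session lives inside one process, so a request routed to a second instance finds nothing.

```python
# A minimal sketch of server-side, in-memory sessions (stateful design).
# AppInstance and its methods are hypothetical names used only for illustration.

class AppInstance:
    """One application server that keeps sessions in its own process memory."""

    def __init__(self, name: str) -> None:
        self.name = name
        # session_id -> session data; this dict exists only inside this instance
        self.sessions: dict[str, dict] = {}

    def add_to_cart(self, session_id: str, item: str) -> list[str]:
        # Create the session on first use, then append the item to its cart.
        session = self.sessions.setdefault(session_id, {"cart": []})
        session["cart"].append(item)
        return session["cart"]

    def view_cart(self, session_id: str) -> list[str]:
        # Only sessions created on *this* instance are visible here.
        return self.sessions.get(session_id, {"cart": []})["cart"]


server_a = AppInstance("a")
server_b = AppInstance("b")

server_a.add_to_cart("user-42", "latte")
print(server_a.view_cart("user-42"))  # ['latte']
print(server_b.view_cart("user-42"))  # [] because the request landed on another instance
```

If `server_a` crashes or is scaled away, its `sessions` dict disappears with it, which is exactly the gap that sticky routing, replication, or standby capacity is meant to cover.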

How stateful applications affect your cloud costs, scalability, and business workflows

From a cost perspective, stateful architectures tend to concentrate spend into fewer, longer-lived resources rather than distributing it dynamically across demand.

From a business perspective, stateful applications tend to introduce stickiness, not just in the user experience, but in the infrastructure.

Because state is preserved across requests, stateful applications often assume continuity. The same user connects to the same instance, so the system can reliably retrieve prior context before responding.

Moreover, since the state is bound to specific resources, stateful systems often require:

- Longer-lived compute instances

- More careful traffic routing

- Higher baseline capacity to avoid disruption

This can make your costs less elastic. In practice, stateful systems often scale “up” more easily than they scale “down”: even when demand drops, removing capacity risks losing sessions or degrading performance, so teams can end up paying for capacity they no longer need.
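One reason scaling down is risky shows up in how sticky routing can be implemented. The sketch below assumes the simplest scheme, hashing the session ID modulo the number of instances (`route` is a hypothetical helper; production load balancers typically use cookies or consistent hashing instead). Removing one of four instances remaps most sessions, not just the ones on the removed instance, and any remapped session whose data lived in memory is lost.

```python
# A minimal sketch of sticky routing by hashing the session ID.
# Simple modulo hashing is an assumption chosen because it makes the effect visible.
import hashlib


def route(session_id: str, instances: list[str]) -> str:
    """Return the instance that owns this session (same input -> same instance)."""
    digest = hashlib.sha256(session_id.encode()).digest()
    return instances[int.from_bytes(digest[:8], "big") % len(instances)]


sessions = [f"user-{i}" for i in range(1000)]
four = ["a", "b", "c", "d"]
three = ["a", "b", "c"]  # scale down by removing instance "d"

before = {s: route(s, four) for s in sessions}
after = {s: route(s, three) for s in sessions}

# Count sessions whose owning instance changed after the scale-down.
moved = sum(before[s] != after[s] for s in sessions)
print(f"{moved} of {len(sessions)} sessions now land on a different instance")
```

Consistent hashing limits remapping to roughly the sessions that lived on the removed instance, but those still have to be drained or replicated before the instance can safely go away.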

In FinOps terms, stateful workloads often shift costs from variable to semi-fixed, reducing the direct correlation between usage and spend.

For SaaS finance and FinOps teams, this shows up as:

- Higher fixed cloud spend

- Slower response to usage changes

- Cost patterns that are harder to forecast or attribute
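A back-of-the-envelope calculation shows why peak-provisioned capacity behaves like a semi-fixed cost. Every input here (the hourly demand curve, 100 requests per second per instance, $0.20 per instance-hour) is a made-up assumption, and the stateless case is idealized: real autoscalers react with some lag and keep headroom, so the gap in practice is usually smaller.

```python
# Hypothetical inputs: a 24-hour demand curve (requests/sec), per-instance
# capacity, and hourly price. None of these values come from a real workload.
import math

HOURLY_DEMAND_RPS = [200, 150, 120, 100, 100, 150, 400, 800, 1200, 1400, 1500, 1500,
                     1450, 1400, 1350, 1300, 1200, 1000, 800, 600, 450, 350, 300, 250]
RPS_PER_INSTANCE = 100          # assumed capacity of one instance
PRICE_PER_INSTANCE_HOUR = 0.20  # assumed hourly price in USD


def instances_needed(rps: float) -> int:
    """Smallest instance count that covers the given load (at least one)."""
    return max(1, math.ceil(rps / RPS_PER_INSTANCE))


# Stateful pattern: sized for peak all day, because scaling down risks sessions.
peak = max(instances_needed(r) for r in HOURLY_DEMAND_RPS)
stateful_hours = peak * len(HOURLY_DEMAND_RPS)

# Stateless pattern (idealized): each hour runs only what that hour requires.
stateless_hours = sum(instances_needed(r) for r in HOURLY_DEMAND_RPS)

for label, hours in [("stateful (fixed at peak)", stateful_hours),
                     ("stateless (tracks demand)", stateless_hours)]:
    # Share of these instance-hours that demand did not require,
    # measured against the demand-following baseline.
    idle = 1 - stateless_hours / hours
    cost = hours * PRICE_PER_INSTANCE_HOUR
    print(f"{label}: {hours} instance-hours, ${cost:.2f}, idle {idle:.0%}")
```

With these inputs the peak-provisioned fleet runs 360 instance-hours against 185 for the demand-following one, so roughly half of the fixed capacity sits idle, and that spend stays the same whether usage rises or falls.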

None of this makes stateful applications “bad,” but it does mean their cost behavior is shaped as much by architectural design as by raw usage.

What Is a Stateless Application?

Stateless design fundamentally changes cost behavior by allowing infrastructure to scale strictly with demand, rather than with session continuity or workflow duration.

A stateless application does not retain information about previous requests. Each request is treated as a standalone event and contains all the information the system needs to process it.

Just like at a self-service kiosk, every time you order, you start fresh. You tell the system exactly what you want, and it doesn’t need to know anything about your last visit to get it right.

Now, picture this: if one kiosk goes offline, another can take its place instantly, with no memory transfer or hand-off needed to serve you.

In a stateless design, the app does not depend on in-memory session data or local state tied to a specific instance. If state is required, it is stored externally (in a database, object storage, or distributed cache) and retrieved as needed.
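Here is a cart example written in the stateless style, as a minimal sketch. `SharedStore` is a hypothetical in-process stand-in for an external database or distributed cache so the code runs on its own; in production it would be a network call to a real store. Because instances keep nothing between requests, either one can serve the user, and losing one loses no data.

```python
# A minimal sketch of a stateless handler with state kept in an external store.
# SharedStore and StatelessInstance are hypothetical names for illustration.

class SharedStore:
    """Stand-in for a database or distributed cache shared by all instances."""

    def __init__(self) -> None:
        self._data: dict[str, list[str]] = {}

    def get_cart(self, session_id: str) -> list[str]:
        return list(self._data.get(session_id, []))  # return a copy

    def save_cart(self, session_id: str, cart: list[str]) -> None:
        self._data[session_id] = list(cart)


class StatelessInstance:
    """Holds no per-user data; everything comes from the request or the store."""

    def __init__(self, name: str, store: SharedStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> list[str]:
        cart = self.store.get_cart(session_id)  # fetch state for this request
        cart.append(item)
        self.store.save_cart(session_id, cart)  # persist before responding
        return cart


store = SharedStore()
a = StatelessInstance("a", store)
b = StatelessInstance("b", store)

a.add_to_cart("user-42", "latte")
print(b.add_to_cart("user-42", "croissant"))  # ['latte', 'croissant']
del a  # dropping an instance removes no user data
print(store.get_cart("user-42"))              # ['latte', 'croissant']
```

The read-modify-write in `add_to_cart` is not safe under concurrent requests for the same session; a real store would need an atomic operation or a transaction for that.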

How stateless applications affect your cloud costs, scalability, and business workflows

Because no request relies on prior interactions, stateless applications are inherently easier to replicate, scale, and replace; after all, any instance can handle any request. This property enables aggressive auto-scaling, faster instance replacement, and more granular cost control in cloud-native environments. Stateless applications also make cost attribution easier, since infrastructure usage aligns more directly with traffic, requests, or workload volume.

Most cloud-native services you interact with today are built in a stateless way, even if it’s not obvious from the user experience.

Examples of stateless app use cases include:

- RESTful APIs

- Microservices

- Serverless functions

- Frontend services backed by shared data stores

In these systems, if one instance disappears, another can immediately take its place without disrupting users. Traffic can be routed anywhere because no request depends on a specific server remembering what happened before.

This is why stateless applications are the default recommendation for containerized environments, auto-scaling groups, and platforms like Kubernetes.

They remove many of the coordination challenges that come with cloud-native and distributed systems.

Stateful Vs. Stateless Applications: What’s the Difference?

Before we dive into the details, here is a quick comparison of how architectural state influences scaling behavior, failure recovery, and cost predictability.

| Dimension | Stateful Applications | Stateless Applications |
|---|---|---|
| State handling | Stores session or request context between interactions | Does not store context between requests |
| Request dependency | Requests depend on previous interactions | Each request is fully independent |
| Scalability | Harder to scale horizontally | Easier to scale horizontally |
| Fault tolerance | Failures can disrupt active sessions | Failures are easier to recover from |
| Traffic routing | Often requires sticky sessions | Requests can be routed anywhere |
| Cloud-native fit | More common in legacy or tightly coupled systems | Designed for modern cloud-native environments |
| Cost behavior | Less elastic, higher baseline spend | More elastic, scales with demand |
| Cost predictability | Harder to forecast and attribute | Easier to model and optimize |

Table: Side-by-side comparison of stateless vs. stateful applications

From a cost-management perspective, the most important difference is elasticity: stateless systems can usually reduce spend as demand falls, while stateful systems often cannot without redesign.

Stateless Vs. Stateful: How the Differences Show Up in Cloud Environments

When it comes right down to it, these are the key differences to consider.

Scalability under load

Stateless systems can grow and shrink with demand because any new instance can immediately handle your traffic. But because stateful apps rely on user sessions or workflows tied to specific instances, adding or removing capacity isn’t always seamless.
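Kubernetes documents the Horizontal Pod Autoscaler's core rule as `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`. The sketch below implements only that rule (it omits HPA's tolerance band, stabilization window, and min/max bounds), then adds a hypothetical `safe_scale_down` guard that models a stateful service able to remove only replicas holding no in-memory sessions.

```python
# Core replica formula documented for the Kubernetes Horizontal Pod Autoscaler,
# without the tolerance, stabilization, and min/max logic HPA also applies.
import math


def desired_replicas(current: int, current_metric: float, target_metric: float) -> int:
    return math.ceil(current * (current_metric / target_metric))


def safe_scale_down(current: int, desired: int, active_sessions: list[int]) -> int:
    """Hypothetical stateful guard: only replicas with zero sessions may be removed."""
    if desired >= current:
        return desired  # scaling up (or holding) is never blocked
    removable = sum(1 for n in active_sessions if n == 0)
    return current - min(current - desired, removable)


# Traffic falls: 10 replicas averaging 30% CPU against a 60% target.
target = desired_replicas(current=10, current_metric=30, target_metric=60)
print(target)  # 5: a stateless deployment can drop to 5 replicas right away

# Same drop for a stateful service where 8 of 10 replicas still hold sessions.
sessions_per_replica = [12, 0, 7, 3, 0, 9, 4, 1, 6, 2]
print(safe_scale_down(10, target, sessions_per_replica))  # 8
```

In this toy model the stateful service keeps three replicas more than demand requires until sessions drain, which is the “scales up more easily than down” behavior in miniature.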

Resilience and failure recovery

When a stateful instance fails, the system may lose in-progress sessions or require recovery logic to restore context. This adds operational complexity and increases the blast radius of failures.

In stateless architectures, failures are less disruptive. Instances can be replaced without users noticing, because no critical memory lives on the instance itself. And faster recovery reduces downtime risk (and the related costs, like rework and customer churn). It also reduces the need for expensive redundancy and overprovisioning designed solely to protect in-memory state.

Infrastructure utilization

Stateful systems tend to keep resources running longer to preserve in-memory data and session continuity. This can leave idle capacity during off-peak periods, which translates into higher fixed cloud costs. Idle capacity is one of the most common drivers of wasted cloud spend in stateful architectures.

With a stateless system, it is easier to rightsize because resources can spin down when demand drops without risking lost state.

Neither approach guarantees efficient costs on its own. However, stateless architectures can make it easier to see and control how your costs scale with changes in usage.
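One way to see whether costs are scaling with usage is to track a unit cost, such as spend per request, over time. The heuristic below is an illustrative assumption, not a standard method: if cost per request rises by more than a chosen tolerance (5% here, picked arbitrarily), treat the change as structural, for instance new state or overprovisioning; otherwise treat it as usage-driven. `classify_cost_change` is a hypothetical helper and all figures are invented.

```python
# Hypothetical unit-cost heuristic: compare cost per request between two periods.


def classify_cost_change(prev_cost: float, prev_requests: int,
                         cur_cost: float, cur_requests: int,
                         tolerance: float = 0.05) -> tuple[str, float]:
    prev_unit = prev_cost / prev_requests
    cur_unit = cur_cost / cur_requests
    change = (cur_unit - prev_unit) / prev_unit  # relative change in unit cost
    if change > tolerance:
        return "structural", change
    return "usage-driven", change


# Traffic up 40%, spend up 38%: unit cost roughly flat.
print(classify_cost_change(10_000, 50_000_000, 13_800, 70_000_000))

# Traffic flat, spend up 25% after a release: unit cost jumped.
print(classify_cost_change(10_000, 50_000_000, 12_500, 50_000_000))
```

A demand-following stateless fleet tends to keep this ratio steady as traffic moves, while peak-sized stateful capacity makes unit cost climb whenever traffic falls.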

Now, here’s the thing.

Most Production Systems Are Hybrid

Hybrid architectures are where cost visibility challenges tend to compound, because state-related costs are distributed across multiple layers and services.

Very few real-world systems are purely stateful or purely stateless. Instead, patterns like these are common:

- Stateless application layers backed by stateful databases

- Stateless APIs with external session stores

- Microservices that are stateless individually, but stateful as a system

As you’ve probably noticed, the goal isn’t to eliminate state. It’s to place it intentionally where it’s easiest to manage, scale, and observe.

This is also where cost visibility becomes essential. When state is spread across layers, clouds, and services, it’s easy for architectural decisions to drive your spending without anyone noticing until a surprise bill hits.
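A common starting point for that visibility is attributing spend to architectural layers, for example by tagging resources. The sketch assumes hypothetical billing line items that already carry a `layer` tag; the services, tag values, and dollar amounts are invented. Untagged spend is surfaced as its own bucket, since those are exactly the costs no layer owns.

```python
# Hypothetical billing line items tagged by architectural layer.
# Services, tag values, and amounts are illustrative, not real data.
from collections import defaultdict

line_items = [
    {"service": "compute", "layer": "stateless-api", "usd": 410.0},
    {"service": "compute", "layer": "stateless-api", "usd": 385.0},
    {"service": "database", "layer": "stateful-db", "usd": 920.0},
    {"service": "cache", "layer": "session-store", "usd": 260.0},
    {"service": "storage", "layer": "stateful-db", "usd": 140.0},
    {"service": "network", "layer": None, "usd": 95.0},  # missing tag
]


def spend_by_layer(items: list[dict]) -> dict[str, float]:
    """Sum spend per layer tag, grouping untagged items under 'untagged'."""
    totals: defaultdict[str, float] = defaultdict(float)
    for item in items:
        totals[item["layer"] or "untagged"] += item["usd"]
    return dict(totals)


totals = spend_by_layer(line_items)
grand_total = sum(totals.values())

# Print layers from most to least expensive, with their share of total spend.
for layer, usd in sorted(totals.items(), key=lambda kv: -kv[1]):
    print(f"{layer:>14}: ${usd:8.2f} ({usd / grand_total:.0%})")
```

In this made-up breakdown the stateful database and session store account for more spend than the stateless API layer, which is the kind of pattern a single cloud-bill total hides.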

When Stateful Applications Still Make Sense (And When They Don’t)

The question isn’t whether state is “good” or “bad,” but whether its cost and operational impact is intentional and observable.

Despite the benefits of stateless design, stateful applications aren’t a mistake, and they’re not going away.

The key here is knowing when state is necessary versus when it’s simply inherited from older patterns, workflows, or conveniences.

Stateful designs are often the right choice when:

- Strong consistency is required: Systems like databases, ledgers, and transaction-heavy platforms depend on preserved state to ensure correctness.

- Long-running workflows are unavoidable: Multi-step processes that span minutes or hours may rely on maintained context to progress reliably.

- Latency-sensitive state access is critical: Keeping state close to compute can reduce round trips in specific, performance-critical scenarios.

In those cases, statefulness is a feature.

Stateful or Stateless Design: Here’s How to Stay in Charge of Your Cloud Costs Regardless

Today, the real challenge isn’t choosing between stateful and stateless design. It’s understanding how those choices behave once they’re running in production.

Stateful components tend to anchor costs in place. Stateless components let costs scale dynamically across multiple cost drivers.

Both patterns are valid.

But without visibility into how each behaves at runtime, you can lose clarity on questions like:

- Which specific features, customers, or teams are driving your cloud spend

- Whether a cost increase is structural or purely usage-driven

- If a recent release introduced new state, or if traffic simply spiked

- Whether you’re scaling profitably versus overscaling infrastructure

The typical cloud bill doesn’t answer those questions. It shows what you spent, but not why, and certainly not in the context of how your people, products, and processes actually work. This is especially true in mixed stateful/stateless systems, where infrastructure costs don’t map cleanly to user behavior or feature usage.

But CloudZero does, and then some

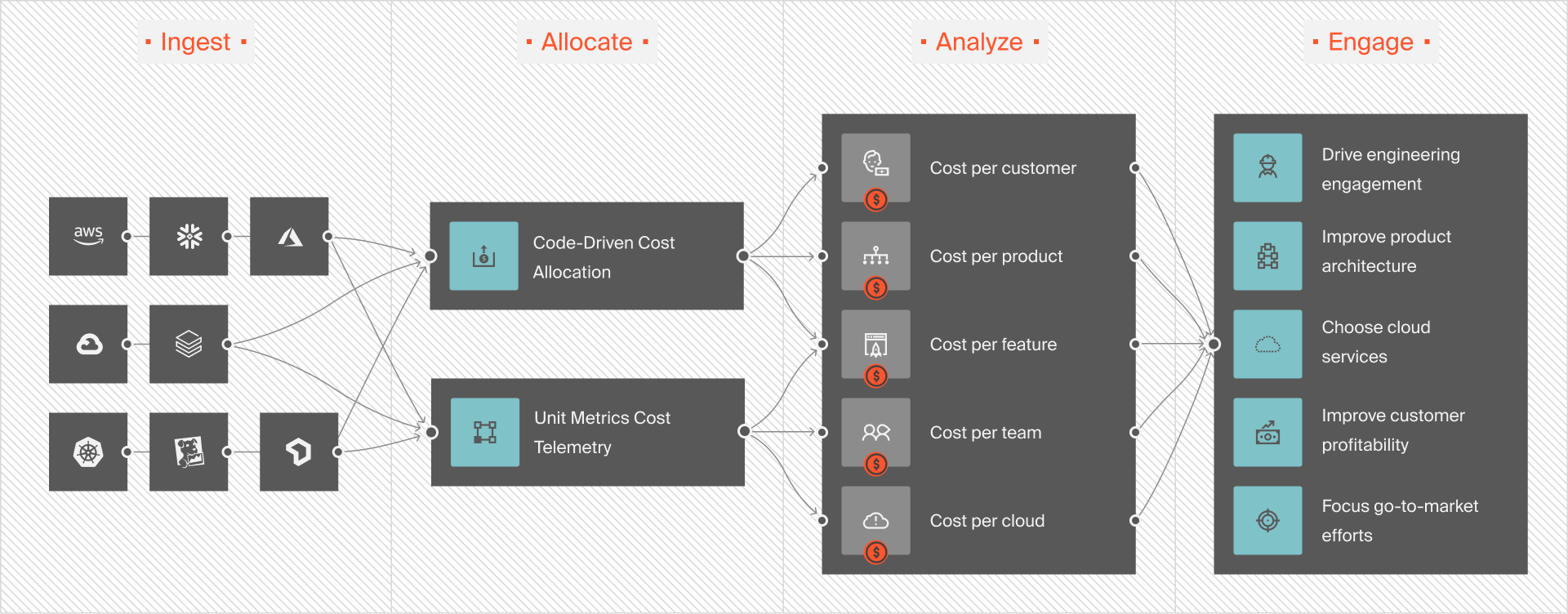

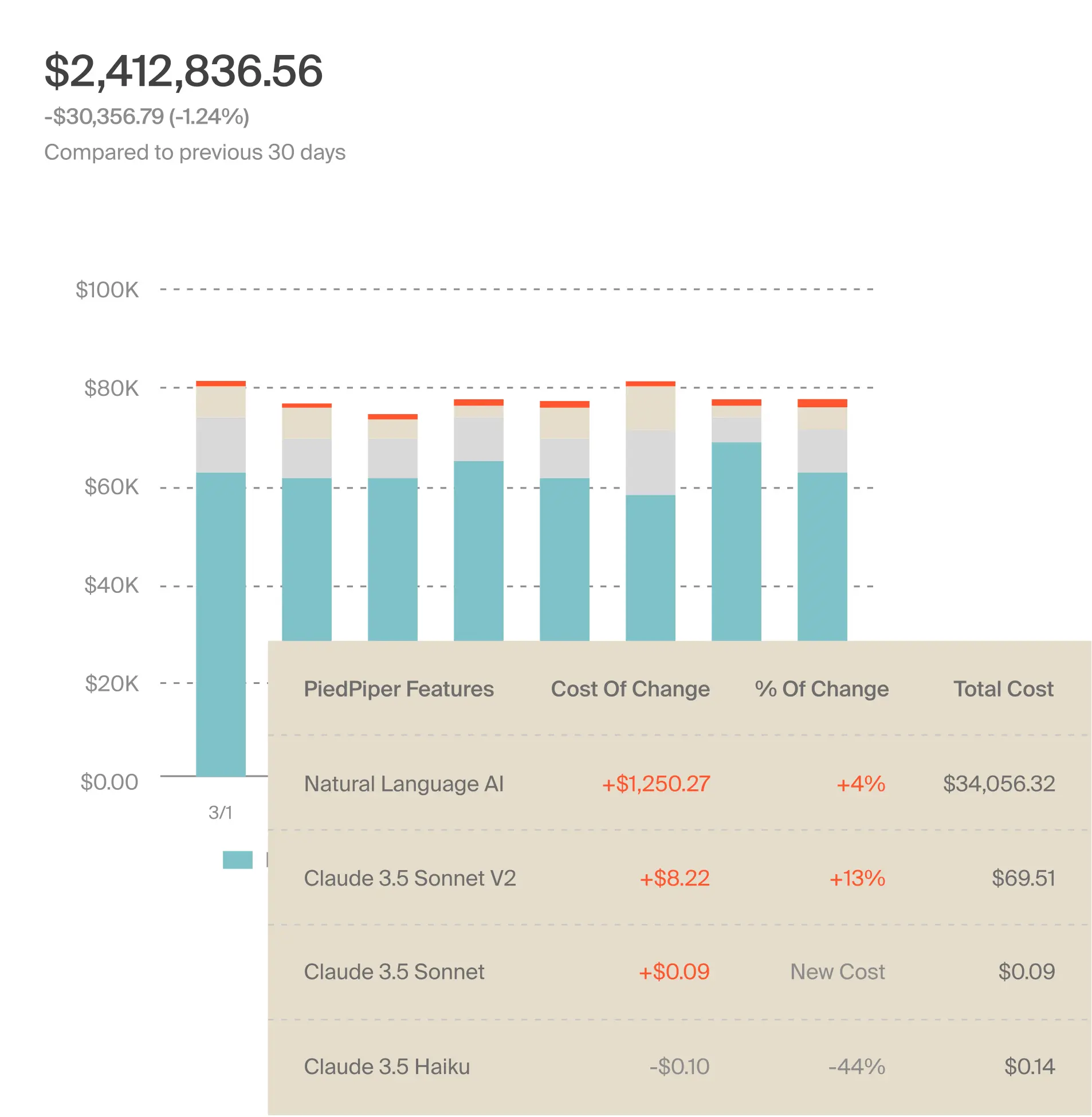

CloudZero connects architectural behavior — including stateful and stateless workload patterns — directly to cost outcomes in real time. With CloudZero, you can see how your stateful and stateless components behave as traffic changes, deployments roll out, and features scale — in real time and in business terms you’ll understand immediately. Like this:

Instead of debating whether an architectural decision was “worth it,” you can see its cost impact directly, tied to specific services, teams, environments, and customer accounts, like this for each AI service:

That clarity means your engineers can design and iterate with confidence. Architectural trade-offs become measurable decisions instead of abstract debates. Finance gets the data it needs to fund your roadmap without sacrificing profitability. And leadership can support both while maintaining a competitive advantage.

Key takeaways:

- Stateful vs. stateless design directly affects cloud cost elasticity

- Stateful systems tend to increase baseline and fixed cloud spend

- Stateless architectures align costs more closely with demand

- Most production systems are hybrid, which increases cost complexity

- Visibility is required to understand the real cost impact of state

That’s why the teams at Duolingo, Skyscanner, and Malwarebytes trust CloudZero to understand, control, and optimize cloud costs across their modern architectures.

Want the same visibility into how your stateful and stateless design choices affect your bottom line?  and see the impact for yourself.
