Before 2022, Alex Hanna worked on Google’s Ethical AI team. Today, she’s the director of research at the Distributed AI Research Institute, a transition sparked by Google’s handling of a paper exposing AI’s growing environmental footprint.

So, how bad is it, really? That depends on who you ask.

Take Jesse Dodge, a senior research analyst at the Allen Institute for AI. Jesse told NPR that a single ChatGPT query can use as much electricity as keeping a light bulb on for 20 minutes.

Other claims go further: that training GPT-4 consumed as much power as 30,000+ U.S. homes, or that data centers are drying up local water supplies.

But is AI truly that destructive to the environment, or are we missing the bigger picture?

In this guide, we’ll unpack the numbers behind AI’s environmental impact, separate hype from reality, and show how you can innovate with AI responsibly (using visibility platforms like CloudZero to connect AI spend to efficiency, emissions, and sustainability goals).

Related: Why Sustainable Cloud Starts With The Bottom Line – Not Before

Is AI Bad For The Environment?

It depends on where you draw the line and how you define “bad.”

Global data centers chew through about 1.5% of all electricity and produce roughly 1% of total CO₂ emissions. That’s about the same as the entire aviation sector, according to the International Energy Agency.

Tech hasn’t exactly been carbon-neutral. What generative AI did was crank up the intensity, not because AI is uniquely wasteful, but because its use is scaling so fast.

Training models like GPT-4 or Claude means spinning up thousands of GPUs running flat out for weeks, all while cooling systems gulp down water to keep everything stable.

Then inference kicks in. Once trained, those same models must run continuously to serve millions of users, and every request adds a little more energy to the meter.

The better the models get, the more they get used, too.

Now, picture this:

- By 2028, AI’s electricity consumption may exceed what 22% of US households currently use annually, according to MIT Technology Review.

- Some researchers estimate training GPT-4 required the same energy as powering 33,000 homes.

- Meanwhile, Google reports that a median Gemini text prompt uses about 0.24 Wh of electricity and 0.26 ml of water. Yet the big G’s greenhouse gas emissions are up 48% since 2019, and the company has dropped its claim of “operational carbon neutrality.”

- Likewise, Microsoft’s emissions have risen 29% since 2020.

Not ideal, but not apocalyptic either.

AI still accounts for a small share of global emissions compared to aviation, steel, or crypto mining. What’s new is visibility. We can now trace, measure, and optimize AI’s energy appetite, which is where the real opportunity is.

The AI Environmental Impact Reality: Let’s Measure AI’s Sustainability Impact

This is no small feat, but let’s give it a solid shot.

A single GPT-scale model can require thousands of GPUs running for weeks or months. According to a 2019 study by researchers at the University of Massachusetts Amherst, training a single large transformer model, including the neural architecture search used to tune it, can emit more than 626,000 pounds of CO2 equivalent.

That’s roughly equal to the lifetime emissions of five average cars.
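To put that figure in more familiar units, here’s the arithmetic as a quick Python sketch. The 126,000 lb per-car figure is an assumption on our part: it’s the lifetime emissions of an average American car, fuel included, as commonly cited alongside the UMass Amherst estimate.

```python
# Back-of-the-envelope conversion of the UMass Amherst training estimate.
LB_TO_KG = 0.45359237  # exact definition of the avoirdupois pound

training_lb_co2e = 626_000  # figure quoted above
# Assumption: lifetime emissions of an average American car, fuel included
car_lifetime_lb_co2e = 126_000

training_tonnes = training_lb_co2e * LB_TO_KG / 1000  # kg -> metric tons
car_equivalents = training_lb_co2e / car_lifetime_lb_co2e

print(f"{training_tonnes:.0f} metric tons CO2e")  # prints "284 metric tons CO2e"
print(f"{car_equivalents:.1f} car lifetimes")     # prints "5.0 car lifetimes"
```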

Once a model is trained, generating responses (inference) takes far less energy per interaction. However, inference happens billions of times a day. That’s why some analysts now estimate that inference could soon overtake training as the primary driver of AI’s carbon footprint.

Also see: AI Costs Climb 36% But ROI Still Unclear, State of AI Costs Report Finds

Remember the Google study we just mentioned? It also found that a median Gemini text prompt emits just 0.03 grams of CO2 equivalent. That’s tiny in isolation, but massive at scale.
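Here’s what “massive at scale” looks like in numbers. This sketch multiplies Google’s per-prompt energy and carbon figures by a daily prompt volume; the one-billion-prompts-a-day volume is purely hypothetical, not a reported number.

```python
# Scale per-prompt footprint figures up to a daily prompt volume.
ENERGY_WH_PER_PROMPT = 0.24  # Google's median Gemini text prompt
CO2E_G_PER_PROMPT = 0.03     # Google's median Gemini text prompt


def daily_footprint(prompts_per_day: int) -> tuple[float, float]:
    """Return (energy in MWh, emissions in metric tons CO2e) per day."""
    energy_mwh = prompts_per_day * ENERGY_WH_PER_PROMPT / 1_000_000  # Wh -> MWh
    co2e_tonnes = prompts_per_day * CO2E_G_PER_PROMPT / 1_000_000    # g -> t
    return energy_mwh, co2e_tonnes


# Hypothetical volume: one billion prompts a day
energy, co2e = daily_footprint(1_000_000_000)
print(f"{energy:.0f} MWh/day, {co2e:.0f} t CO2e/day")  # 240 MWh/day, 30 t CO2e/day
```

At that hypothetical volume, prompts that each look negligible add up to roughly 11,000 metric tons of CO2e a year.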

Data centers don’t just devour electricity — they drink too

AI clusters often rely on evaporative cooling systems to maintain temperature, drawing millions of liters of fresh water.

The University of California, Riverside, estimated that training GPT-3 used around 700,000 liters of water. That’s enough to produce hundreds of cars or thousands of smartphones. And in water-stressed regions like Arizona, Spain, or Singapore, that’s a significant concern.
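A common way to estimate on-site cooling water is water usage effectiveness (WUE), The Green Grid’s metric for liters of water consumed per kilowatt-hour of IT energy. The sketch below simply multiplies a workload’s IT energy by a site’s WUE. The job size and both WUE values are hypothetical, real figures vary by site, season, and cooling design, and it ignores the off-site water used to generate the electricity, which some studies count separately.

```python
# Estimate on-site cooling water from IT energy and a site's WUE (L/kWh).
def cooling_water_liters(it_energy_kwh: float, wue_l_per_kwh: float) -> float:
    """On-site water consumed = IT energy x water usage effectiveness."""
    if it_energy_kwh < 0 or wue_l_per_kwh < 0:
        raise ValueError("energy and WUE must be non-negative")
    return it_energy_kwh * wue_l_per_kwh


# Hypothetical 500 MWh training job compared across two hypothetical sites
job_kwh = 500_000
for site, wue in {"evaporative-cooled site": 1.8, "air-cooled site": 0.2}.items():
    liters = cooling_water_liters(job_kwh, wue)
    print(f"{site}: {liters:,.0f} liters")
```

The same job can consume very different amounts of water depending on where it runs, which is why region choice matters for water as well as carbon.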

Hardware and materials are the carbon you don’t see

Then there’s the embodied carbon in AI hardware itself. GPUs, TPUs, and networking gear require rare earth minerals and energy-intensive manufacturing.

A single high-end GPU can embody hundreds of kilograms of CO2 before it ever processes a token.

Multiply that across tens of thousands of accelerators in hyperscale data centers, and the embodied footprint rivals and sometimes exceeds the operational footprint.
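Whether embodied carbon rivals the operational footprint depends heavily on how clean the grid is. This sketch compares the two over one accelerator’s service life. Every input (embodied kg CO2e, average power draw, lifetime, grid intensities) is a hypothetical placeholder, not a measured value for any real GPU, and it ignores cooling overhead.

```python
# Compare embodied vs. operational emissions over one accelerator's service life.
HOURS_PER_YEAR = 8_760


def lifetime_emissions_kg(embodied_kg: float, avg_power_kw: float,
                          lifetime_years: float,
                          grid_g_per_kwh: float) -> tuple[float, float]:
    """Return (embodied, operational) emissions in kg CO2e.

    Operational emissions cover the chip's own draw only (no cooling/PUE).
    """
    energy_kwh = avg_power_kw * HOURS_PER_YEAR * lifetime_years
    operational_kg = energy_kwh * grid_g_per_kwh / 1000  # g -> kg
    return embodied_kg, operational_kg


# Hypothetical accelerator: 300 kg embodied, 0.5 kW average draw, 4-year life
for grid, intensity in {"coal-heavy grid": 800, "low-carbon grid": 10}.items():
    emb, op = lifetime_emissions_kg(300, 0.5, 4, intensity)
    share = emb / (emb + op)
    print(f"{grid}: embodied share {share:.0%}")
```

With these placeholder numbers, embodied carbon is about 2% of the lifetime total on the coal-heavy grid but about 63% on the low-carbon one. The cleaner your power, the more the hardware itself dominates.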

But Measuring AI’s Sustainability Impact Is Still Super Hard

There’s no agreed metric, like “CO₂ per 1,000 tokens” or “liters of water per training run.”

As a result, some providers count only operational energy, while others leave hardware manufacturing or inference emissions out altogether.

That’s why claims range from “AI uses as much energy as a small country” to “AI accounts for only 0.5% of global power.” Both can be true, depending on what’s counted.

Still, transparency is improving. The Green Software Foundation, the Partnership on AI, and the EU AI Act are all pushing toward carbon reporting standards. Cloud providers like Google, Microsoft, and AWS now publish sustainability updates, though disclosure remains voluntary and far from universal.
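For software in general, the Green Software Foundation’s Software Carbon Intensity (SCI) specification already defines a rate-based formula: SCI = ((E × I) + M) per R, where E is energy consumed, I is the grid’s carbon intensity, M is the share of hardware embodied emissions attributed to the workload, and R is a functional unit you choose. Below is a minimal sketch that uses 1,000 tokens as R. That choice is our assumption for AI workloads, not something the spec mandates, and all inputs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    energy_kwh: float        # E: energy used during the measurement window
    grid_g_per_kwh: float    # I: grid carbon intensity (g CO2e/kWh)
    embodied_total_g: float  # total embodied emissions of the hardware
    hours_reserved: float    # hours the workload held the hardware
    lifespan_hours: float    # expected hardware lifespan in hours
    resource_share: float    # fraction of the hardware reserved (0..1)
    tokens: int              # R: functional units (here, tokens)


def sci_per_1k_tokens(w: Workload) -> float:
    """Grams CO2e per 1,000 tokens, following SCI = ((E * I) + M) per R."""
    operational = w.energy_kwh * w.grid_g_per_kwh
    # M: embodied emissions amortized by time share and resource share
    embodied = (w.embodied_total_g
                * (w.hours_reserved / w.lifespan_hours)
                * w.resource_share)
    return (operational + embodied) / (w.tokens / 1000)


# Hypothetical: one hour on a full 8-GPU server generating 2 million tokens
w = Workload(energy_kwh=6.0, grid_g_per_kwh=400, embodied_total_g=2_500_000,
             hours_reserved=1, lifespan_hours=4 * 8_760, resource_share=1.0,
             tokens=2_000_000)
print(f"{sci_per_1k_tokens(w):.2f} g CO2e per 1,000 tokens")
```

Because SCI is a rate rather than a total, it rewards efficiency improvements even as overall usage grows.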

Here’s How You Win: With A FinOps-Style Approach To Sustainability

This challenge mirrors cloud cost management a decade ago: too little visibility.

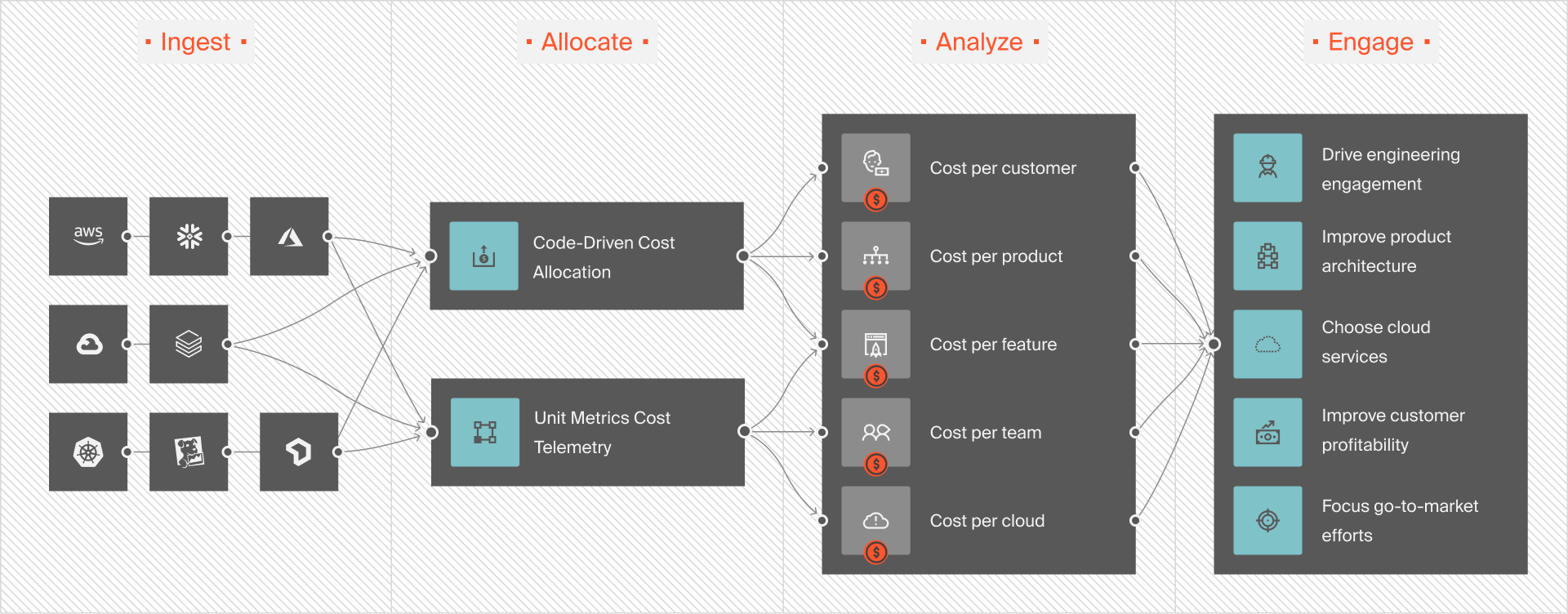

Without clear attribution, optimization is tricky. So, forward-looking organizations are now treating sustainability as a FinOps discipline, measuring energy, water, and carbon the same way they track cloud spend, usage, and performance.


Imagine seeing grams of CO2 per query, energy cost per GPU hour, or water use per workload region right next to cost per feature or per customer.
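Under the hood, that kind of view is a roll-up of usage records into unit metrics. The sketch below is hypothetical and is not CloudZero’s API or data model: each record carries a feature name, request count, spend, and an estimated CO2e figure, and the code computes cost and grams of CO2e per 1,000 requests for each feature.

```python
from collections import defaultdict

# Hypothetical usage records: (feature, requests, cost_usd, co2e_grams)
records = [
    ("search", 120_000, 84.00, 3_600.0),
    ("summarize", 40_000, 96.00, 4_800.0),
    ("search", 80_000, 56.00, 2_400.0),
]


def unit_metrics(rows):
    """Aggregate per feature, then return cost and CO2e per 1,000 requests."""
    totals = defaultdict(lambda: [0, 0.0, 0.0])  # requests, cost, grams
    for feature, requests, cost, grams in rows:
        t = totals[feature]
        t[0] += requests
        t[1] += cost
        t[2] += grams
    return {
        feature: {
            "usd_per_1k": cost / requests * 1000,
            "g_co2e_per_1k": grams / requests * 1000,
        }
        for feature, (requests, cost, grams) in totals.items()
    }


for feature, m in unit_metrics(records).items():
    print(f"{feature}: ${m['usd_per_1k']:.2f} and "
          f"{m['g_co2e_per_1k']:.1f} g CO2e per 1k requests")
```

In this made-up data, the summarize feature costs more than three times as much per request as search and emits four times as much, which is exactly the kind of comparison a combined view surfaces.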

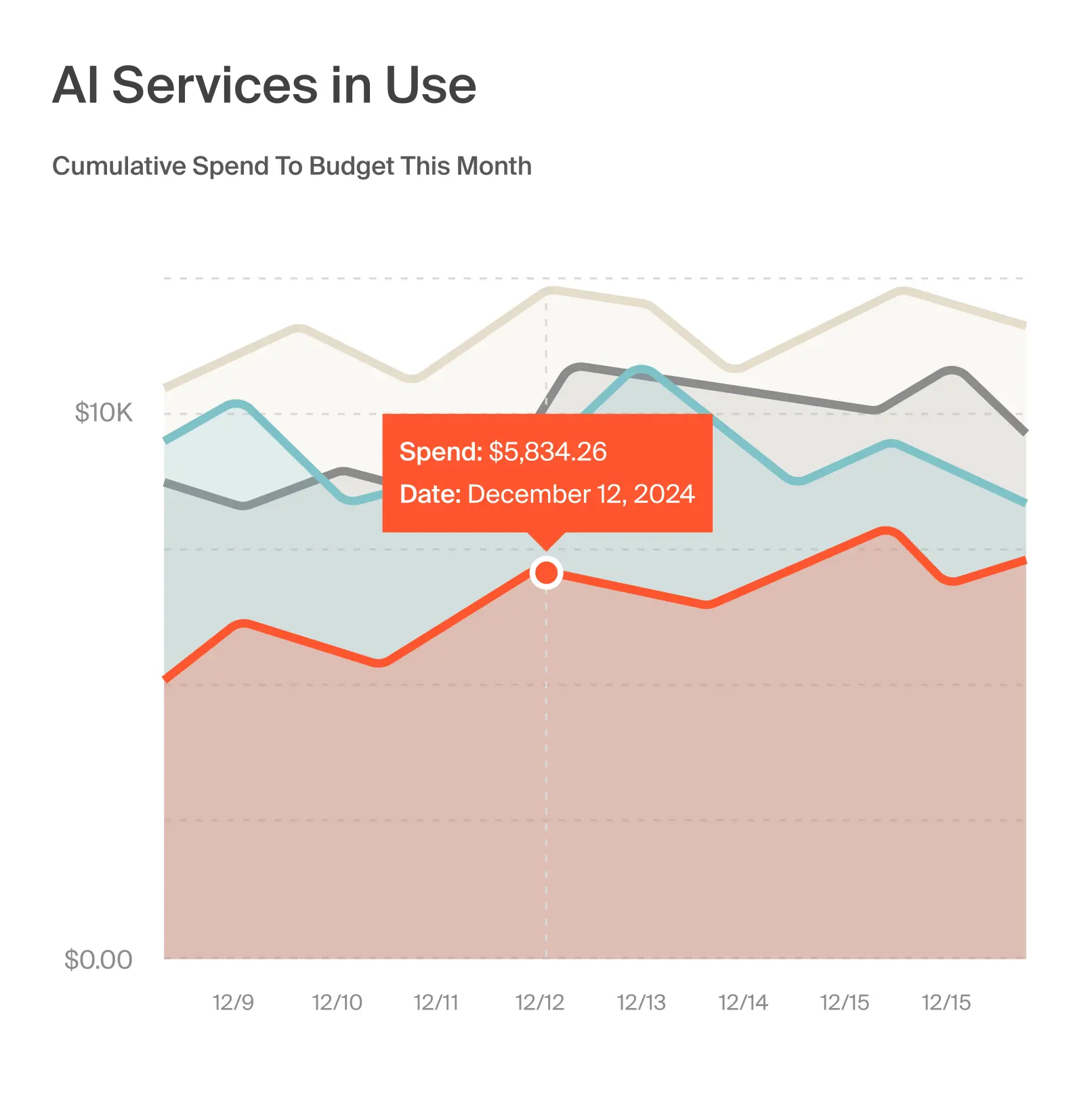

Platforms like CloudZero already help SaaS teams map cloud spend to business outcomes.

Extending that level of visibility to environmental metrics lets you measure (and manage) your AI footprint in real time.

The gist is that the same industry driving AI’s energy surge also holds the key to fixing it. AI itself can optimize cooling, manage grid loads, and predict renewable availability.

We’re at a point where AI’s cost and carbon impact can be tracked in the same dashboard. For engineering and FinOps leaders, this is the moment to make sustainability a first-class metric, right alongside cost and performance.

Here’s how.

Great! Now, How Do You Balance Innovating With AI And Sustainability?

The goal isn’t to stop innovating, but to innovate smarter: to scale AI without letting costs, carbon, or compliance spiral out of control. How?

Make sustainability a KPI

Leading players are now treating sustainability as a core performance metric rather than another woke buzzword.

Microsoft links leadership bonuses to carbon goals. Google reviews AI models for energy efficiency. AWS tracks data center progress as part of its journey to net-zero by 2040.

As energy prices and disclosure mandates rise, sustainability performance will increasingly affect margins, compliance, and reputation. So these aren’t PR moves; they’re resilience strategies.

And here’s the good news. Most of the levers for AI sustainability already exist within the cloud and FinOps workflows you use every day. So…

Engineer sustainability into your AI workloads

Think of sustainability as another optimization axis, alongside cost, speed, and performance. The same habits that reduce your AWS or GCP bills can shrink your carbon footprint too.

Here are a few to start with:

- Right-size your AI models: Use smaller, fine-tuned models for narrow tasks instead of defaulting to frontier models for everything. You’ll optimize both dollars and emissions.

- Batch and cache your workloads: Group inference requests or cache frequent results to minimize redundant compute.

- Take advantage of carbon-aware scheduling: Run non-urgent jobs when grid carbon intensity is lowest.

- Use renewable regions: Deploy workloads in cloud regions powered by cleaner energy sources like wind or solar.

- Run on efficient hardware: Choose newer-generation GPUs or TPUs that deliver more performance per watt.

- Plan hardware lifecycles: Extend the useful life of existing hardware before refreshing clusters, since every new accelerator arrives with its own embodied carbon.
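Carbon-aware scheduling and region selection come down to the same decision: given a forecast of grid carbon intensity, pick the slot with the lowest expected intensity that still meets the job’s deadline. Here’s a minimal sketch, assuming you already have an hourly forecast per region from some grid carbon-intensity data source. The region names and gCO2e/kWh values are hypothetical.

```python
# Pick the (region, start hour) with the cleanest grid for a deferrable job.
def best_slot(forecast: dict[str, list[float]], duration_h: int,
              deadline_h: int) -> tuple[str, int, float]:
    """Return (region, start_hour, avg_g_per_kwh) for the cleanest window.

    forecast maps region -> hourly grid intensity in g CO2e/kWh (index 0 = now).
    The job must finish by deadline_h, so start + duration_h <= deadline_h.
    """
    best = None
    for region, hourly in forecast.items():
        last_start = min(deadline_h, len(hourly)) - duration_h
        for start in range(last_start + 1):
            window = hourly[start:start + duration_h]
            avg = sum(window) / duration_h
            if best is None or avg < best[2]:
                best = (region, start, avg)
    if best is None:
        raise ValueError("no window fits before the deadline")
    return best


# Hypothetical six-hour forecasts for two regions
forecast = {
    "region-a": [420, 390, 310, 250, 240, 300],
    "region-b": [180, 170, 200, 260, 280, 290],
}
region, start, avg = best_slot(forecast, duration_h=2, deadline_h=6)
print(f"Run in {region} starting at hour {start} (~{avg:.0f} g CO2e/kWh)")
```

A real scheduler would also weigh latency, data residency, and price; this sketch optimizes for grid intensity alone.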

If you follow the CloudZero blog, then you know this looks a lot like FinOps for AI in action. It’s about optimizing for performance and cost in near real-time, only now with environmental efficiency in the mix.

Related read: FinOps For AI: How Crawl, Walk, Run Works For Managing AI Costs

Prepare for scrutiny, and be able to back up your claims

Soon, transparency around your AI footprint may matter as much as uptime SLAs or security certifications. And it’s not just regulators watching anymore. Enterprise buyers, investors, and even end users are asking tough questions about the environmental cost of AI products.

That raises a key question: can you see which specific products, customers, or features drive your AI spend and, by extension, your environmental impact?

Well, visibility is where it starts.

But few organizations can trace their AI costs or carbon emissions to specific workloads or teams. And as you know, you can’t reduce what you can’t measure.

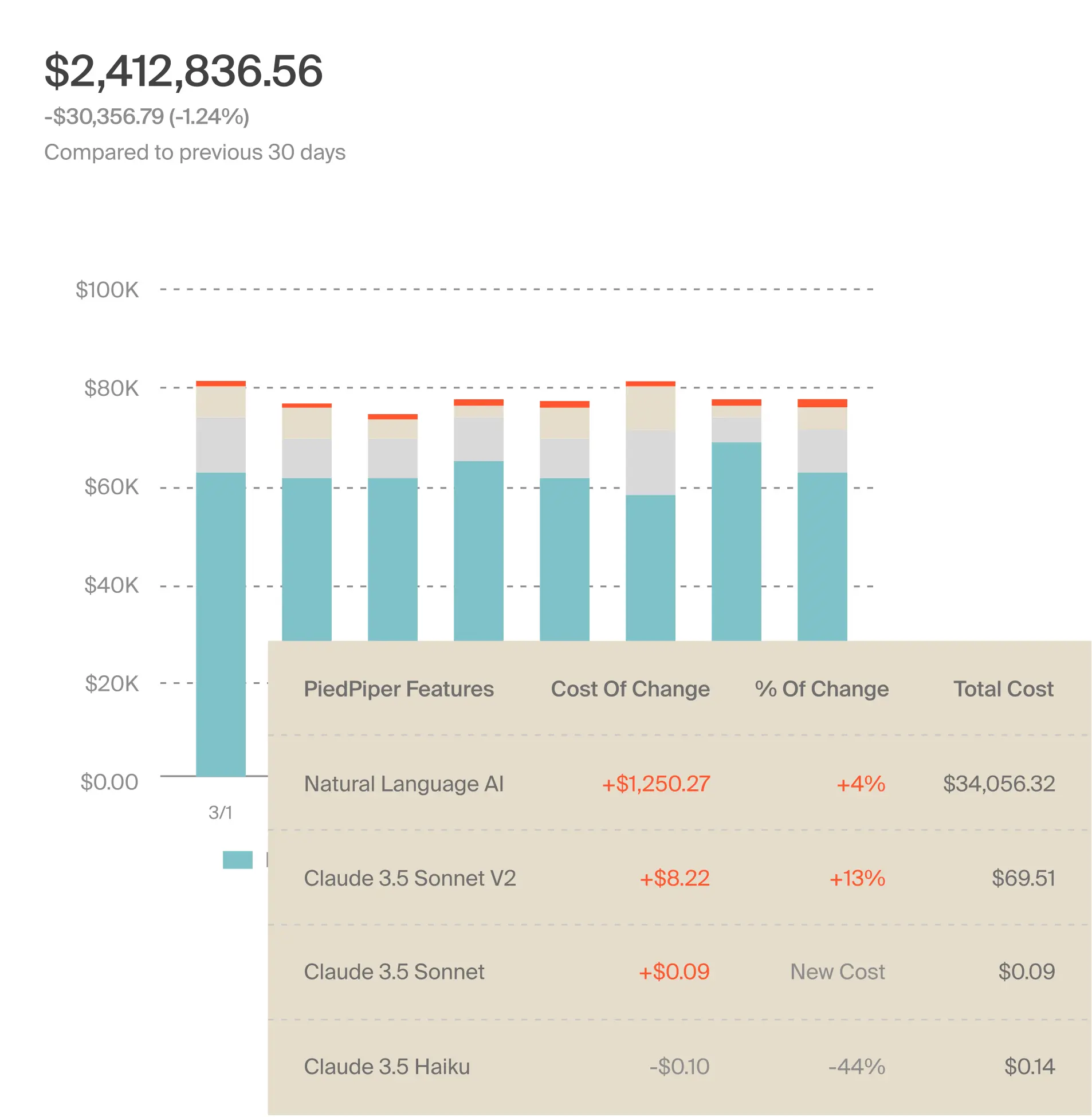

But you can now, using CloudZero. Picture this:

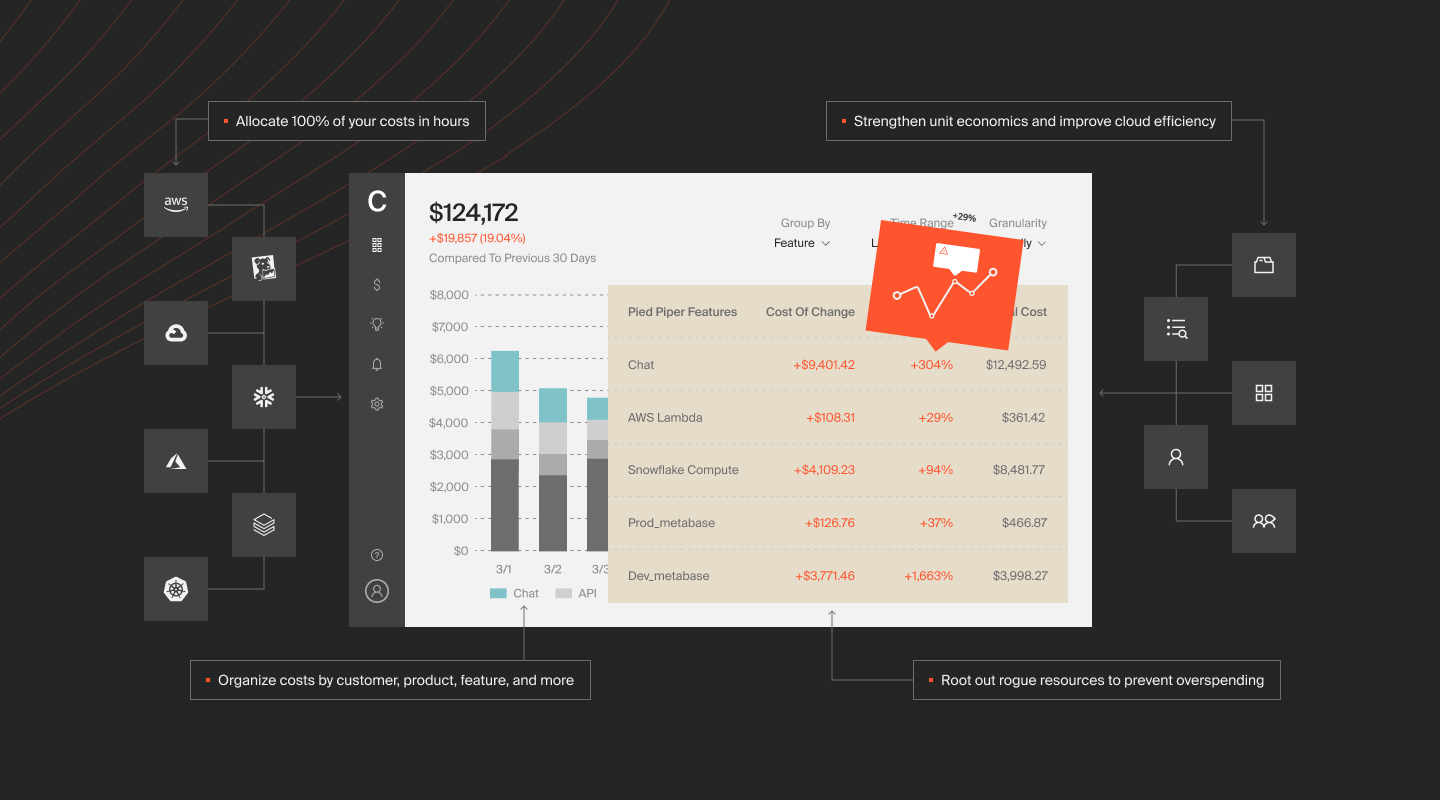

With CloudZero, you can allocate 100% of your AI spend with precision — in hours, not weeks. You can analyze your costs by AI service, model type, SDLC stage, or environment.

You can also view cost per user, per feature, or per project to pinpoint exactly which products, people, or processes are driving your energy consumption.

By mapping every dollar of AI and cloud spend to its underlying driver, CloudZero helps you uncover the workloads behind your biggest GPU costs and, by extension, your largest carbon footprint.

You’ll be audit-ready at any time, equipped with the data story to back every sustainability claim you make, whether that’s to your board, investors, or customers.

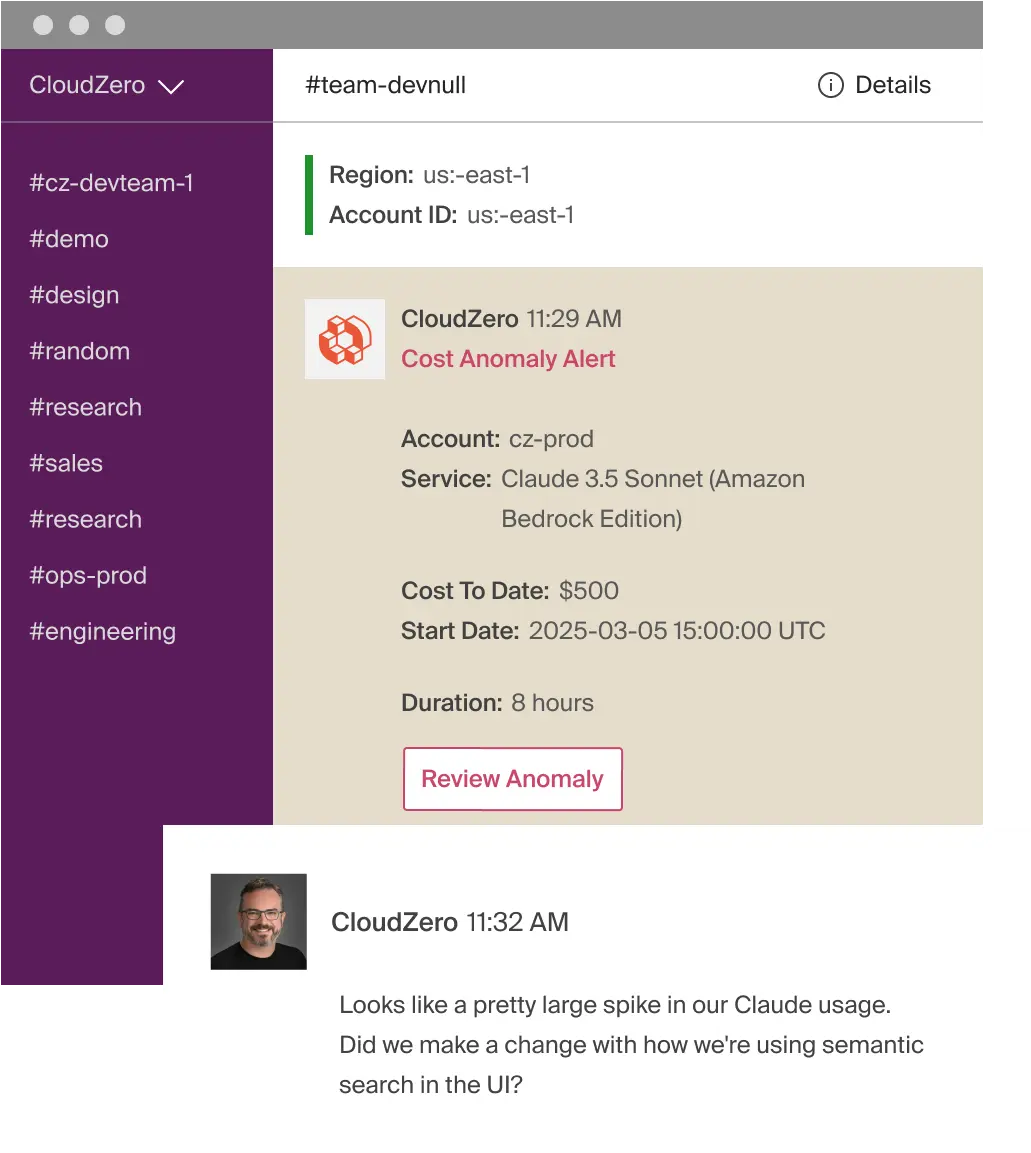

With CloudZero’s real-time anomaly detection, you’ll even spot unexpected spikes in resource consumption as they happen, not after the invoice lands a month later.

That means you can take action immediately, correct the anomaly, and prevent a costly surprise before it hits your bottom line or jeopardizes your compliance status.
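As a generic illustration of how spike detection can work (not CloudZero’s implementation), here’s a simple rolling z-score check: flag any hour whose spend, or kWh, sits more than a chosen number of standard deviations above the trailing window’s mean. The window size, threshold, and sample series are all hypothetical.

```python
from statistics import mean, stdev


def flag_spikes(series: list[float], window: int = 24,
                threshold: float = 3.0) -> list[int]:
    """Return indices whose value exceeds the trailing window's mean by more
    than `threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat history, where the z-score is undefined
        if sigma > 0 and (series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical hourly GPU spend in USD: steady, then a runaway job at hour 30
hourly_spend = [100.0 + (i % 3) for i in range(30)] + [480.0, 101.0]
print(flag_spikes(hourly_spend))  # [30]
```

Because a spike stays in the trailing window for the next `window` hours, it inflates the baseline; a more robust variant might use the median and median absolute deviation instead.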


From there, the playbook stays the same: reduce idle resources, shift workloads to cleaner regions, and measure both cost and carbon per customer or per feature. But it starts with reliable visibility.

Connect Your AI Costs And Carbon Emissions — And Protect Your Reputation And Margins

AI’s environmental impact is real, but it’s not insurmountable.

With the right visibility, you’ll finally see where your compute, energy, and cloud spend truly intersect, and how each influences the others.

That clarity lets you align AI costs, performance, and sustainability into a single feedback loop, pinpoint inefficiencies before they grow, and guide engineering decisions that are both profitable and responsible.

It’s how you build faster without burning through resources, keep innovation accountable, and scale in a way your CFO and sustainability officer can both stand behind.

With CloudZero, you can see where your AI and cloud dollars go, down to the hour, and how those costs translate into your environmental footprint. You can detect anomalies in real time, identify inefficient workloads, and redirect precious resources before they inflate both your bill and carbon impact.

Responsible AI doesn’t mean slowing down. Ready to balance innovating with AI and sustainability? Start driving sustainable growth for both your business and our shared planet, all while continuing to ship at the pace your market demands.
