Even before OpenAI surged into the spotlight with ChatGPT, not everyone inside the company agreed on the path forward. In 2021, a group of senior researchers broke away. They had concerns about safety, transparency, and the direction of AI development.

They went on to found Anthropic. And their answer to ChatGPT was Claude.

Anthropic’s stated mission leans on safety and transparency. Yet Claude’s pricing can feel as mysterious as the model weights behind the scenes.

In this guide, we’ll unpack Claude AI pricing in 2025. We’ll cover how it works, what you’ll really pay across models and plans, and how it stacks up against OpenAI’s GPT, Google’s Gemini, and Meta’s Llama.

We’ll also share how innovative SaaS leaders tie Claude usage directly to business value, down to the customer or feature level, to maximize their AI ROI.

What Is Anthropic’s Claude AI?

Claude is a family of large language models (LLMs) that excels at natural language processing (NLP). It is multimodal, capable of working with both text and images.

For SaaS teams, that can mean powering customer support bots that don’t go off-script, analyzing feedback tickets at scale, and accelerating developer workflows with clean code suggestions.

Teams testing Claude soon find that every input and output adds up. The key to staying in control is knowing exactly how Claude’s pricing works — and what you’re really paying for, starting with the models.

A Breakdown Of Claude AI Models And Features

Anthropic’s current lineup includes Opus 4/4.1 at the top end, Sonnet 4 as the balanced workhorse, and Haiku, currently anchored at 3.5, for ultra-low latency and price.

Let’s dive deeper.

Claude Opus 4 / 4.1

Opus is Anthropic’s most capable model, built for deep reasoning, complex, long-horizon coding, and agentic workflows.

If you’re asking an assistant to plan, write, refactor, and test real software over extended sessions, or digest dense legal/financial material with nuance, Opus is the model to reach for.

Opus 4.1 (released Aug 5, 2025) is a drop-in upgrade to Opus 4 with stronger agentic performance and real-world coding gains. Availability spans the Anthropic API, AWS Bedrock, and (as it rolls out) Vertex AI. Pricing is the same as Opus 4.

Related: Amazon Bedrock Pricing: How Much It Costs (+ Cost Optimization Tips)

Claude Sonnet 4

Sonnet 4 is built for SaaS workflows such as customer assistants, knowledge search, and end-to-end coding tasks. Reach for it when you need strong reasoning without Opus-level spending.

As of August 2025, Sonnet 4 supports up to a 1M-token context window in public beta. This means you can ingest entire codebases or large document sets in a single shot.

Claude 3.5 Haiku

Haiku is tuned for near-real-time responses and high-volume, lower-complexity tasks. Examples here include classifying feedback, summarizing tickets, lightweight retrieval-augmented answers, and in-product micro-interactions.

Expect very aggressive token pricing and wide availability across Anthropic’s API and major clouds. If/when a Haiku 4 arrives, it’ll likely take up the “fast/cheap” mantle, too.

To get an even better idea of their capabilities, here’s a quick look at the competition.

How Claude Compares: Claude Vs. ChatGPT Vs. Gemini Vs. Llama

Claude now competes against some of the most advanced AI systems, including OpenAI’s GPT-5, Google’s Gemini, and Meta’s Llama.

Consider this:

OpenAI’s GPT-5 vs. Claude 4.1

GPT-5’s architecture includes a real-time routing system. This enables it to switch between quick responses and deeper “thinking” modes based on the conversation’s complexity, tool needs, or explicit user cues like “think hard.”

It’s a unified model built for expert-level reasoning across tasks such as coding, math, health, and multimodal interactions.

With GPT-5 Pro, you get even better results on economics, science, and coding benchmarks, while reducing major errors.

GPT-4o is also excellent at voice/image, real-time interaction, and web browsing capability.

Claude, meanwhile, doubles down on context length and reliability. Where GPT-4o tops out at a 128K-token context window, Sonnet 4 can stretch up to a million tokens in beta.

On pricing, GPT-4o can come in cheaper than Opus. However, once you factor in Claude’s prompt caching and batch discounts, the gap narrows, especially for repeat-heavy SaaS workloads (where GPT-5 can be overkill).

Gemini vs. Claude

Google’s Gemini models are known for rapid iteration and deep integration with Google Cloud. They excel at multimodal capabilities and enterprise tooling, but can feel experimental in deployment pace. Claude, by comparison, banks on predictability and enterprise-grade support.

Sonnet 4’s long context window and caching features make spending more predictable. It also delivers strong performance on tasks like knowledge retrieval and automated documentation.

Meta’s Llama vs. Claude

Llama models stand out for being open-weight, tunable, and free to download. Yet that freedom also means you’re responsible for hosting, scaling, and securing the model.

Claude offers a managed API model with built-in safety, caching, and enterprise integrations (such as through AWS Bedrock). This can tilt the total cost of ownership in its favor if you are prioritizing straightforward deployment and reliable performance.

So, does Claude’s pricing make sense for what you get? Let’s find out.

How Much Does Claude AI Cost?

Claude’s pricing is usage-based. You pay per million tokens, billed separately for input (what you send) and output (what the model returns).

A few key factors also impact what you’ll actually pay:

1. Claude Model choice

Opus comes with the highest price tag. Sonnet balances speed, cost, and reasoning power. Haiku keeps costs minimal for lightweight, high-volume tasks. You do not want to pay expert rates for tasks that only need quick, functional answers.

2. Token volume

Let’s say you feed the model a 200-page spec or knowledge base. That could easily be 100,000 or more input tokens before you even get to the response. Output tokens add up, too, especially if you generate long-form content. So, understanding your average input/output ratio is critical for forecasting cost.
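To make the input/output ratio concrete, here is a minimal Python sketch of a monthly forecast. It uses the Sonnet 4 rates quoted in this guide ($3 input, $15 output per million tokens). The function name and the workload figures are hypothetical and only there for illustration.

```python
# Rates in USD per million tokens (MTok); Sonnet 4 figures quoted in this guide.
INPUT_RATE = 3.00
OUTPUT_RATE = 15.00


def monthly_cost(requests_per_month, avg_input_tokens, avg_output_tokens,
                 input_rate=INPUT_RATE, output_rate=OUTPUT_RATE):
    """Return (input_cost, output_cost, total) in USD for one month."""
    input_mtok = requests_per_month * avg_input_tokens / 1_000_000
    output_mtok = requests_per_month * avg_output_tokens / 1_000_000
    input_cost = input_mtok * input_rate
    output_cost = output_mtok * output_rate
    return input_cost, output_cost, input_cost + output_cost


# Hypothetical workload: 10,000 requests, 2,000 tokens in and 500 tokens out each.
inp, out, total = monthly_cost(10_000, 2_000, 500)
print(f"input ${inp:.2f} + output ${out:.2f} = ${total:.2f}")
```

In this made-up workload, output is a quarter of the token volume yet costs more than input ($75 versus $60), which is why the ratio matters as much as the raw total.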

3. Context window size

Sonnet 4’s 1M-token context window comes at a higher per-token rate once you cross the 200K threshold. So, use it wisely. That’ll be the difference between strategic insight and runaway costs.
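A rough sketch of how that threshold plays out per request is below. The standard rates are the Sonnet 4 figures from this guide. The long-context rates ($6 input, $22.50 output) and the rule that the whole request moves to the higher rate once input crosses 200K tokens are assumptions, so check them against the current pricing page before relying on them.

```python
LONG_CONTEXT_THRESHOLD = 200_000  # input tokens, per the threshold described above

# USD per MTok. STANDARD comes from this guide; LONG_CONTEXT is an assumption.
STANDARD = {"input": 3.00, "output": 15.00}
LONG_CONTEXT = {"input": 6.00, "output": 22.50}


def request_cost(input_tokens, output_tokens):
    """Cost of one request in USD.

    Assumes the entire request is billed at the long-context rate
    once its input exceeds the threshold.
    """
    over = input_tokens > LONG_CONTEXT_THRESHOLD
    rates = LONG_CONTEXT if over else STANDARD
    billed = input_tokens * rates["input"] + output_tokens * rates["output"]
    return billed / 1_000_000


# Hypothetical requests: a trimmed prompt vs. a whole-codebase prompt.
trimmed = request_cost(150_000, 2_000)
whole_codebase = request_cost(600_000, 2_000)
print(f"trimmed: ${trimmed:.3f}  whole codebase: ${whole_codebase:.3f}")
```

Under these assumptions, quadrupling the prompt size raises the cost of the request by more than seven times, because both the volume and the rate go up.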

4. Features like caching and batching

Prompt caching lets you reuse static system or context prompts at a fraction of the cost (up to 90% off on repeated inputs). Batch processing halves input/output costs for asynchronous tasks like ticket summarization or daily report generation.
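Here is a small sketch of the batch discount on its own. It applies the 50% reduction described above to a hypothetical nightly summarization job priced at the Sonnet 4 rates from this guide. The job sizes and the function name are illustrative assumptions.

```python
BATCH_DISCOUNT = 0.50  # batch processing halves input/output costs, per the text above


def job_cost(input_mtok, output_mtok, input_rate, output_rate, batch=False):
    """USD cost for a job measured in millions of tokens (MTok)."""
    cost = input_mtok * input_rate + output_mtok * output_rate
    return cost * (1 - BATCH_DISCOUNT) if batch else cost


# Hypothetical monthly job on Sonnet 4 ($3 / $15 per MTok): 40 MTok in, 4 MTok out.
realtime = job_cost(40, 4, 3.00, 15.00)
batched = job_cost(40, 4, 3.00, 15.00, batch=True)
print(f"real-time: ${realtime:.2f}  batched: ${batched:.2f}")
```

The trade-off is latency: batching only fits work where nobody is waiting on the answer.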

5. Enterprise vs API access

You can access Claude directly through the Anthropic API or via platforms like AWS Bedrock and, soon, Google Cloud Vertex AI. Pricing is usually aligned, but enterprise packaging often layers in extras like SLA guarantees, security controls, and support. For CFOs, that can change whether Claude appears as a line-item API cost or as part of broader cloud spend.

6. Hidden usage costs

Slow or unsatisfying responses can hurt developer productivity, leading teams to “upgrade” to Opus for stronger reasoning even when a cheaper model would do. Large context prompts can bloat bills without delivering incremental value. And if usage spikes unexpectedly, for example, when a SaaS product scales or customers hammer a Claude-powered feature, costs can escalate.

With these factors in mind, let’s look at the hard numbers.

Claude Pricing Plans Explained

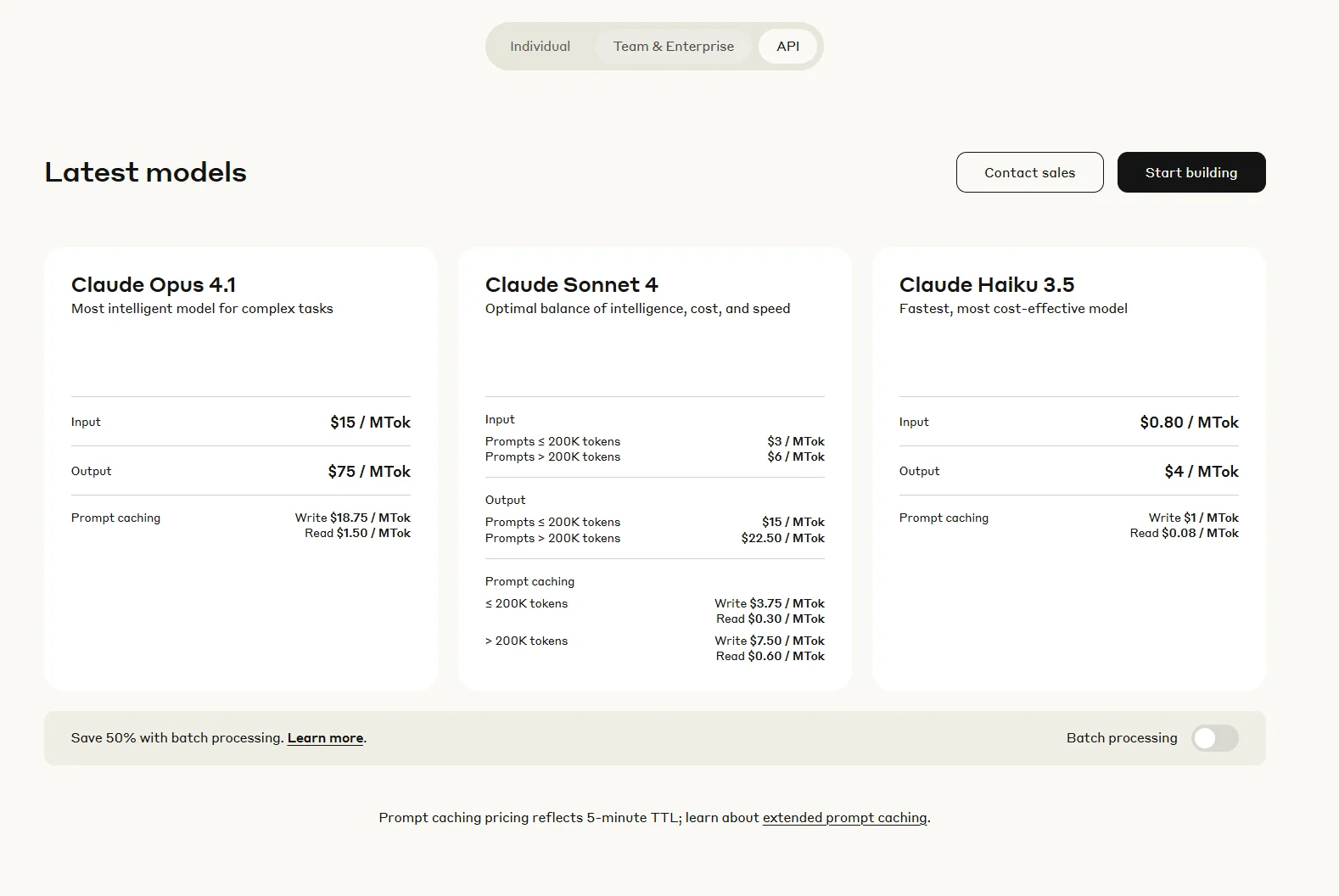

Here is the current breakdown across the latest models without batch processing.

Image: Claude API pricing without batch processing

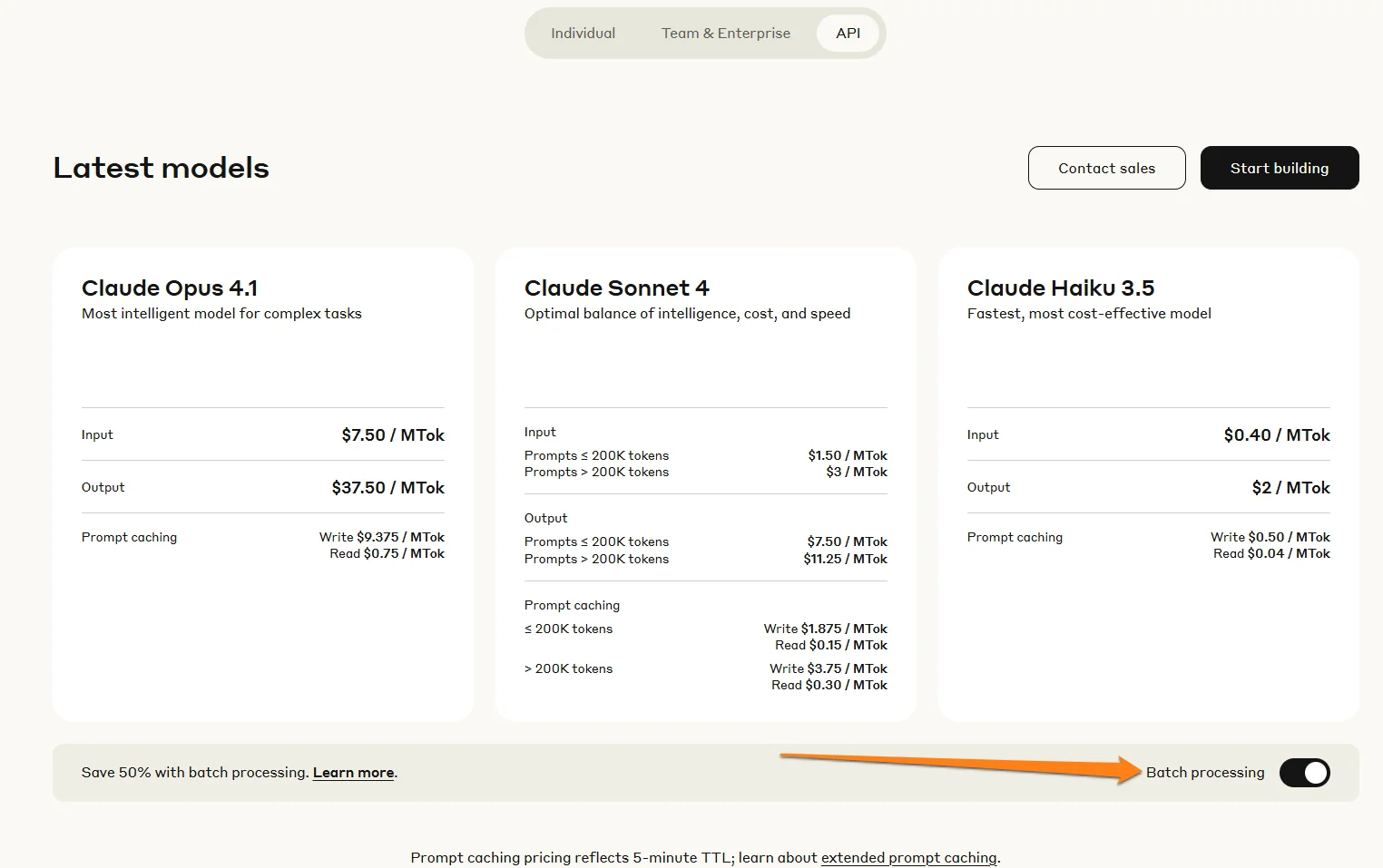

With batch processing, the pricing is slashed in half:

Image: Claude API pricing with batch processing

MTok stands for 1 million tokens. The pricing reflects standard API rates across Anthropic and AWS Bedrock.

Additionally, prompt caching can reduce repeated context costs by up to 90%. For example, if your SaaS app sends the same system prompt to Sonnet hundreds of times a day, reading those tokens from cache costs $0.30 per MTok instead of the standard $3.

Picture this:

| Model | Write Cache ($/MTok) | Read Cache ($/MTok) |
| --- | --- | --- |
| Opus 4/4.1 | $18.75 | $1.50 |
| Sonnet 4 | $3.75 | $0.30 |
| Haiku 3.5 | $1.00 | $0.08 |
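To see what those cache rates mean for a repeated system prompt, here is a hedged sketch using the Sonnet 4 figures from the table above. The prompt size, call volume, and number of cache writes per day are hypothetical. How often the cache must be rewritten depends on cache expiry, which this sketch does not model.

```python
# Sonnet 4 rates in USD per MTok, from this guide.
BASE_INPUT = 3.00
CACHE_WRITE = 3.75
CACHE_READ = 0.30


def uncached_cost(prompt_tokens, calls):
    """Cost of sending the same prompt on every call without caching."""
    return prompt_tokens * calls * BASE_INPUT / 1_000_000


def cached_cost(prompt_tokens, calls, cache_writes):
    """Cost when `cache_writes` calls write the cache and the rest read it.

    `cache_writes` is an assumption you supply; it depends on cache expiry.
    """
    writes = prompt_tokens * cache_writes * CACHE_WRITE
    reads = prompt_tokens * (calls - cache_writes) * CACHE_READ
    return (writes + reads) / 1_000_000


# Hypothetical: a 5,000-token system prompt, 500 calls a day, 10 cache writes a day.
plain = uncached_cost(5_000, 500)
cached = cached_cost(5_000, 500, 10)
print(f"uncached ${plain:.2f}/day, cached ${cached:.4f}/day, "
      f"saving {1 - cached / plain:.0%}")
```

With these made-up numbers the saving lands a little under the 90% headline figure, because every cache write costs slightly more than a normal input token.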

Claude AI Pricing Examples To Clear Things Up

Let’s take three SaaS scenarios so we can get a good grasp of what to expect here.

Scenario 1: Developer Assistant (Sonnet 4)

Roughly 20 interactions/day (about 450 per month), each about 1,700 input + 1,700 output tokens

Total/month (5 days/week): about 765K input + 765K output = 1.53M tokens

The cost would be:

- Input: 0.765M × $3 = $2.30

- Output: 0.765M × $15 = $11.48

- Total: $13.78/month per developer

Scenario 2: Same Workflow in Opus 4

Same token usage as above

- Input: 0.765M × $15 = $11.48

- Output: 0.765M × $75 = $57.38

- Total = $68.86/month per developer

Sonnet is 5X cheaper for routine dev tasks, while Opus should be reserved for high-stakes work.
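If you want to rerun Scenarios 1 and 2 with your own token counts, a minimal sketch follows. The rates and the 0.765 MTok volumes come straight from the scenarios above. The dictionary and function names are just illustrative.

```python
# USD per MTok as (input, output), as quoted in the scenarios above.
RATES = {
    "Sonnet 4": (3.00, 15.00),
    "Opus 4": (15.00, 75.00),
}

INPUT_MTOK = 0.765   # monthly input volume from Scenario 1
OUTPUT_MTOK = 0.765  # monthly output volume from Scenario 1


def scenario_cost(model):
    """Monthly USD cost per developer for the given model."""
    in_rate, out_rate = RATES[model]
    return INPUT_MTOK * in_rate + OUTPUT_MTOK * out_rate


sonnet = scenario_cost("Sonnet 4")
opus = scenario_cost("Opus 4")
print(f"Sonnet 4: ${sonnet:.2f}  Opus 4: ${opus:.2f}  ratio: {opus / sonnet:.1f}x")
```

Computed without rounding each line first, the totals come to $13.77 and $68.85, a cent below the figures above, which round input and output separately before adding them.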

Scenario 3: High-Volume Customer Support Bot (Haiku 3.5)

50K tickets/month, avg 500 tokens input + 300 tokens output

Total: 25M input + 15M output tokens

The cost is:

- Input: 25M × $0.80 = $20

- Output: 15M × $4 = $60

- Total = $80/month at massive scale

Here, Haiku can handle large-scale classification or support summaries at a fraction of the cost of Sonnet/Opus.
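Another way to read Scenario 3 is as a unit cost. The sketch below works out the cost of a single ticket at the Haiku 3.5 rates used above, then scales it to the monthly volume. The function name is hypothetical.

```python
# Haiku 3.5 rates in USD per MTok, as used in Scenario 3.
HAIKU_INPUT_RATE = 0.80
HAIKU_OUTPUT_RATE = 4.00


def cost_per_ticket(input_tokens, output_tokens):
    """USD cost of handling one ticket."""
    billed = input_tokens * HAIKU_INPUT_RATE + output_tokens * HAIKU_OUTPUT_RATE
    return billed / 1_000_000


unit = cost_per_ticket(500, 300)  # averages from Scenario 3
monthly = unit * 50_000           # 50K tickets/month
print(f"per ticket: ${unit:.4f}  per month: ${monthly:.2f}")
```

A per-ticket figure like this is easier to set against what a ticket is worth to the business than a monthly total is.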

Together, these numbers show why model right-sizing is crucial to your bottom line.

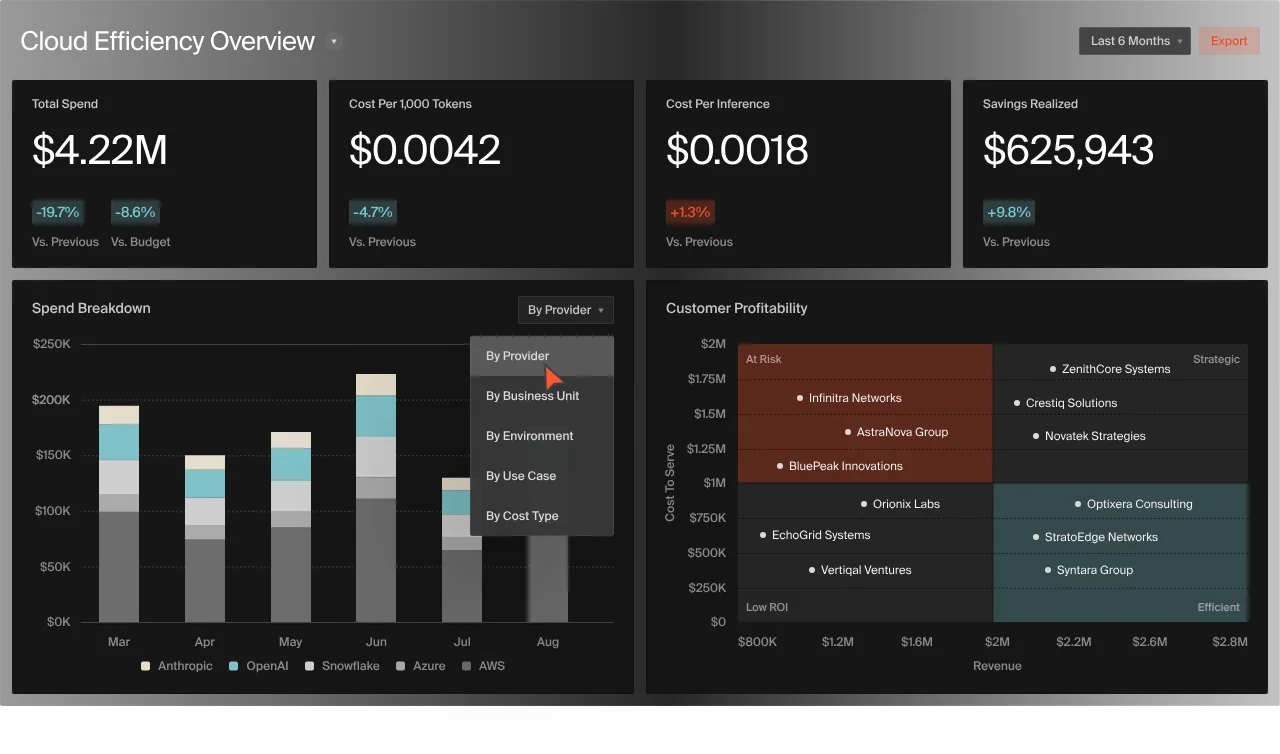

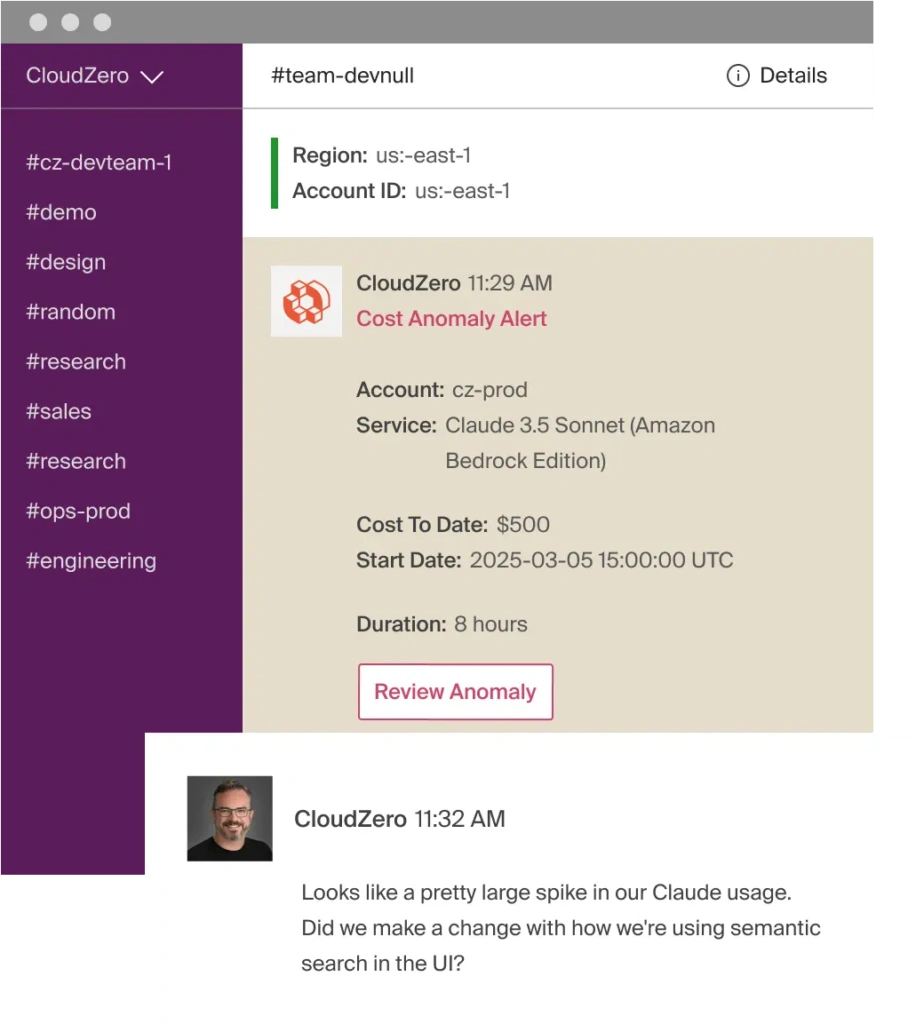

Now, here’s the thing. For CFOs, the danger isn’t what you see on the pricing page. The greater danger is the hidden costs buried in day-to-day usage. This is the whole reason CloudZero exists.

CloudZero helps you surface those blind spots across AI, R&D, and production, tying spend directly to business value. That way, you can tell exactly who and what is driving changes in your AI costs, why, and, more importantly, what to do about it before the bill hits.

In Claude’s case, a few overlooked “gotchas” can quickly eat into your margins. See the following areas where those risks hide, and how to rein them in without sacrificing innovation or growth.

Watch Out For These Claude AI Hidden Costs And Usage Considerations

Claude’s pricing looks straightforward on paper. In practice, your costs can creep in from less obvious places.

Context bloat

Loading entire codebases, PDFs, or documentation sets feels convenient, but those tokens add up fast. With Sonnet 4, crossing the 200K-token threshold can double your per-token input cost. If you’re not trimming input intelligently, you may pay enterprise-level prices for throwaway context.

Output inflation

Long-winded outputs (think of verbose explanations or unnecessarily detailed summaries) can silently multiply costs. Teams that don’t define output constraints, like word limits or structured response formats, often burn far more tokens than they expect.
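One way to put a ceiling on output spend is a hard token cap. In Anthropic’s Messages API that cap is the `max_tokens` request parameter. The sketch below only does the arithmetic for such a cap, using the Sonnet 4 output rate from this guide. The request volume and token figures are hypothetical.

```python
OUTPUT_RATE = 15.00  # Sonnet 4 output rate, USD per MTok, from this guide


def max_output_cost(requests, tokens_per_response, output_rate=OUTPUT_RATE):
    """Output spend in USD if every response uses `tokens_per_response` tokens."""
    return requests * tokens_per_response * output_rate / 1_000_000


# Hypothetical: 100,000 requests a month.
verbose = max_output_cost(100_000, 1_200)  # answers averaging 1,200 tokens
capped = max_output_cost(100_000, 300)     # worst case with a 300-token cap
print(f"verbose: ${verbose:.2f}  capped ceiling: ${capped:.2f}")
```

A cap truncates rather than summarizes, so it works best alongside prompt instructions that ask for short or structured answers.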

Latency trade-offs

Opus 4 and 4.1 are powerful but slower and more expensive. In time-sensitive SaaS environments, engineers sometimes “upgrade” to Opus hoping to get reasoning-heavy workflows right in fewer attempts, even when Sonnet or Haiku would have sufficed. That habit can quietly push your Claude bill into the thousands.

Usage spikes

Token-based pricing means unpredictable workloads can become surprise costs. Launch a new Claude-powered SaaS feature, and customer demand might suddenly double or triple your usage overnight. Without forecasting or real-time monitoring, your finance team can be left scrambling after the fact.
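As a rough illustration of real-time monitoring, here is a simple spike check that compares each day’s token usage with the average of the previous week. The window, the 2x threshold, and the usage data are all assumptions, and a production setup would likely need something more robust.

```python
from statistics import mean


def find_spikes(daily_tokens, window=7, threshold=2.0):
    """Return indexes of days whose usage exceeds `threshold` times
    the mean of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_tokens)):
        baseline = mean(daily_tokens[i - window:i])
        if baseline > 0 and daily_tokens[i] > threshold * baseline:
            spikes.append(i)
    return spikes


# Hypothetical usage in MTok per day; day 8 is a feature launch.
usage = [1.0, 1.1, 0.9, 1.0, 1.2, 1.0, 0.8, 1.1, 3.5, 1.0]
print(find_spikes(usage))
```

The first `window` days are never flagged because there is no baseline for them yet.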

Integration overhead

When you access Claude through cloud providers like AWS Bedrock, you’re also absorbing any markups or hidden costs tied to that platform. While pricing alignment is close to Anthropic’s direct API, enterprise teams should check whether cloud provider billing practices (minimums, bundling, data transfer) alter the bottom line.

These Claude cost best practices can help safeguard your AI budget. And as promised, there’s one platform built to help you understand, control, and optimize every dollar you spend on Claude.

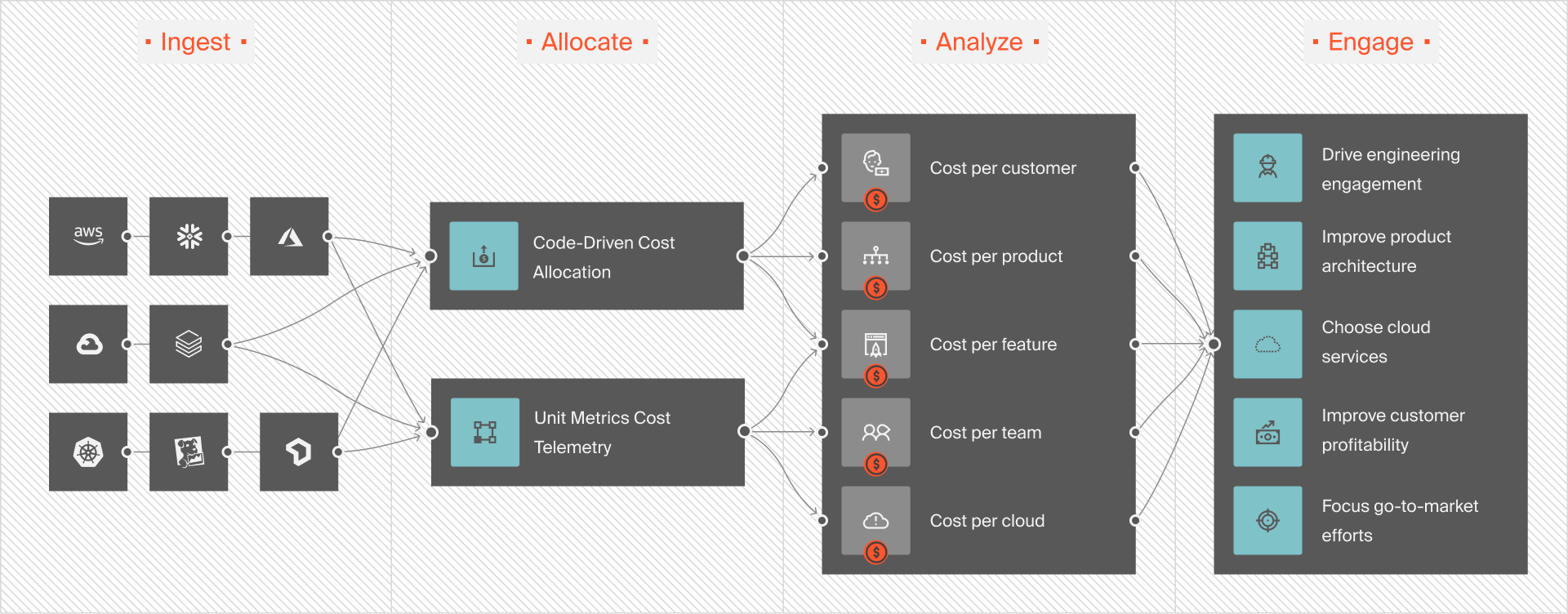

We’ll break that down in the next section.

Next Step: Align Claude Usage With Business Value — The Smarter Way

Claude’s pricing gives you flexibility, but without visibility, that flexibility quickly turns into risk and a fat bill. Pricing tables alone won’t tell you which products, features, or customers are driving those costs either.

That’s where CloudZero comes in.

CloudZero ties AI spend directly to business outcomes. Instead of just seeing “$12,000 in Claude API usage,” you can break it down into cost per model, per user, per feature, or per customer account. CFOs get the clarity to measure ROI. Engineering gets the guardrails to innovate without overspending.
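To show the idea of attribution in miniature, here is a generic sketch that rolls token usage records up by customer or by feature. It is not CloudZero’s implementation. The record fields, customer names, and token counts are hypothetical, and the rates are the ones quoted in this guide.

```python
from collections import defaultdict

# USD per MTok as (input, output), as quoted in this guide.
RATES = {"opus": (15.00, 75.00), "sonnet": (3.00, 15.00), "haiku": (0.80, 4.00)}


def cost_by(records, key):
    """Sum USD cost of usage records grouped by `key` (e.g. 'customer')."""
    totals = defaultdict(float)
    for r in records:
        in_rate, out_rate = RATES[r["model"]]
        billed = r["input_tokens"] * in_rate + r["output_tokens"] * out_rate
        totals[r[key]] += billed / 1_000_000
    return dict(totals)


# Hypothetical usage log entries.
records = [
    {"customer": "acme", "feature": "search", "model": "sonnet",
     "input_tokens": 2_000_000, "output_tokens": 200_000},
    {"customer": "acme", "feature": "triage", "model": "haiku",
     "input_tokens": 5_000_000, "output_tokens": 1_000_000},
    {"customer": "globex", "feature": "search", "model": "sonnet",
     "input_tokens": 1_000_000, "output_tokens": 100_000},
]

print(cost_by(records, "customer"))
print(cost_by(records, "feature"))
```

The same records answer two different questions depending on the grouping key, but only if each API call is tagged with its customer and feature when it is made.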

With CloudZero, you can:

- Track costs in real time by team, environment, or feature

- Catch anomalies before they spiral with alerts on token spikes

- Right-size model usage so Sonnet handles what doesn’t need Opus

- Forecast with precision so finance isn’t blindsided by AI bills

The goal isn’t to spend less on AI. It’s to spend smarter. And leading teams like Grammarly, Skyscanner, and Toyota already trust CloudZero to manage their $14.5 billion in cloud spend. Heck, we’ve just helped Upstart save $20 million and Drift over $2.4 million.

You can be next. See how CloudZero aligns Claude usage with business value, ensuring every token has a measurable return.

Claude AI Pricing FAQs

Is Claude AI free?

Claude offers a limited free tier for individual users through its web app, but usage caps out quickly. For production workloads or enterprise deployment, you’ll need to use the paid API via Anthropic or providers like AWS Bedrock.

How much does Claude AI cost compared to ChatGPT or GPT-5?

At the high end, Claude Opus 4/4.1 costs $15 per million input tokens and $75 per million output tokens. That’s comparable to GPT-4 class models and higher than GPT-5’s API pricing. Sonnet 4, at $3/$15, often undercuts GPT-4 on cost while offering a much larger context window (up to 1M tokens). Haiku 3.5 remains one of the cheapest options for scale workloads at $0.80/$4.

What’s the cheapest Claude model?

That’s Claude Haiku 3.5. It prioritizes low latency and cost efficiency. It’s best for high-volume or low-complexity tasks, like support ticket triage or text classification.

Does Claude AI have an enterprise pricing plan?

Yes. Anthropic offers enterprise access via API or through partners like AWS Bedrock and soon Google Cloud Vertex AI. Enterprise packaging typically includes SLAs, compliance, and support. Pricing is structured around token usage plus any cloud provider overhead.

How do tokens affect pricing?

Everything you send and everything Claude generates is measured in tokens, which are chunks of text a little shorter than an average English word. Bills scale with both input and output volume.
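For a back-of-the-envelope estimate before you send anything, you can approximate token counts from character counts. The sketch below assumes roughly four characters per token for English text. That ratio is a rule of thumb rather than an exact figure, and real counts depend on the model’s tokenizer and the language.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; an assumption


def estimate_tokens(text):
    """Approximate token count for a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_input_cost(text, rate_per_mtok):
    """Approximate USD cost of sending `text` as input at the given rate."""
    return estimate_tokens(text) * rate_per_mtok / 1_000_000


# Hypothetical 4,000-character support ticket sent to Haiku 3.5 ($0.80 per MTok).
ticket = "x" * 4_000
print(estimate_tokens(ticket), f"${estimate_input_cost(ticket, 0.80):.6f}")
```

Treat the result as a planning number only. The token usage reported with each API response is what you are billed on.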

How can we control Claude AI costs?

Use the right model for the task (Haiku for volume, Sonnet for balance, Opus for deep reasoning), leverage prompt caching and batch mode, and monitor usage with a robust, accurate, and real-time AI cost intelligence platform like CloudZero.