As cloud systems become increasingly sophisticated, you want a cloud monitoring platform that helps you identify, isolate, and fix root-cause issues quickly. Meanwhile, engineering leaders are under increasing pressure to reduce technology costs as the global economic outlook remains uncertain.

With Datadog, you can observe, monitor, analyze, and report on the health of your infrastructure, applications, and services, in any cloud, and at scale. We hear from engineers that Datadog is easy to use, unlike quite a few alternatives, and that it offers actionable insights into root causes and cloud cost drivers.

One big complaint we often hear about Datadog is that it can be quite expensive. In this guide, we’ll discuss Datadog pricing, cost optimization strategies, and more.


What Does Datadog Do?

Datadog is a full-stack observability and cloud monitoring platform. You can track the health and performance of your cloud applications by aggregating, analyzing, and reporting telemetry and logs with Datadog.

Datadog offers over 18 monitoring services, organized into five categories: infrastructure health monitoring, application performance monitoring (APM), log management, digital experience monitoring, and security posture tracking.

You can monitor any software stack or application at various levels of detail with Datadog’s all-in-one monitoring solution.

How Datadog Pricing Works (And How Much Does Datadog Cost?)

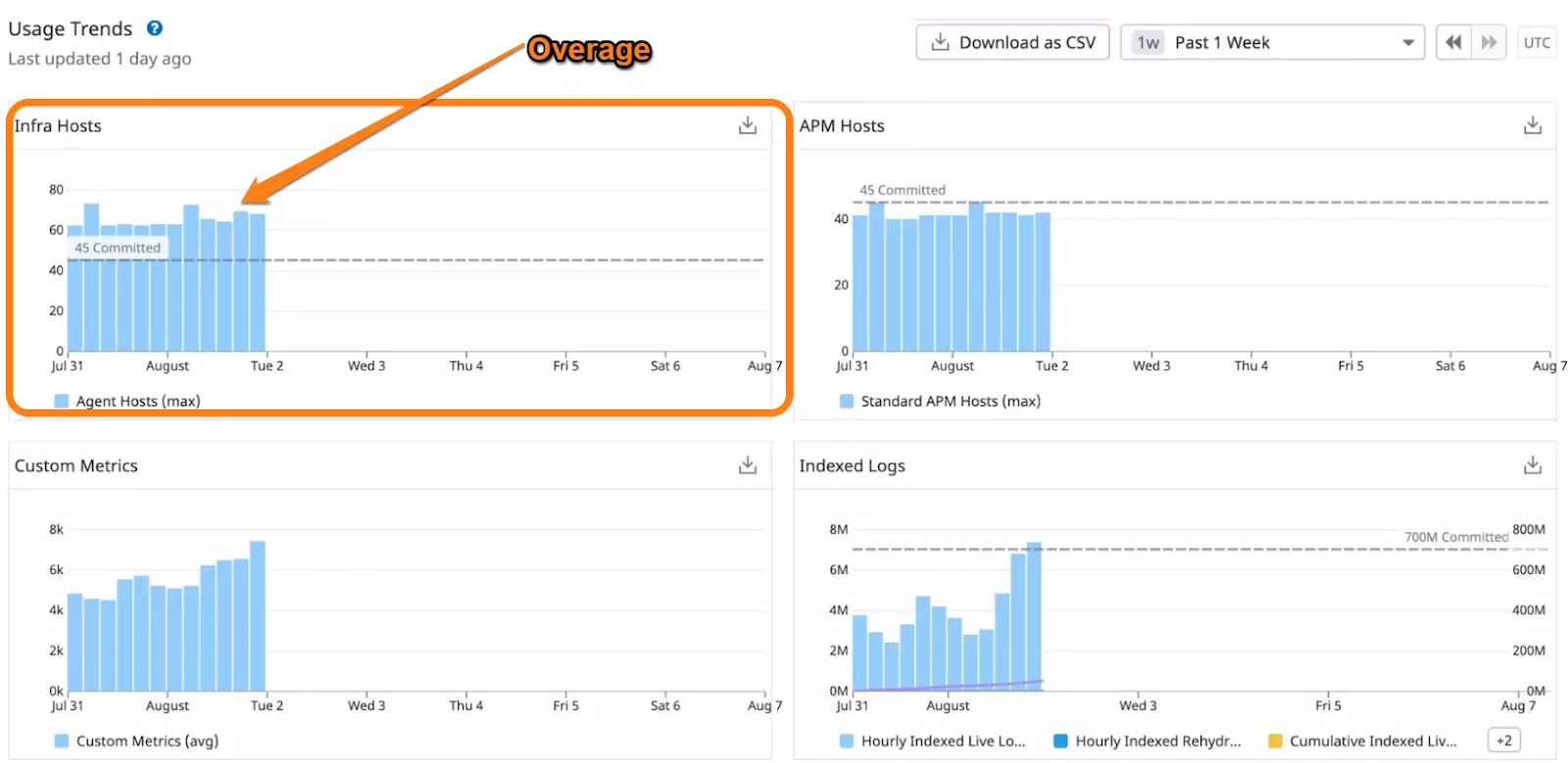

Datadog pricing is usage-based and depends on the specific monitoring services you configure it to track. Infrastructure monitoring is priced differently from application performance monitoring, and so on.

There are a few more variables to consider, though. Some services charge per host while others bill you per user, per GB of data, by spend, or per session/test/function/run.

For example, Datadog charges per host per month for infrastructure monitoring. It defines a host as any virtual or physical OS instance it monitors. That instance may be a server, virtual machine (VM), node (for Kubernetes) or App Service Plan instance (for Azure App Services).

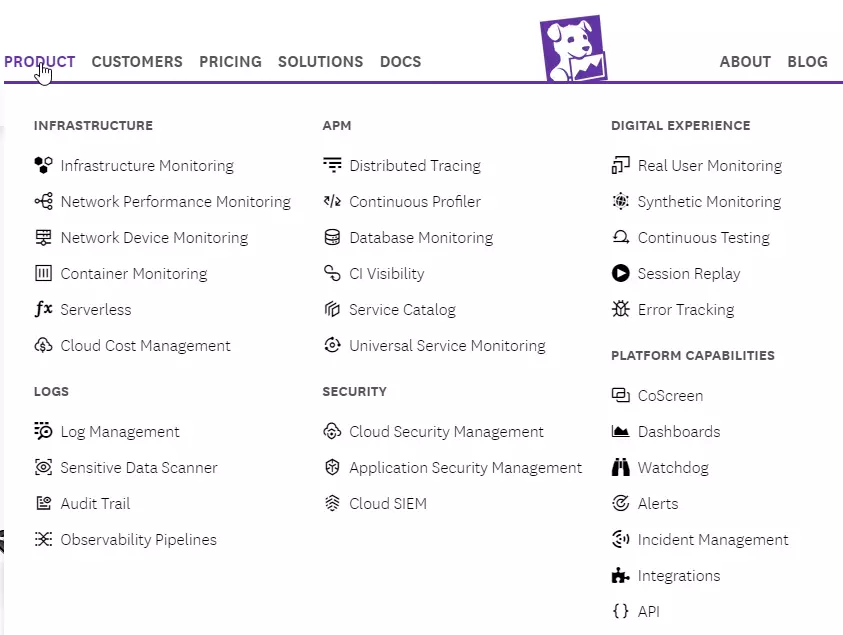

The service is free for up to five hosts, with 1-day full-resolution data retention. After that, Datadog plans start at $15 per host per month, and volume discounts apply if you use more than 600 hosts per month.

Datadog infrastructure monitoring plans
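To make the per-host model concrete, here is a minimal Python sketch using the list prices above. It assumes that once you move past the free tier, every host is billed at the $15 starting rate (not only hosts beyond the fifth), and it ignores negotiated volume discounts. The function name `infra_monthly_cost` is hypothetical.

```python
# Sketch: estimate monthly Datadog infrastructure monitoring cost from the
# list prices quoted in this article.
# Assumption: past the free tier, ALL hosts are billed at the paid rate.
# Volume discounts above 600 hosts are negotiated and not modeled here.

FREE_TIER_MAX_HOSTS = 5
PRICE_PER_HOST = 15.00  # USD per host per month (starting price)


def infra_monthly_cost(hosts: int) -> float:
    """Return the estimated monthly cost in USD for `hosts` monitored hosts."""
    if hosts < 0:
        raise ValueError("hosts must be non-negative")
    if hosts <= FREE_TIER_MAX_HOSTS:
        return 0.0  # free tier (1-day full-resolution retention)
    return hosts * PRICE_PER_HOST


for n in (5, 6, 50, 600):
    print(n, infra_monthly_cost(n))
```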

Why Optimize Datadog Costs?

Monitoring costs can add up quickly with Datadog. For example, when running microservices, the monitoring service can pull a lot of logs, increasing your ingestion costs. In addition, Datadog charges increasingly higher data retention fees the longer you store your monitoring metrics and logs.

It also charges $1 per container per month (committed use), meaning your Datadog charges can ramp up quite a bit as your containerized application scales.

Even so, building your own cloud monitoring stack with open-source and “free” tools, such as Grafana and Prometheus, can take a lot of time and skill, and may end up costing more than using a SaaS monitoring service like Datadog.

Yet, if you’re like most engineers, CTOs, and CFOs, you’re under pressure to keep costs low and ROI high. However, you do not want to indiscriminately slash your Datadog cloud monitoring cost. Instead, you’ll want to optimize it. Here’s the difference.

What Is Datadog Cost Optimization?

This refers to reducing the cost of using Datadog by identifying inefficiencies you can remove, minimize, or repurpose.

Cost optimization for Datadog, like with other cloud tools, requires that you first understand where your money is going. Only then can you efficiently pinpoint what to cut or retain without weakening your monitoring and observability capabilities.

To optimize Datadog costs, organizations experiment with a variety of workarounds, including:

- Reducing the time they keep logs in Datadog

- Ingesting a portion of the logs without retaining them at all (and dealing with log rehydration when required)

- Ingesting fewer logs altogether, potentially hindering observability and monitoring

Workarounds like these are not ideal. Besides hindering insights, these moves can also negatively impact regulatory compliance. Instead, here are seven legitimate ways to reduce your Datadog costs without hurting your ability to derive actionable insights.

Improve Your Datadog Costs With These Cost Optimization Best Practices

Give the following techniques a try and see how your Datadog costs change over time.

1. Chat with your account manager or sales rep

Your Datadog Account Manager or sales representative can be helpful in several ways. Here are two example scenarios.

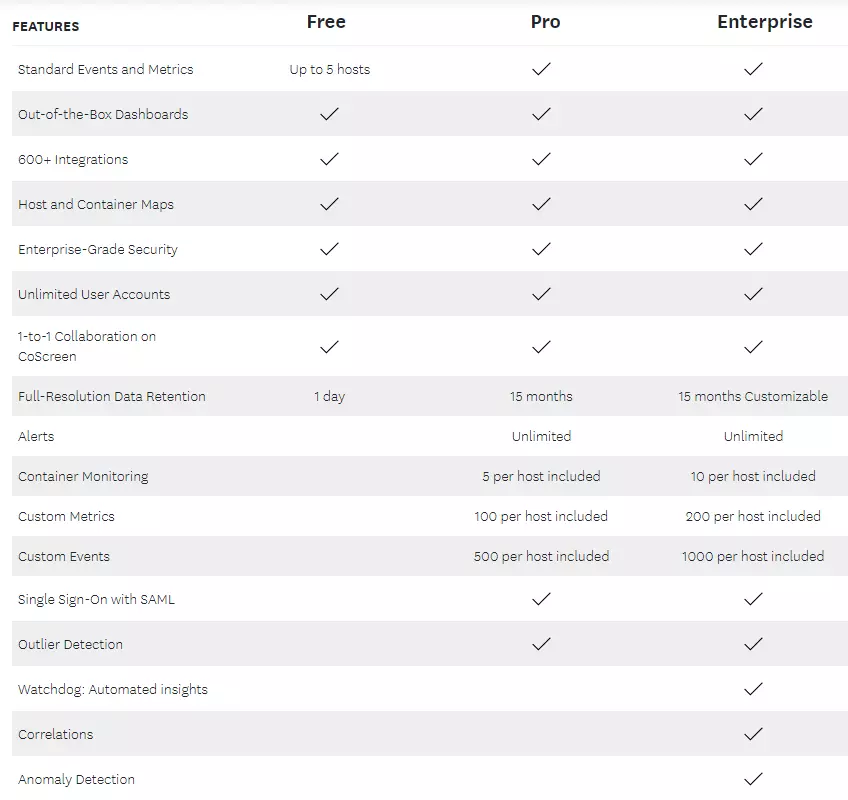

You can ask them to clarify any gray areas in your potential contract. For instance, ask about the price and impact of overages on your Datadog subscription.

Take services that charge “per host”, for example. They come with a limited volume of metrics and containers that you can query and monitor. Exceeding this threshold can incur overages, which can be expensive (Datadog charges $0.002 per additional container per hour).

Overages in Datadog usage
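A rough way to size container overages is sketched below. The $0.002 per container-hour rate comes from the text; the per-host container allotment, and the assumption that allotments are pooled across hosts, are hypothetical placeholders to replace with the terms in your contract.

```python
# Sketch: on-demand container overage cost at the $0.002 per container-hour
# rate quoted above. `included_per_host` and pooling across hosts are
# HYPOTHETICAL assumptions; check your contract for the real allotment.

OVERAGE_RATE = 0.002  # USD per additional container per hour


def container_overage_cost(hosts: int, included_per_host: int,
                           avg_containers: float, hours: int) -> float:
    """Cost of containers above the pooled allotment over `hours` hours."""
    allotment = hosts * included_per_host
    excess = max(0.0, avg_containers - allotment)
    return excess * OVERAGE_RATE * hours


# Example: 20 hosts, a hypothetical 5 included containers each, and 300
# containers running on average across a 720-hour (30-day) month.
print(round(container_overage_cost(20, 5, 300, 720), 2))
```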

Another thing to ask about is where the next pricing tier begins. The goal here is to understand when you are nearing the next pricing tier. With this in mind, you can then calculate the financial impact of bumping up your volume for better unit pricing.
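The sketch below shows the comparison to run once your account manager gives you the real tier boundary and unit prices. Every number in it, including the $13 tier price, is a hypothetical placeholder, and it assumes the lower tier price applies to all units once you reach the threshold.

```python
# Sketch: is it cheaper to bump usage up to the next pricing tier?
# All prices and thresholds are HYPOTHETICAL placeholders.


def bump_is_cheaper(current_units: int, current_price: float,
                    tier_threshold: int, tier_price: float) -> bool:
    """True if paying for at least `tier_threshold` units at the tier
    price costs no more than staying at the current volume and price."""
    cost_now = current_units * current_price
    cost_at_tier = max(current_units, tier_threshold) * tier_price
    return cost_at_tier <= cost_now


def break_even_units(current_price: float, tier_threshold: int,
                     tier_price: float) -> float:
    """Current volume above which jumping to the next tier pays off."""
    return tier_threshold * tier_price / current_price


# Hypothetical: $15/unit today, next tier at 600 units for $13/unit.
print(bump_is_cheaper(550, 15.0, 600, 13.0))  # 8,250 now vs 7,800 at tier
print(break_even_units(15.0, 600, 13.0))
```

With these made-up numbers, bumping up to 600 units pays off once you already use more than 520.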

One more tip. First-time Datadog users can request a free trial to test out the platform before signing up. This reduces your risk by verifying the tool is suitable for your monitoring needs before spending money or committing long-term.

2. Choose the right commitment level for your needs right off the bat

A crucial aspect of negotiating your Datadog contract is selecting an ideal commitment level for your requirements. You do not want to overcommit from the beginning, even if you know your usage will grow over time.

How?

Instead, you can adjust your committed volumes two or three times a year, which can help optimize your unit prices.

But keep in mind that you can only increase, not decrease, your commitment level. So it may be smarter to set your commitment at the lower end of your projected volume range and increase it gradually as your needs grow.

Something else. Datadog offers three payment options: annual, monthly, or hourly. An annual commitment delivers the most savings ($23 per host per month billed annually vs. $27 per host per month on demand on the Enterprise plan, for example). You can, however, choose a shorter-term commitment if cash flow is an issue and you need more flexibility.
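To see why committing at the low end of a projection tends to pay off, here is a small Python sketch that picks the cheapest commitment for a year of projected host counts. It assumes committed hosts are billed every month at the $23 annual rate whether or not you use them, and that usage above the commitment is billed at the $27 on-demand rate; confirm both rules with Datadog before relying on them. The projection itself is made up.

```python
# Sketch: choose an annual commitment level for projected monthly host counts.
# ASSUMPTIONS (not confirmed Datadog billing rules): committed hosts are paid
# for every month even if unused; usage above the commitment is billed on demand.

COMMITTED_RATE = 23.0  # USD per host per month, billed annually (Enterprise)
ON_DEMAND_RATE = 27.0  # USD per host per month, on demand (Enterprise)


def yearly_cost(commit: int, monthly_hosts: list[int]) -> float:
    """Total cost over the projection for a given commitment level."""
    total = 0.0
    for used in monthly_hosts:
        overflow = max(0, used - commit)
        total += commit * COMMITTED_RATE + overflow * ON_DEMAND_RATE
    return total


def best_commit(monthly_hosts: list[int]) -> int:
    """Commitment level (0..peak) with the lowest total cost."""
    candidates = range(0, max(monthly_hosts) + 1)
    return min(candidates, key=lambda c: yearly_cost(c, monthly_hosts))


# Made-up projection: 100 hosts for six months, then 140 hosts for six months.
projection = [100] * 6 + [140] * 6
print(best_commit(projection), yearly_cost(100, projection), yearly_cost(140, projection))
```

With this projection, the cheapest commitment is 100 hosts, the lower end of the range: committing to 140 would mean paying for 40 idle hosts for half the year. Since commitments can only be raised, you can revisit the level once the higher usage actually materializes.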

3. Take advantage of Datadog’s committed use discounts

The platform has monthly minimum usage commitments for various services, including infrastructure monitoring, APM, log management, and database monitoring. Now, Datadog does not publicly share all of the rates for minimum commitments.

But as an example, container monitoring costs $1 per prepaid container per month versus $0.002 per on-demand container per hour. In a 30-day month, using this approach can save you $0.44 per container per month in committed use discounts. That figure can add up fast as your application scales to thousands of containers.
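The savings figure above is easy to reproduce. This sketch assumes a container runs for the entire 30-day (720-hour) month; a container that runs fewer than 500 hours a month ($1 / $0.002) would actually be cheaper on demand.

```python
# Reproduce the prepaid vs on-demand container arithmetic from the text.
# Assumption: each container runs for the full 30-day month.

PREPAID_PER_MONTH = 1.00    # USD per prepaid container per month
ON_DEMAND_PER_HOUR = 0.002  # USD per on-demand container per hour
HOURS_IN_30_DAYS = 24 * 30  # 720 hours

on_demand_per_month = ON_DEMAND_PER_HOUR * HOURS_IN_30_DAYS
savings_per_container = on_demand_per_month - PREPAID_PER_MONTH


def monthly_savings(containers: int) -> float:
    """Monthly savings from prepaying for `containers` full-time containers."""
    return round(containers * savings_per_container, 2)


print(round(on_demand_per_month, 2), round(savings_per_container, 2))
print(monthly_savings(1000))
```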

4. Reconsider what custom metrics to monitor

Datadog deems a metric custom if it doesn’t come from one of its 600-plus integrations. Each custom metric is identified by a unique combination of a metric name and tag values (such as host tags). The Pro plan includes 100 custom metrics per host, while the Enterprise plan includes 200.

Here’s the thing. Custom metric allotments are pooled across hosts. So on the Pro plan, every ten monitored hosts give you 1,000 custom metrics. By configuring only some hosts for custom metrics, for instance, you can allot 400 custom metrics each to two hosts and share the remaining 200 across the other eight (such as build servers).
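Here is a minimal sketch of the pooling arithmetic, assuming the Pro plan’s 100 custom metrics per host. The host names are hypothetical, and the eight build servers split their 200 metrics evenly only for simplicity.

```python
# Sketch: custom metric allotments are pooled across hosts, so individual
# hosts can use more or less than the per-host figure as long as the total fits.

PER_HOST_ALLOTMENT = {"pro": 100, "enterprise": 200}


def pool_size(hosts: int, plan: str) -> int:
    """Total custom metrics available across all hosts on a plan."""
    return hosts * PER_HOST_ALLOTMENT[plan]


def fits_pool(allocation: dict[str, int], plan: str) -> bool:
    """True if the per-host allocation stays within the pooled allotment."""
    return sum(allocation.values()) <= pool_size(len(allocation), plan)


# Ten Pro hosts (hypothetical names): two heavy hosts get 400 each,
# and eight build servers share the remaining 200.
allocation = {"app-1": 400, "app-2": 400}
allocation.update({f"build-{i}": 25 for i in range(1, 9)})
print(pool_size(10, "pro"), sum(allocation.values()), fits_pool(allocation, "pro"))
```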

Then, once you streamline how you use custom metrics in your account, you can remove unused tags. When you add tags, make sure each new tag sits at a coarser level than the tags you already use. For instance, if you add a state tag to a collection of metrics you already track at the city level, your custom metrics allocation will not be affected, because each city maps to exactly one state and no new tag combinations are created.
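The effect of tag granularity can be illustrated by counting unique combinations of metric name and tag values. The metric name, cities, and hosts below are made-up sample data, and this is a simplified model of custom metric counting, not Datadog’s billing code.

```python
# Sketch: each unique combination of metric name and tag values counts as one
# custom metric. A coarser tag (state on top of city) adds no combinations;
# a finer tag (host) multiplies them. Sample data is made up.


def count_custom_metrics(points: list[dict[str, str]]) -> int:
    """Number of distinct (metric name, tag values) combinations."""
    return len({tuple(sorted(p.items())) for p in points})


points = [
    {"metric": "checkout.latency", "city": "Austin", "host": "h1"},
    {"metric": "checkout.latency", "city": "Austin", "host": "h2"},
    {"metric": "checkout.latency", "city": "Dallas", "host": "h3"},
    {"metric": "checkout.latency", "city": "Boston", "host": "h4"},
]
CITY_TO_STATE = {"Austin": "TX", "Dallas": "TX", "Boston": "MA"}

by_city = [{"metric": p["metric"], "city": p["city"]} for p in points]
with_state = [{**c, "state": CITY_TO_STATE[c["city"]]} for c in by_city]
with_host = [{"metric": p["metric"], "city": p["city"], "host": p["host"]}
             for p in points]

print(count_custom_metrics(by_city))     # 3
print(count_custom_metrics(with_state))  # 3 (state is coarser than city)
print(count_custom_metrics(with_host))   # 4 (host is finer than city)
```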

5. Turn off Datadog containerized Agent logs

A portion of the logs generated by the Datadog Agent are the Agent tracking its own performance, and you pay for them. To reduce these ingestion and transfer costs, you’ll want to exclude the Agent’s own logs at both the ingestion and Agent levels.

Disabling log management is also an option. It is disabled by default, but it’s worth checking whether it was enabled and left on, by mistake or on purpose. Remember, it costs $1.27 per one million log events per month and $0.10 per ingested/scanned GB per month, so disabling it can yield substantial savings over time.
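For a rough monthly estimate, you can combine the two rates quoted above. This sketch assumes both charges apply to the same month’s volume and that no other log management fees (such as longer retention) are involved; the example volumes are hypothetical.

```python
# Sketch: monthly log management cost using the two rates quoted in the text.
# Assumption: both charges apply to the same month's volume; other fees ignored.

EVENT_RATE_PER_MILLION = 1.27  # USD per one million log events per month
INGEST_RATE_PER_GB = 0.10      # USD per ingested/scanned GB per month


def monthly_log_cost(events: int, gigabytes: float) -> float:
    """Estimated monthly cost in USD, rounded to cents."""
    event_cost = events / 1_000_000 * EVENT_RATE_PER_MILLION
    ingest_cost = gigabytes * INGEST_RATE_PER_GB
    return round(event_cost + ingest_cost, 2)


# Hypothetical month: 500 million events and 2,000 GB ingested.
print(monthly_log_cost(500_000_000, 2_000))
```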

6. Ingestion controls and retention filters to the rescue

Datadog enables you to set ingestion controls so that only the most crucial traces from an application are sent. You can then use retention filters to decide which ingested spans are indexed and retained once the traces arrive. Tweaking these settings can help minimize APM overages.
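The sketch below illustrates the general idea behind trace ingestion controls: keep every trace that contains an error and a fixed fraction of the rest, deciding once per trace ID so all spans of a trace share the same decision. It is a generic head-based sampling example, not Datadog’s API or its actual sampling algorithm, and the 10% keep rate is a hypothetical value.

```python
# Sketch: generic head-based trace sampling (NOT Datadog's API).
# Errors are always kept; other traces are kept with probability KEEP_RATE,
# decided deterministically from the trace ID so every span of a trace agrees.
import hashlib

KEEP_RATE = 0.10  # hypothetical fraction of non-error traces to keep


def keep_trace(trace_id: str, has_error: bool, rate: float = KEEP_RATE) -> bool:
    if has_error:
        return True
    digest = hashlib.sha256(trace_id.encode()).digest()
    # Take 53 bits so the division is exact and the result lies in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < rate


# Simulated traffic: every 50th trace has an error.
traces = [(f"trace-{i}", i % 50 == 0) for i in range(10_000)]
kept = [trace_id for trace_id, err in traces if keep_trace(trace_id, err)]
print(f"kept {len(kept)} of {len(traces)} traces")
```

Hashing the trace ID, rather than drawing a random number per span, is what keeps the decision consistent across services that see the same trace.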

7. Reduce egress charges

In addition to Datadog’s built-in billing, you may incur data transfer costs for traffic between Datadog and your infrastructure provider.

Transmitting a large volume of logs out through NAT gateways, or through public internet access points located in other clouds, incurs data egress charges. If you are an AWS customer, you can instead route this traffic through AWS PrivateLink, which lets you send logs to Datadog via an internal endpoint. Data transfer savings here can be as high as 80%.

Take The Next Step: Take Advantage Of Cloud Cost Intelligence

If you are looking to collect in-depth, yet easy-to-digest cloud cost insights, CloudZero can help. Unlike traditional cloud cost management tools, CloudZero’s code-driven approach makes it more of an observability tool than a mere cost platform.

That means you can automatically aggregate, analyze, and get reports on your tagged, untagged, and untaggable cost data from both your infrastructure and applications.

Besides, CloudZero breaks this data down into immediately actionable cost intelligence, such as cost per individual customer, per product, per software feature, per project, and more.

You can also view these insights by role: engineering (cost per deployment, per environment, per dev team, etc.), finance (cost per customer, per project, per message, etc.), and FinOps (COGS and more).

Better yet, you can use CloudZero AnyCost to combine and analyze costs of different cloud service providers together or independently, including AWS, Google Cloud, and Azure, as well as Kubernetes, Snowflake, MongoDB, Databricks, and New Relic.

To help you prevent overages, CloudZero provides real-time cost anomaly detection. Noise-free alerts notify you of abnormal cost trends as soon as possible via Slack, email, or your favorite incident management tool.

Plus, CloudZero offers a tiered pricing model that won’t vary month to month.

But don’t just take our word for it.  to see all of CloudZero’s features!
