Kubernetes is increasingly the standard for deploying, running, and maintaining cloud-native applications in containers. Kubernetes (K8s) automates most container management tasks, empowering engineers to manage high-performing, modern applications at scale.

Meanwhile, surveys from VMware and Gartner reveal that insufficient Kubernetes expertise prevents many organizations from fully adopting containerization. Understanding how Kubernetes components work removes this barrier.

To help, we’ve put together a bookmarkable guide to pods, nodes, clusters, and more. Let’s dive right in, starting with the very reason Kubernetes exists: containers.

Quick Summary

| | Pod | Node | Cluster |
| --- | --- | --- | --- |
| Description | The smallest deployable unit in a Kubernetes cluster | A physical or virtual machine | A grouping of multiple nodes in a Kubernetes environment |
| Role | Isolates containers from underlying servers to boost portability; provides the resources and instructions for how to run containers optimally | Provides the compute resources (CPU, memory, etc.) to run containerized apps | Has the control plane to orchestrate containerized apps through nodes and pods |
| What it hosts | Application containers, supporting volumes, and a shared IP address for the containers it groups | Pods with application containers inside them, plus the kubelet, container runtime, and kube-proxy | Nodes containing the pods that host the application containers, plus the control plane |

What Is A Container?

A container is a lightweight runtime that packages an application with everything it needs to run:

- Application code

- Libraries and runtimes

- Configuration files

- System dependencies

A container runs as an isolated process on a host system while sharing the host’s operating system kernel.

Because containers do not include a full operating system, they are smaller and faster to start than virtual machines.

What Is A Containerized Application?

In cloud computing, a containerized application is an application built using a cloud-native architecture to run within containers. A container can host either an entire application or small, distributed components (microservices).

Developing, packaging, and deploying applications in containers is called containerization. Containerized apps can run across a variety of environments and devices without compatibility issues.

Containerized applications offer a critical advantage: developers can isolate faulty containers and fix them independently before they affect other components or cause downtime. This isolation is difficult to achieve with traditional monolithic applications where a single bug can bring down the entire system.

What Is A Kubernetes Pod?

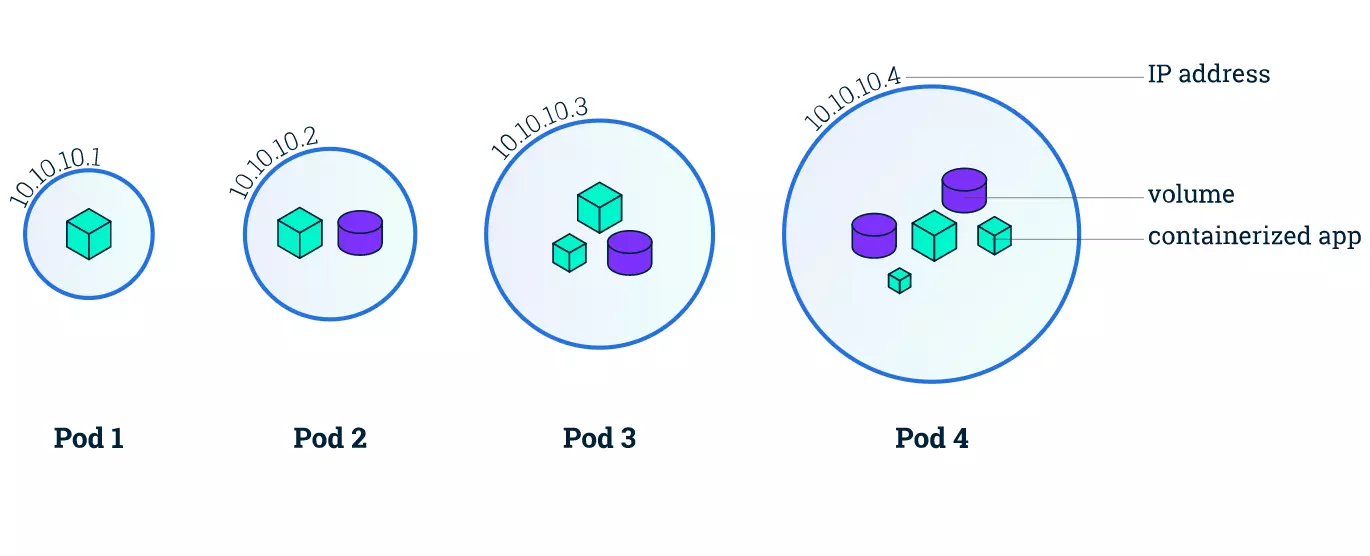

A Kubernetes pod is the smallest deployable unit in Kubernetes that runs one or more application containers. Pods provide an abstraction layer that includes:

- Shared storage volumes

- A single IP address shared by all containers in the pod

- Inter-container communication over localhost

- Host-level information for running containers

This architecture allows closely related containers to communicate efficiently while maintaining isolation from other pods.

Credit: Kubernetes Pods architecture by Kubernetes.io

In Kubernetes, containers are not deployed to a machine on their own; they always run inside a pod, which is the unit Kubernetes uses to manage container lifecycles.

Containers that must communicate directly with each other are housed in the same pod. These containers are also co-scheduled onto the same node because they work within a shared context. Additionally, shared storage volumes let data survive container restarts within the pod.
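To make the idea of co-located containers concrete, here is a minimal sketch that builds a Pod manifest as a Python dictionary and prints it as JSON (a format `kubectl apply -f` accepts alongside YAML). The pod name, container names, image tags, mount path, and the helper's command are illustrative placeholders, not values from this guide.

```python
import json


def build_pod_manifest(name: str) -> dict:
    """Return a Pod manifest with two containers that share one volume."""
    # Both containers mount the same volume at the same (hypothetical) path.
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # An emptyDir volume lives as long as the pod does, so its
            # contents survive individual container restarts.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",  # placeholder main container
                    "image": "nginx:1.27",
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "helper",  # placeholder sidecar container
                    "image": "busybox:1.36",
                    "command": [
                        "sh",
                        "-c",
                        "while true; do date >> /data/heartbeat.txt; sleep 5; done",
                    ],
                    "volumeMounts": [shared_mount],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pod_manifest("web-with-helper"), indent=2))
```

Because both containers sit in one pod, they share the pod's IP address and can reach each other over localhost, while the `emptyDir` volume gives them a common place to exchange files.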

Kubernetes can scale pods up or down to meet changing demand, typically through the Horizontal Pod Autoscaler. When traffic increases, it creates additional pod replicas; when demand decreases, it removes excess ones. Replicas of the same pod scale together as a group, maintaining consistent application behavior.
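As a rough illustration of how replica counts follow demand, the sketch below applies the core rule documented for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function and parameter names are our own, and the real controller also applies a tolerance and minimum/maximum bounds that are left out here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float) -> int:
    """Core scaling ratio only; tolerance and min/max bounds are omitted."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    # Load at double the target: the replica count doubles.
    print(desired_replicas(4, current_metric=200, target_metric=100))
    # Load at half the target: the replica count halves.
    print(desired_replicas(4, current_metric=50, target_metric=100))
```

Rounding up means the workload errs on the side of having slightly more capacity than the ratio strictly requires.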

Kubernetes also never creates containers on their own. Instead, it creates pods, and the containers are started inside them.

Also, whenever you create a K8s pod, the platform automatically schedules it to run on a Node. This pod will remain active until the specific process completes, resources to support the pod run out, the pod object is removed, or the host node terminates or fails.

Each pod runs on a single Kubernetes node. If that node fails, a controller such as a Deployment can start a replacement pod on a different node. Which brings us to Kubernetes nodes.

What Is A Kubernetes Node?

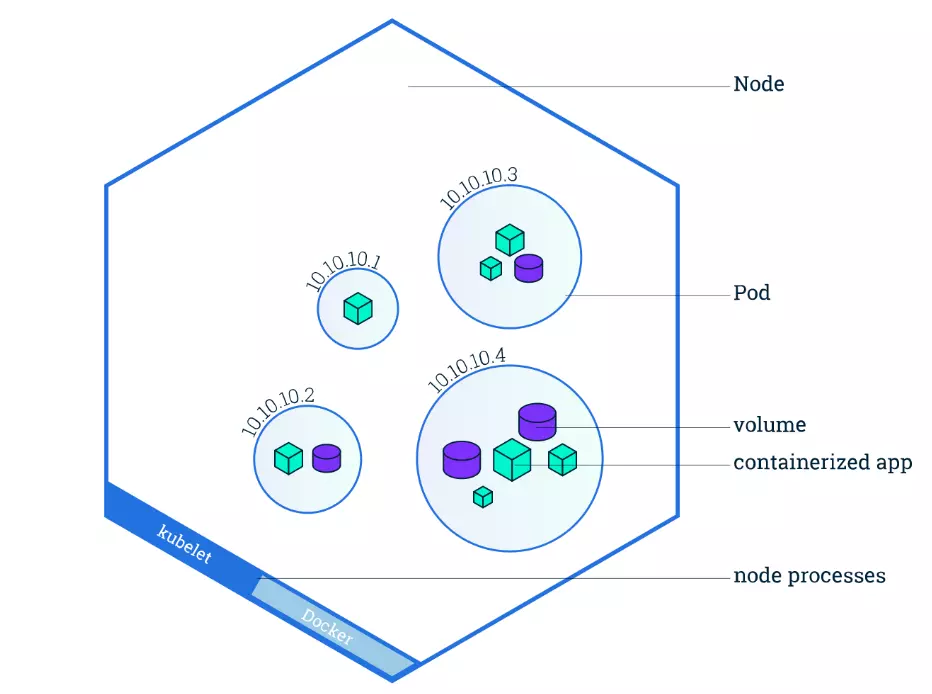

A Kubernetes node is either a virtual or physical machine on which one or more Kubernetes pods run. It is a worker machine that provides the necessary services to run pods, including the CPU and memory resources they require.

Now, picture this:

Credit: How Kubernetes Nodes work by Kubernetes.io

Each node includes three essential components:

- Kubelet: An agent that runs on each node to ensure pods run correctly, communicating with the control plane and managing pod lifecycles.

- Container runtime: The software that runs containers. Common runtimes include containerd and CRI-O; Docker Engine can also be used through the cri-dockerd adapter. The runtime retrieves container images from registries, unpacks them, and executes the application.

- Kube-proxy: A network proxy that maintains network rules on the node so that traffic sent to Kubernetes Services reaches the right pods, whether they run on the same node or elsewhere in the cluster.

Here’s what a Cluster is in Kubernetes.

What Is A Kubernetes Cluster?

Nodes usually work together in groups. A Kubernetes cluster consists of a set of worker machines (nodes). The cluster automatically distributes workload among its nodes, enabling seamless scaling.

The Kubernetes hierarchy works like this:

Cluster → Nodes → Pods → Containers

- A cluster consists of multiple nodes working together

- Each node (virtual or physical machine) provides compute resources and runs one or more pods

- Each pod contains one or more containers with shared networking and storage

- Each container hosts application code and all dependencies required to run
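The hierarchy above can be sketched as nested data structures. This is only a mental model in Python, not how Kubernetes stores its objects; every class, field, and example name below is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    name: str
    containers: list[Container] = field(default_factory=list)


@dataclass
class Node:
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    nodes: list[Node] = field(default_factory=list)

    def container_count(self) -> int:
        """Walk Cluster -> Nodes -> Pods -> Containers and count the leaves."""
        return sum(len(pod.containers) for node in self.nodes for pod in node.pods)


def build_example() -> Cluster:
    """Two nodes, two pods, three containers (all names are made up)."""
    web = Pod("web", [Container("app", "example/app:1.0"),
                      Container("log-helper", "example/logs:1.0")])
    api = Pod("api", [Container("api", "example/api:1.0")])
    return Cluster("demo", [Node("node-a", [web]), Node("node-b", [api])])


if __name__ == "__main__":
    print(build_example().container_count())
```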

The cluster also includes the Kubernetes Control Plane (or Master), which manages each node. The control plane is a container orchestration layer where K8s exposes the API and interfaces for defining, deploying, and managing containers’ lifecycles.

The control plane's scheduler assesses each node and places new pods based on available capacity. This placement is automatic, improves resource utilization, and is one of the most popular features of Kubernetes.
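Capacity-based placement can be pictured with a toy example. The sketch below is a deliberate simplification and not the actual Kubernetes scheduler, which filters and scores nodes on many more criteria; the function name and the CPU-only input are assumptions made for illustration.

```python
from typing import Optional


def pick_node(free_cpu_millicores: dict[str, int], pod_request: int) -> Optional[str]:
    """Pick the node with the most free CPU that can still fit the pod."""
    # Step 1: keep only nodes with enough free CPU for the pod's request.
    candidates = {name: free for name, free in free_cpu_millicores.items()
                  if free >= pod_request}
    if not candidates:
        return None  # nothing fits, so the pod would stay unscheduled
    # Step 2: prefer the node with the most headroom.
    return max(candidates, key=candidates.get)


if __name__ == "__main__":
    nodes = {"node-a": 500, "node-b": 1500, "node-c": 200}
    print(pick_node(nodes, pod_request=400))
```

Here `node-c` is ruled out because it cannot fit the request, and `node-b` wins over `node-a` because it has more free CPU.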

You can also run the Kubernetes cluster on different providers’ platforms, such as Amazon’s Elastic Kubernetes Service (EKS), Microsoft’s Azure Kubernetes Service (AKS), or the Google Kubernetes Engine (GKE).

Take The Next Step: View, Track, And Control Your Kubernetes Costs With Confidence

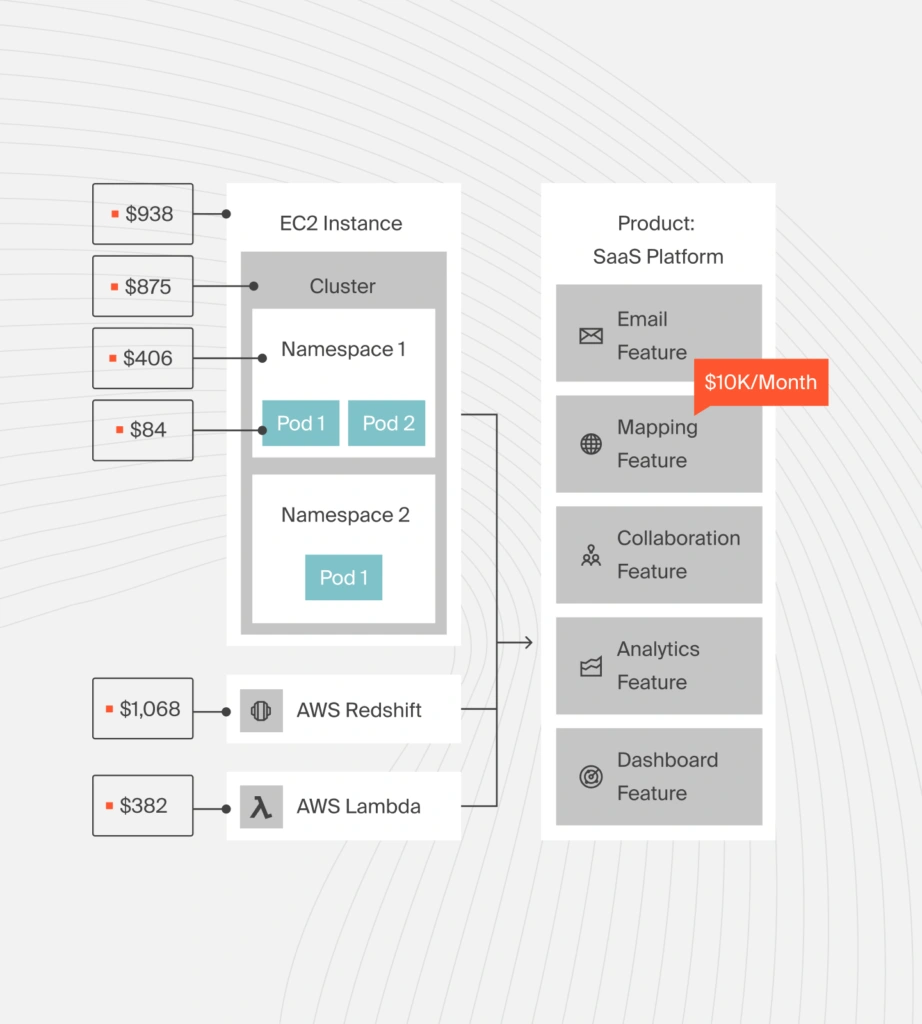

Kubernetes is powerful for managing containerized applications. It’s open-source, highly scalable, and self-healing. But as Kubernetes scales to support business growth, cost management often becomes an afterthought.

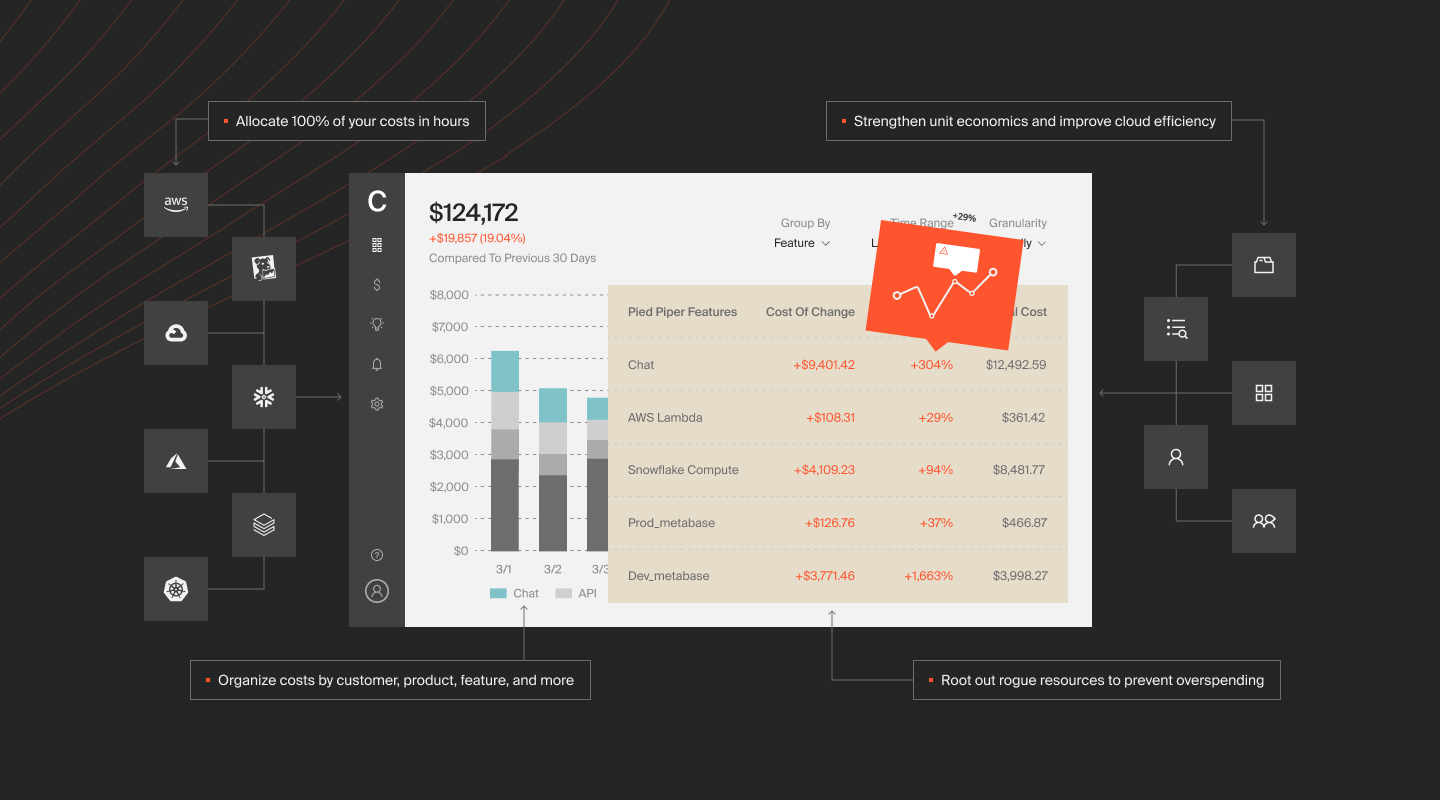

Most cloud cost tools show total spend without breaking down the portion from Kubernetes workloads. CloudZero provides hourly Kubernetes cost visibility by K8s concepts: cost per pod, container, microservice, namespace, and cluster. This granularity reveals which teams, products, and processes drive your Kubernetes spend.


You can also combine your containerized and non-containerized costs to simplify your analysis. CloudZero enables you to understand your Kubernetes costs alongside your AWS, Azure, Google Cloud, Snowflake, Databricks, MongoDB, and New Relic spend, giving you the full picture.

You can then decide what to do next to optimize the cost of your containerized applications without compromising performance. CloudZero will alert you to cost anomalies before you overspend.


Kubernetes FAQ

Is a Kubernetes pod a container?

No. A pod is not a container. A pod is a wrapper that runs one or more containers together with shared networking and storage. A pod is the smallest deployable unit in Kubernetes, not the container itself.

What is the difference between a container, a node, and a pod?

A container runs application code. A pod groups one or more containers. A node is the machine that runs pods. Each layer builds on the one below it.

Can a pod have multiple containers?

Yes. A pod can run multiple containers. This is used when containers must share resources or communicate closely over localhost. Containers in the same pod are scheduled and managed together.

How many pods run on a node?

There is no fixed number of pods per node. The limit depends on node resources, pod resource requests, and cluster configuration. The Kubernetes scheduler places pods based on available capacity.
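For a back-of-the-envelope estimate, the sketch below divides a node's allocatable CPU and memory by a pod's resource requests and caps the result at a per-node pod limit. The function name and sample figures are assumptions; 110 is the kubelet's usual default for maximum pods per node, though managed services often set their own value.

```python
def max_pods_that_fit(node_cpu_m: int, node_mem_mib: int,
                      pod_cpu_m: int, pod_mem_mib: int,
                      max_pods: int = 110) -> int:
    """Estimate how many identical pods fit on one node.

    CPU is in millicores and memory in MiB. Use the node's allocatable
    resources, not raw capacity, since system components reserve a share.
    """
    by_cpu = node_cpu_m // pod_cpu_m
    by_mem = node_mem_mib // pod_mem_mib
    # The tightest of the three limits decides the answer.
    return min(by_cpu, by_mem, max_pods)


if __name__ == "__main__":
    # Hypothetical node with 4 CPUs and 16 GiB, pods requesting 250m and 512 MiB.
    print(max_pods_that_fit(4000, 16384, 250, 512))
```

In this example CPU is the binding constraint: memory alone would allow twice as many pods.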

Does Kubernetes schedule containers directly?

No. Kubernetes schedules pods, not containers. Containers are always created and managed within a pod.