Kubernetes, also known as K8s, is an open-source, portable, and scalable container orchestration platform. With K8s, you can reliably manage distributed systems for your applications, enabling declarative configuration and automatic deployment.

Yet, K8s can be resource-intensive and costly, with a rather steep learning curve. But in 2019, a lighter, faster, and potentially more cost-effective alternative appeared: K3s. Still, K3s is not a magic wand that works for all Kubernetes deployments.

Here’s a look at what K3s is, the differences between K3s and K8s, their ideal use cases, and more.

K3s Explained: What Is K3s?

K3s is a lightweight version of stock Kubernetes (K8s) that is easy to install, deploy, and manage. It is not a fork of Kubernetes; it is a certified Kubernetes distribution.

Although K3s is a refined version of Kubernetes (the upstream version), it does not alter the core functionality of Kubernetes.

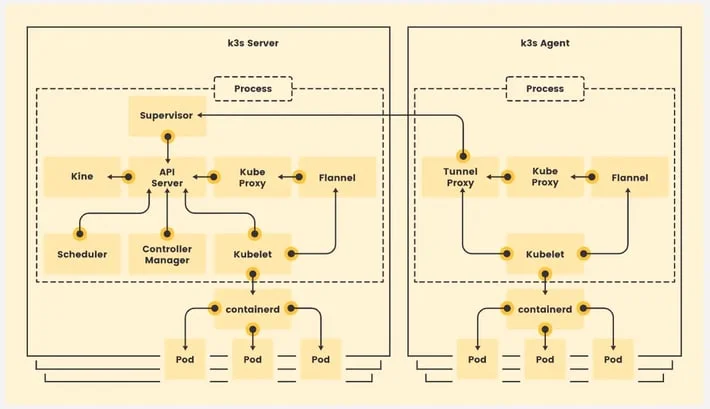

Credit: How K3s works

K3s removes a lot of “bloat” from stock Kubernetes. Depending on the version, the single binary file can range in size from under 40MB to 100MB and requires less than 512MB of RAM to run.

Rancher Labs created K3s when building RIO, a PaaS on Kubernetes project that’s no longer active.

When the project caught on, Rancher opened K3s to the community and donated it to the Cloud Native Computing Foundation (CNCF). Today, the CNCF hosts both K8s and K3s.

Rancher made K3s lightweight by removing a large volume of code from the K8s source (reportedly millions of lines). It trimmed most non-CSI storage providers, alpha features, and legacy components that weren’t necessary to fully implement the Kubernetes API.

What Are The Advantages Of K3s?

K3s boasts several powerful benefits:

- Lightweight – The single binary file is under 100MB, making it faster to start and less resource-intensive than stock Kubernetes (K8s). The server and agent each run as a single process instead of many separate component processes.

- A flatter learning curve – There are fewer components to learn before applying it to real-world situations.

- Certified Kubernetes distribution – It works well with other Kubernetes distributions. It is not inferior to K8s.

- Easier and faster installation and deployment – K3s takes seconds to minutes to install and run.

- Run Kubernetes on ARM architecture – Devices that use ARM64 or ARMv7 processors, such as single-board computers and edge gateways, can run Kubernetes with K3s.

- Run Kubernetes on Raspberry Pi – It’s so lightweight that it supports clusters made with Raspberry Pi.

- Supports low-resource environments – For example, IoT devices and edge computing.

- Remote deployment is easy – Bootstrap it with manifests to install after it comes online.

- Smaller attack surface, but batteries included – Although K3s is bare-bones, it contains all the components you need, including a CRI (containerd), an ingress controller (Traefik Proxy), and a CNI (Flannel). It also bundles essential add-ons such as CoreDNS.

- Supports single-node clusters – An in-built service load balancer connects Kubernetes Services with the host IP.

- Flexibility – Aside from the default SQLite, K3s also supports PostgreSQL, MySQL, and etcd datastores.

- Start/stop feature – Turn K3s on and off without interfering with the environment. Therefore, you can easily update K3s, restart it, and continue using it without any issues.
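The remote deployment point above can be made concrete. A K3s server watches the directory `/var/lib/rancher/k3s/server/manifests` and applies any manifest it finds there, so staging files in that directory is one way to bootstrap a node. The following is a minimal Python sketch of that idea; the helper name `stage_manifest`, the file name, and the `edge-apps` namespace are assumptions made for illustration.

```python
from pathlib import Path

# Directory that a K3s server scans for manifests to apply automatically.
K3S_MANIFEST_DIR = Path("/var/lib/rancher/k3s/server/manifests")

# Hypothetical example manifest: a namespace for edge workloads.
NAMESPACE_MANIFEST = """\
apiVersion: v1
kind: Namespace
metadata:
  name: edge-apps
"""


def stage_manifest(name: str, body: str, manifest_dir: Path = K3S_MANIFEST_DIR) -> Path:
    """Write a manifest into the auto-deploy directory and return its path."""
    manifest_dir.mkdir(parents=True, exist_ok=True)
    target = manifest_dir / f"{name}.yaml"
    target.write_text(body)
    return target
```

Calling `stage_manifest("edge-namespace", NAMESPACE_MANIFEST)` on a server node (with sufficient permissions) would leave the file where K3s picks it up once the server is running.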

But K3s isn’t limitless.

What Are The Disadvantages Of K3s?

Among its limitations is that K3s does not use a distributed database by default. Its default datastore is an embedded SQLite database, which cannot be shared between servers, so a default installation has a single-server control plane with no high availability.

To make the control plane highly available, you need to run multiple K3s servers against a shared datastore: either the embedded etcd that newer K3s releases can run, or an external database endpoint (such as etcd, PostgreSQL, or MySQL).

Earlier K3s releases experimented with Dqlite, a distributed, high-availability SQLite variant developed by Canonical that uses C-Raft to keep its footprint small. That experimental support was later replaced by embedded etcd.

Pro tip: Configure a 3-node etcd cluster and then deploy three K3s servers with one or more agents to create a high-availability cluster at the edge for ARM64 or AMD64 architectures. This gives you a production-ready environment (with HA for the control plane).
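As a rough sketch of the pro tip, the Python helpers below assemble the command lines for a K3s server that points at an external etcd cluster and for an agent that joins it. The `--datastore-endpoint`, `--token`, and `--server` flags are real K3s options; the host names and token are placeholders, the helper names are hypothetical, and a TLS-secured etcd would also need certificate flags that are omitted here.

```python
import shlex


def k3s_server_args(etcd_hosts, token, etcd_port=2379):
    """Build the argv for one K3s server backed by an external etcd cluster."""
    # Sketch-level policy (an assumption): insist on three or more etcd members.
    if len(etcd_hosts) < 3:
        raise ValueError("expected at least three etcd members for HA")
    endpoint = ",".join(f"https://{host}:{etcd_port}" for host in etcd_hosts)
    return ["k3s", "server", "--datastore-endpoint", endpoint, "--token", token]


def k3s_agent_args(server_host, token):
    """Build the argv for an agent joining through a server's API port (6443)."""
    return ["k3s", "agent", "--server", f"https://{server_host}:6443", "--token", token]


if __name__ == "__main__":
    etcd = ["etcd-1.example", "etcd-2.example", "etcd-3.example"]  # placeholders
    print(shlex.join(k3s_server_args(etcd, "REPLACE_WITH_SHARED_TOKEN")))
    print(shlex.join(k3s_agent_args("k3s-server-1.example", "REPLACE_WITH_SHARED_TOKEN")))
```

Running the same server command on each of the three server nodes gives them a shared datastore, which is what makes the control plane highly available.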

Could all these benefits mean that K3s is simply better than K8s?

What Is The Difference Between K3s And K8s?

The most significant difference between K3s and K8s is how they are packaged.

Kubernetes and K3s share the same source code (upstream version), but K3s contains fewer dependencies, cloud provider integrations, add-ons, and other components that are not absolutely necessary for installing and running Kubernetes.

So, it may be more relevant to compare the use cases each Kubernetes version excels at rather than comparing K3s and K8s in terms of which is better.

Before that, here are a few differences between the K3s and K8s:

- K3s is a lighter version of K8s, which ships with more extensions and drivers. So, while K8s often takes around 10 minutes to deploy, K3s can have the Kubernetes API up in as little as one minute. It also starts faster and is easier to auto-update and learn.

- K8s runs its components as separate processes, whereas K3s packages everything in a single binary: the server process runs the control plane components, and the agent process runs the kubelet and kube-proxy.

- K3s relies on a SQLite3 datastore by default. It supports MySQL, PostgreSQL, and etcd3 for more complex needs, but Kubernetes only supports etcd.

- Unlike K8s, you can switch off embedded K3s components, giving you the freedom to install your own DNS server, CNI, and ingress controller. You can also use an existing Docker installation as your container runtime in place of the containerd that ships with K3s.
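To illustrate the last point, K3s reads its options from `/etc/rancher/k3s/config.yaml`, where keys mirror the command-line flags. The Python sketch below renders such a file for a setup that disables bundled components; `render_k3s_config` is a hypothetical helper, and which components to disable is only an example choice.

```python
def render_k3s_config(disable=(), custom_cni=False, use_docker=False) -> str:
    """Render a K3s config.yaml body that switches off embedded components."""
    lines = []
    if disable:
        # Equivalent to passing --disable once per component.
        lines.append("disable:")
        lines.extend(f"  - {name}" for name in disable)
    if custom_cni:
        # Turn off the bundled Flannel so another CNI can be installed.
        lines.append('flannel-backend: "none"')
        lines.append("disable-network-policy: true")
    if use_docker:
        # Use an existing Docker installation instead of bundled containerd.
        lines.append("docker: true")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_k3s_config(disable=["traefik", "coredns"], custom_cni=True), end="")
```

The rendered text would be saved as the config file before the K3s server starts; the replacement DNS server, CNI, and ingress controller are then installed separately.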

The following section covers the best use cases for K3s versus K8s, which fully illustrate these differences and others. But before we dig into those, here’s a quick look at K3d.

What does K3d do?

K3d is an open-source tool that runs lightweight K3s clusters inside Docker containers. It enables you to build single-node or multi-node K3s clusters, including multi-server ones, which makes local Kubernetes development and testing easy.

Because each K3s node is just a container, you can add or remove nodes to scale a local cluster up or down with little additional effort.

By wrapping K3s, K3d exposes most K3s features and adds capabilities of its own, such as managing the full cluster lifecycle across both single-server and multi-server clusters. Paired with a tool such as Tilt, it also supports hot code reloading and building, deploying, and testing Kubernetes apps.

With its powerful commands, k3d also simplifies managing Docker-based K3s clusters.
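For a sense of what those commands look like, here is a small Python sketch that builds a `k3d cluster create` invocation for a multi-server local cluster. The `--servers` and `--agents` flags belong to the k3d CLI; the helper name and the cluster name `dev` are illustrative assumptions.

```python
import shlex


def k3d_create_command(name, servers=1, agents=0):
    """Build the argv for creating a local K3s-in-Docker cluster with k3d."""
    if servers < 1:
        raise ValueError("a cluster needs at least one server node")
    if agents < 0:
        raise ValueError("agents cannot be negative")
    return [
        "k3d", "cluster", "create", name,
        "--servers", str(servers),
        "--agents", str(agents),
    ]


if __name__ == "__main__":
    print(shlex.join(k3d_create_command("dev", servers=3, agents=2)))
    # prints: k3d cluster create dev --servers 3 --agents 2
```

The resulting list can be passed to `subprocess.run` on a machine that has Docker and k3d installed.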

Now back to K3s vs. K8s.

When To Use K3s

Among the best uses for K3s are:

- With K3s’s lightweight architecture, small businesses can run operations faster and with fewer resources, while enjoying high availability, scalability, security, and other benefits of a full-blown Kubernetes (K8s) architecture.

- Create a single-node cluster using K3s to maintain the deployment workflow of the manifests. It works great when you install K3s on an edge device or server. This enables you to use your existing CI/CD workflows and container images with the YAML files or Helm charts.

- For cloud deployments, point multiple K3s servers at a managed database, such as Google Cloud SQL or Amazon RDS, to get a highly available control plane. For maximum uptime, run each server in a separate availability zone.

- K3s supports AMD64, ARM64, and ARMv7 architectures, among others. That means you can run it anywhere from a Raspberry Pi, Intel NUC, or NVIDIA Jetson Nano to an a1.4xlarge Amazon EC2 instance, using a consistent installation process.

- K3s can also handle environments with limited resources and connectivity, including industrial IoT devices, edge computing, remote locations, and unattended appliances.

- It is a great way to run Kubernetes on-premises without additional cloud provider extensions. Using K3s with a third-party RDBMS or external/embedded etcd cluster can improve performance compared to a stock Kubernetes running in the same environment.

- K3s comes online faster than K8s, so it’s suitable for running batch jobs, cloud bursting, CI testing, and various other workloads requiring frequent cluster scaling.
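For the managed-database case in the list above, each K3s server receives a datastore endpoint string. The Python sketch below formats that string in the shapes K3s documents for PostgreSQL and MySQL; the helper name, host, credentials, and database name are placeholders, and credentials containing special characters are not handled.

```python
def sql_datastore_endpoint(engine, user, password, host, database="k3s", port=None):
    """Format a K3s datastore endpoint for a managed SQL database."""
    if engine == "postgres":
        return f"postgres://{user}:{password}@{host}:{port or 5432}/{database}"
    if engine == "mysql":
        # The MySQL form wraps host and port in tcp(...).
        return f"mysql://{user}:{password}@tcp({host}:{port or 3306})/{database}"
    raise ValueError(f"unsupported engine: {engine!r}")


if __name__ == "__main__":
    # Placeholder values; a real deployment would read these from a secret store.
    print(sql_datastore_endpoint("postgres", "k3s", "CHANGE_ME", "db.example.internal"))
    print(sql_datastore_endpoint("mysql", "k3s", "CHANGE_ME", "db.example.internal"))
```

Every server in the cluster is started with the same endpoint (for example through `--datastore-endpoint`), so the servers share state through the managed database.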

So, where does that leave stock Kubernetes?

When To Use K8s

For everything else requiring heavy-duty clusters, K8s is the best choice. Note that while K3s is purpose-built for running Kubernetes in lean environments, such as edge devices and small bare-metal servers, Kubernetes (K8s) is a general-purpose container orchestrator. K8s also offers many more configuration options for various applications.

If you want more high-availability options, such as automatic failover and cluster-level redundancy, full-blown K8s may be the better choice. Likewise, K8s offers plenty more extensions, dependencies, and features, such as load balancing, auto-scaling, and service discovery.

In addition, K8s can handle more sophisticated applications, such as robust big data analytics and high-performance computing (HPC). K3s is better suited for resource-constrained environments, such as IoT devices and edge computing.

In the long run, both small and larger companies can use K8s to handle complex applications with multiple extensions, cloud provider add-ons, and external drivers to get things done.

How About Using Both K3s And K8s?

Some teams use both K8s and K3s, matching each workload to the right tier.

For example, in:

- Cloud + edge balance: K8s runs backend services in the cloud. K3s, on the other hand, handles edge workloads, such as retail kiosks or IoT gateways, providing local autonomy with a lighter footprint.

- Multi-cloud or hybrid sites: Organizations use K8s for central, production clusters and K3s in distributed locations — even without a shared network. They leverage K3s’s support for multicloud clusters via VPN mesh.

- Resource-constrained and fog environments: In hybrid setups, K3s powers resource-limited edge or fog nodes. K8s manages the cloud layer, delivering faster real-time processing and lower latency for IoT workloads.

2025 Suitability Matrix: K3s Vs. K8s By Use Case

Here’s a focused matrix comparing K3s and K8s across common 2025 deployment scenarios — from IoT and edge workloads to enterprise-scale production.

| Use Case | K3s | K8s |
| --- | --- | --- |
| IoT And Edge | Ideal for lightweight, low-power devices and real-time edge apps | Too heavy for resource-limited edge environments |
| Hybrid Deployments | Best for edge/fog layer (K3s) with K8s managing the core | Core orchestration layer in hybrid models |
| Dev/test Environments | Simple, fast configuration for local/dev workflows | Slower spin-up, more config-heavy |
| Enterprise Production | Not production-hardened for complex enterprise workloads | Best for large-scale, policy-rich production environments |
| Cost-Constrained Use | Minimal CPU/memory usage; great for cost-efficiency | Higher infra + ops overhead = more spend |

Meanwhile, new trends are reshaping what lightweight and alternative Kubernetes solutions can look like.

Rising Trends In Lightweight Kubernetes And Alternatives

These trends reflect a shift toward lighter, more targeted solutions — especially where resource constraints, speed, or operational simplicity are most critical.

- MicroK8s is gaining traction among developers for its simplicity in test and dev environments.

- K3d, a tool that runs K3s in Docker, is being used for fast local development cycles.

- In AI + Edge, K3s is used to deploy lightweight AI inference models at the edge, particularly in smart retail and surveillance applications.

- Wasm (WebAssembly) is also being explored as a more efficient alternative to containers. It potentially reduces the need for full Kubernetes control planes.

For more: 10 Top Kubernetes Alternatives (And Should You Switch?)

What Next: Control Kubernetes Costs With CloudZero

Choosing K3s vs. K8s will ultimately depend on your project requirements and available resources.

Alternatively, you can run both versions of Kubernetes for different purposes.

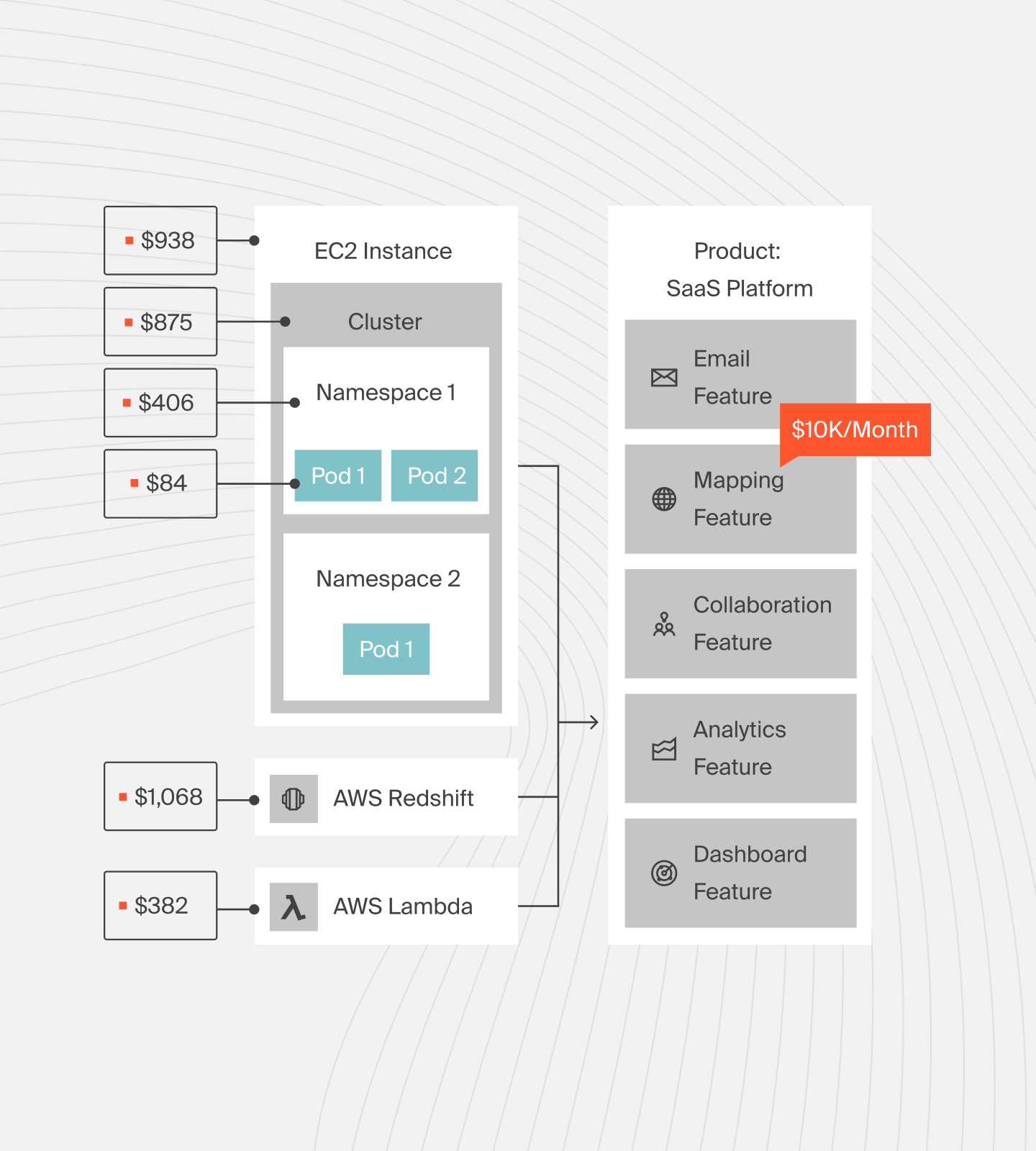

Either way, you still have to keep tabs on your Kubernetes costs, since they can rapidly spiral out of control. With CloudZero Kubernetes cost analysis, you can continuously track container costs by cluster, pod, or namespace.

For example, you can examine and understand how your cluster’s usage and cost fluctuate over time as you scale it up or down. Manual allocation rules are not required.

Besides knowing who and what affects your Kubernetes costs and why they are changing, you’ll also get timely alerts when an application consumes more cluster resources than expected, so you can act before it becomes a costly surprise.

to see the power of CloudZero for yourself.


Frequently Asked Questions About K3s And K8s

Is K3s faster than K8s?

Yes. In most cases, K3s will spin up clusters faster than K8s will. K3s is lightweight and requires fewer resources compared to K8s.

Should I use K3s for production?

Yes, but only if you are running a small deployment or the environment is rather resource-constrained, such as at the edge.

Does K3s use containerd or Docker?

Containerd, by default. You don’t need Docker for K3s, although K3s can use an existing Docker installation if you prefer. Upstream Kubernetes removed dockershim, the component that let the kubelet talk to dockerd, in version 1.24.

Should I use K3s or K3d?

K3d is not a smaller alternative to K3s; it is a wrapper that runs K3s clusters inside Docker, which makes it a good fit for local development and testing. Use K3s itself for real deployments, such as edge computing, IoT, and Raspberry Pi devices, where resources may be too constrained for K8s to run smoothly.

Should I use K3s or K8s?

That depends on your specific Kubernetes performance needs, resource availability, and business goals. Be sure to carefully review your unique requirements to select the ideal platform.