Looking to optimize your Kubernetes architecture?

While the word “Kubernetes” translates to “helmsman” (i.e., someone who steers a ship), Kubernetes ultimately functions more like an orchestra conductor than a ship captain.

Kubernetes (also known as K8s) simplifies container orchestration for engineers. This frees engineering teams to focus on innovation, reduce time to market, and optimize cloud spend. Yet things don’t always go smoothly for engineering teams using Kubernetes.

We get it. Kubernetes architecture can be challenging. So, to help you get the most out of your Kubernetes setup, this guide will cover Kubernetes architecture in full detail and list the best practices you can use to optimize your Kubernetes environment.

What Is Kubernetes Architecture?

Kubernetes is both a platform and a tool. Think of the Kubernetes architecture as the operating system (platform) for containerized applications.

Kubernetes is the most popular orchestration tool for deploying, scaling, networking, and maintaining containerized applications. Using Kubernetes, engineers can manage those activities at scale by automating how containers are coordinated and run across dynamic environments.

A Kubernetes cluster can run all major types of workloads, such as:

- Microservices (the most commonly deployed workload type on Kubernetes)

- Monolithic applications

- Stateless applications

- Stateful applications

- Batch jobs

- Services

It wasn’t always like that. For example, Kubernetes didn’t support stateful applications at first, but it now does through features such as StatefulSets and persistent volumes.

The Components Of Kubernetes Architecture

Kubernetes architecture comprises three major components:

- A control plane (master)

- One or more cluster nodes (workers, powerhouses, or workhorses)

- A persistent storage (etcd) that ensures cluster state consistency

Pods, services, networking, and a container runtime (such as containerd or CRI-O) are also part of the Kubernetes environment.

Kubernetes uses a client-server architecture. Many environments run a single control plane (master) node that acts as the point of contact for the cluster. It is possible, however, to run several control plane nodes for high availability.

Kubernetes also involves the concept of the desired state versus an actual state, which is one reason for implementing Kubernetes for workloads.

The desired state describes how you want your Kubernetes objects to look. You submit it through the Kubernetes API, either declaratively (for example, with manifest files) or imperatively (with direct commands). An object’s actual state is the state it is in at any given time.

Kubernetes continuously works to bring the actual state in line with the desired state. As an example, it can self-heal when issues arise to minimize downtime and keep operations as close to the desired state as possible.
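To make the desired-state idea concrete, here is a minimal sketch of a Deployment manifest. The name, labels, and image are placeholders chosen for illustration, not something from a real environment. The `replicas` field is the desired state: if a pod fails, the actual state drops below three and Kubernetes starts a replacement.

```yaml
# Hypothetical Deployment; name, labels, and image are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  labels:
    app: web
spec:
  replicas: 3              # desired state: three pods running at all times
  selector:
    matchLabels:
      app: web             # must match the pod template labels below
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27-alpine   # example image with a pinned tag
          ports:
            - containerPort: 80
```

Applying a file like this (for example, with `kubectl apply -f`) is the declarative route: you describe the end result and leave the steps to Kubernetes.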

Why Optimize Kubernetes?

However, Kubernetes isn’t perfect. First, Kubernetes architecture is ideal for large, distributed workloads. So, it may be helpful for growing startups and enterprises, but it would likely be overkill for a small business.

Second, Kubernetes can be complex to deploy, scale, and maintain, especially in-house, without experienced Kubernetes engineers to help set everything up correctly, fully utilize the features, and optimize Kubernetes costs.

There are several other Kubernetes architecture challenges, limitations, and disadvantages.

Yet, 70% of companies surveyed for the 2021 Kubernetes Adoption Report said Kubernetes was a top priority for their containerization needs.

So, how do you make the Kubernetes architecture work for you instead of against you?

11 Kubernetes Architecture Best Practices

Here are some best practices you’ll want to implement for your Kubernetes architecture:

1. Simplify Kubernetes management

Some engineers think configuring Kubernetes on their own is simple because they can quickly set up a cluster with minikube on a laptop. Several weeks or months later, they wish they had left the task to a cloud service or a third-party provider.

Creating your own Kubernetes cluster requires using virtual machines (VMs) from a suitable Infrastructure-as-a-Service (IaaS) provider to build control plane servers, services, and networking components.

You need to configure all the hardware, software, ingresses, and load balancers to communicate and work fluidly, be easy to monitor, and optimize Kubernetes costs while you’re at it.

Leave the infrastructure to managed services and save time, errors, and costs.

2. Upgrade to the latest Kubernetes version

As with most software, run a recent, supported Kubernetes version. Newer releases bring new features, security patches, performance improvements, and better overall usability.

3. Optimize Kubernetes monitoring

Engineers use Kubernetes monitoring to continually track a Kubernetes environment’s health, performance, security, and cost metrics.

For example, engineers and finance can determine where, when, and how Kubernetes costs are incurred. By monitoring Kubernetes properly, they can track costs, ensure high availability in service delivery, and more.

4. Increase Kubernetes cost visibility

Many companies actually see their Kubernetes spending increase when they adopt its architecture to save costs, improve time to market, or for other reasons. The most common reason for this is that they configure their architecture and then leave it to run without continuous and adequate Kubernetes cost management practices.

Without proper monitoring, your company may accrue a set of Kubernetes architectural decisions that lead to cost overruns.

It may not be difficult to fix a single overrun.

But discovering and fixing a chain of architecture decisions made over time can be a challenge that leads to margin losses. SaaS companies often find themselves in this situation, leading them to report weaker margins than needed.

Use a robust Kubernetes cost intelligence solution to see where, when, and how your Kubernetes infrastructure affects your company.

5. Ensure you can scale accordingly

In a growing organization, it is easy to think of Kubernetes’ auto-scaling capabilities only as a way to scale up rather than down. Expecting the company’s Kubernetes architecture to expand as demand grows is a positive mindset.

Nevertheless, ensuring your architecture can grow and shrink efficiently in response to usage is critical. Kubernetes components should not run idle and waste your time and dollars.

Horizontal Pod Autoscaler adjusts the number of pod replicas, and Cluster Autoscaler adjusts the number of nodes. Both respond dynamically as demand changes.
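As a rough illustration, the manifest below sketches a Horizontal Pod Autoscaler that scales both up and down. It assumes a hypothetical Deployment named `web`, a metrics source such as metrics-server running in the cluster, and CPU requests set on the target’s containers. The replica bounds and the 70% target are example values, not recommendations.

```yaml
# Hypothetical HPA; the target name and thresholds are example values.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web              # assumed Deployment to scale
  minReplicas: 2           # floor the workload shrinks to when demand is low
  maxReplicas: 10          # ceiling that caps spend when demand spikes
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # add pods above ~70% average CPU, remove below
```

Setting `minReplicas` deliberately low is what lets the workload shrink when usage drops, rather than idling at peak size.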

6. Keep workloads stateless

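By keeping workloads stateless, you can use spot instances. Spot instances can be reclaimed by the cloud provider at short notice, which is why some engineers dislike them. However, a stateless application can simply be rescheduled onto another node without losing data, which largely solves this problem.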

Reserve the cluster for differentiated services and store data outside it to ensure a smooth experience.
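One way this might look in practice is sketched below: a stateless Deployment, with no volumes attached, steered onto spot nodes. The `lifecycle: spot` label and taint are assumptions for illustration. Node labels and taints for spot capacity differ by provider and cluster setup, so check what your nodes actually carry.

```yaml
# Sketch only: the "lifecycle: spot" label and taint are assumed to have been
# applied to spot nodes by whoever manages the cluster. Names are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: worker
spec:
  replicas: 4              # several replicas so losing one node is tolerable
  selector:
    matchLabels:
      app: worker
  template:
    metadata:
      labels:
        app: worker
    spec:
      nodeSelector:
        lifecycle: spot    # hypothetical label marking spot nodes
      tolerations:
        - key: lifecycle   # hypothetical taint that keeps other pods off spot nodes
          operator: Equal
          value: spot
          effect: NoSchedule
      containers:
        - name: worker
          image: registry.example.com/worker:2.1.0   # placeholder image
```

Because the pods keep no local state, Kubernetes can recreate them elsewhere when a spot node is reclaimed.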

7. Keep security tight

Be sure to deploy Role-based Access Controls (RBACs) in a Kubernetes environment. RBACs enable you to determine which users have access to which resources in your Kubernetes cluster. Keep in mind that Kubernetes’ complexity leaves it vulnerable to compromise if not set up correctly.

There are two kinds of objects for defining RBAC permissions:

- ClusterRole for cluster-wide permissions, including non-namespaced resources

- Role for permissions on resources within a single namespace

To grant those permissions to a user, group, or service account, bind the role with a RoleBinding (within a namespace) or a ClusterRoleBinding (cluster-wide).
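For illustration, the sketch below defines a Role that allows read-only access to pods in one namespace and a RoleBinding that grants it to a user. The namespace `team-a`, the role names, and the user `jane` are hypothetical.

```yaml
# Hypothetical Role: read-only access to pods in the "team-a" namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: team-a
  name: pod-reader
rules:
  - apiGroups: [""]        # "" is the core API group, where pods live
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
# Hypothetical RoleBinding: grants the Role above to the user "jane".
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  namespace: team-a
  name: read-pods
subjects:
  - kind: User
    name: jane
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Starting with narrow verbs like these and widening only when needed keeps the blast radius small if an account is compromised.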

8. Reconsider your multi-cloud approach

Many companies contemplating Kubernetes architecture worry about vendor lock-in. They would like to take advantage of the benefits offered by different cloud providers.

However, multi-cloud implementations often add unnecessary complexity, reduce visibility, and increase costs owing to increased networking costs. So, if you can, work with a reputable, single vendor.

9. Use Alpine images vs. base images

Base container images tend to come with a lot of baggage. Many engineers can live without the libraries and packages that come with base images out-of-the-box. You can speed up builds, use less disk space, and pull images faster by using Alpine images, which can be up to ten times smaller.

The smaller the container image, the fewer things you have to worry about, including potential vulnerabilities. You can always add the specific packages and libraries your application needs over time.

10. Implement readiness and liveness probes

Probes are essential for maintaining application health and ensuring efficient resource utilization.

- Liveness probes: These checks determine if an application is running as expected. If a liveness probe fails, Kubernetes restarts the container, aiding recovery from failures such as deadlocks.

- Readiness probes: These checks assess whether an application can handle traffic. If a readiness probe fails, Kubernetes removes the pod from service endpoints, preventing it from receiving traffic until it’s ready.
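The two probe types above can be sketched in a single pod spec. The image, port, and the `/healthz` and `/ready` paths are assumptions for illustration; your application needs to expose whatever endpoints you point the probes at, and the timings are example values.

```yaml
# Hypothetical pod; image, port, and endpoint paths are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: api
spec:
  containers:
    - name: api
      image: registry.example.com/api:1.4.2
      ports:
        - containerPort: 8080
      livenessProbe:             # failure here restarts the container
        httpGet:
          path: /healthz
          port: 8080
        initialDelaySeconds: 10  # give the app time to start before checking
        periodSeconds: 10
        failureThreshold: 3      # restart after three consecutive failures
      readinessProbe:            # failure here removes the pod from service endpoints
        httpGet:
          path: /ready
          port: 8080
        periodSeconds: 5
```

Keeping the two endpoints separate lets an application report "alive but not ready" while it warms up or waits on a dependency.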

11. Remember the basics

Here are some fundamental Kubernetes best practices to keep in mind:

- Package your applications with Helm charts.

- Don’t restart failed containers yourself. Let the process crash cleanly with a non-zero exit code so Kubernetes can restart the container automatically.

- Use namespaces to divide cluster resources between teams that share the cluster. Combined with resource quotas, namespaces also keep a runaway process from hogging resources.

- Log everything to stderr and stdout.

- Configure resource requests and limits.

- Use a non-root user within a container.

- Let each process run in its own container.

- Set the file system to read-only.

- Use as many descriptive labels as needed.

- Avoid using the latest tag or no tag at all. Tag images with explicit versions so you always know which changes a running container includes.

- It is simpler and cheaper to use an Ingress to load-balance multiple services through a single endpoint than to create a Service of type LoadBalancer for each one.
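Several of the basics above fit into one pod spec. The following is a minimal sketch, assuming a hypothetical `api` image and a `team` label. The resource figures and user ID are example values to adapt, not recommendations.

```yaml
# Hypothetical pod combining several of the basics; all values are examples.
apiVersion: v1
kind: Pod
metadata:
  name: api
  labels:                              # descriptive labels
    app.kubernetes.io/name: api
    app.kubernetes.io/version: "1.4.2"
    team: payments                     # hypothetical team label
spec:
  containers:
    - name: api                        # one process per container
      image: registry.example.com/api:1.4.2   # explicit tag, not "latest"
      resources:
        requests:                      # what the scheduler reserves for the pod
          cpu: 250m
          memory: 256Mi
        limits:                        # hard ceiling for the container
          cpu: 500m
          memory: 512Mi
      securityContext:
        runAsNonRoot: true             # refuse to start as root
        runAsUser: 10001               # example non-root UID
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true   # read-only file system
```

With a read-only root file system, an application that needs scratch space would also need a writable volume mounted at that path.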

Discover How Changes To Your Kubernetes Architecture Impact Costs

CloudZero’s Kubernetes cost monitoring solution enables engineers to see where their Kubernetes spend goes and what drives it. Additionally, using CloudZero, engineering teams can measure costs by the metrics that matter most to their business, like cost per customer, team, feature, product, and more.

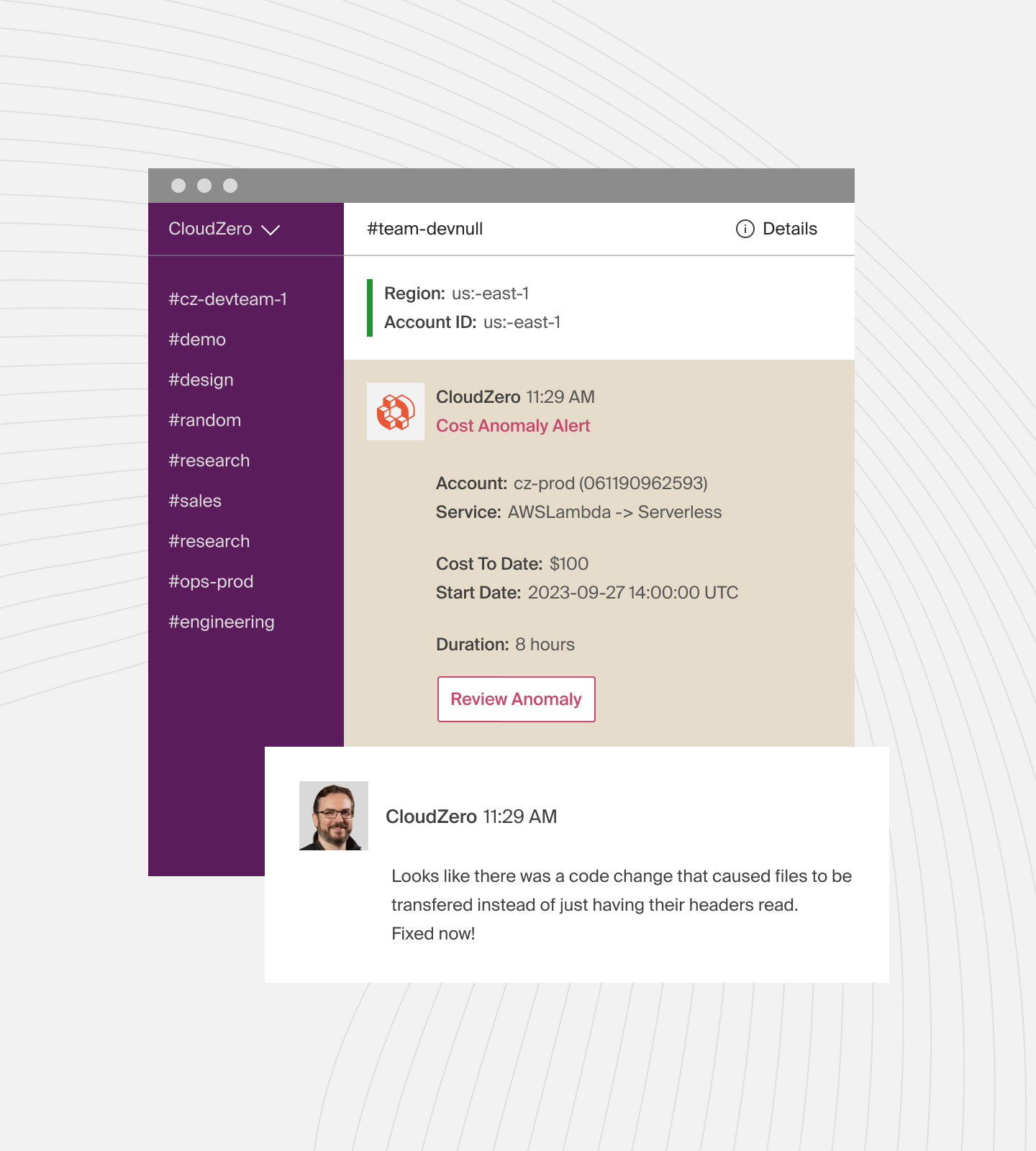

CloudZero even alerts teams of cost anomalies via Slack so engineers can address any code issues to prevent expensive cost overruns.

to see how CloudZero can help your organization measure and monitor your Kubernetes costs.
