Containers and serverless computing are two of the most popular methods for deploying applications. Both methods have their advantages and disadvantages. To choose the one that’s right for your business, you need to understand the pros and cons of managing your own containers versus using serverless services.

What Are Containers?

A container is a lightweight form of virtualization that packages an application together with everything it needs to run, such as system libraries and settings. That is, an application and all its dependencies are “packed into a dedicated box” that can run on any host with a compatible container runtime.

Containerized applications are portable and can be moved from one host to another as long as the host supports the container runtime. Containers are also more lightweight and start faster than virtual machines because they virtualize only the operating system, sharing the host’s kernel instead of bundling a full guest operating system with each application.

The most popular container orchestrators are Kubernetes, Amazon ECS, and Docker Swarm.

What Is Serverless?

Serverless computing is a type of architecture in which computing power or backend services are available on-demand. It is a practice of using managed services to avoid rebuilding things that already exist in the cloud.

In serverless systems, the user does not have to worry about the underlying infrastructure; instead, the serverless provider manages and maintains all the underlying servers so the user can focus on writing and deploying code.

The term “serverless” stems from the fact that the user is not aware of the physical servers even though they exist.

Serverless Vs. Containers: What Are The Similarities?

While containers and serverless systems are distinct technologies, they share some overlapping functionalities.

In both systems, applications are abstracted from the hosting environment, which makes them more lightweight than applications deployed on full virtual machines. Both also depend on orchestration to scale, although in serverless systems the provider handles it for you. Their primary function is the same: deploying application code.

Differences Between Containers And Serverless

Deployability

In general, serverless systems are easier to deploy because there’s less work involved on the part of the developer. For instance, in AWS, you can create a queue, database, or Lambda function and seamlessly connect them together. With a container service, deploying these same services is more taxing.
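To make the Lambda-plus-queue example concrete, here is a minimal sketch of a Python function that AWS Lambda could invoke with a batch of queue messages. The `Records` list and the `body` field follow the event shape that SQS delivers to Lambda; the `order_id` field and the sample payload are hypothetical and exist only for illustration.

```python
import json


def handler(event, context):
    """Entry point that Lambda would call with a batch of queue messages."""
    processed = []
    for record in event.get("Records", []):
        # Each queue record carries its payload as a JSON string in "body".
        order = json.loads(record["body"])
        # "order_id" is a hypothetical field used for illustration only.
        processed.append(order["order_id"])
    return {"processed": processed}


if __name__ == "__main__":
    # Simulate locally what the platform would pass in; no servers to set up.
    sample_event = {"Records": [{"body": json.dumps({"order_id": "A-1"})}]}
    print(handler(sample_event, None))
```

Notice that the code contains only business logic. Provisioning, wiring the queue to the function, and scaling are configuration choices made with the provider rather than code you maintain.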

If you’re using Kubernetes, for example, you’d have to figure out a suitable Kubernetes configuration, then choose namespaces, pods, and clusters before moving to the deployment stage. Overall, the serverless deployment process is more plug-and-play than containers because you only need to select the managed services provided by your cloud service provider.

Also, when properly configured, containers take a few seconds to deploy. Serverless functions are typically available almost as soon as the code is uploaded, so the application can go live right away.

Cost

In a like-for-like comparison, serverless managed services will most likely be more expensive than managing your own containers because you’re offloading maintenance and management of services to the vendor.

But a head-to-head comparison does not give the full picture. The important question to ask when using containers is: What is the cost of the underlying services that power those containers?

This is because containers run on other services under the covers, and those services may be idle most of the time. So, are you using pay-as-you-go pricing for these underlying services, or are you paying even when you are not using them?

If you’re running the same workloads all the time, then container services will almost certainly be cheaper than managed serverless services. However, if your workloads are dynamic and you don’t manage your containers effectively, you may end up paying for idle resources, which means more waste.
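The trade-off between steady and variable workloads can be sketched with a simple cost model. Everything here is an assumption made for illustration: the function names, the flat per-million-requests serverless price, the hourly instance rate, and the 730-hour month are hypothetical and do not reflect any provider’s actual pricing.

```python
HOURS_PER_MONTH = 730  # assumed average number of hours in a month


def serverless_monthly_cost(requests, price_per_million):
    """Pay-as-you-go: cost grows with the number of requests served."""
    return requests / 1_000_000 * price_per_million


def container_monthly_cost(instances, hourly_rate):
    """Always-on hosts: cost is fixed whether they are busy or idle."""
    return instances * hourly_rate * HOURS_PER_MONTH


def break_even_requests(instances, hourly_rate, price_per_million):
    """Monthly request volume at which both options cost the same."""
    fixed = container_monthly_cost(instances, hourly_rate)
    return fixed / price_per_million * 1_000_000


def cheaper_option(requests, instances, hourly_rate, price_per_million):
    """Name the cheaper option for a given monthly request volume."""
    serverless = serverless_monthly_cost(requests, price_per_million)
    containers = container_monthly_cost(instances, hourly_rate)
    return "serverless" if serverless < containers else "containers"


if __name__ == "__main__":
    # Hypothetical inputs: 2 hosts at $0.10/hour vs. $5 per million requests.
    for volume in (1_000_000, 100_000_000):
        print(volume, cheaper_option(volume, 2, 0.10, 5.0))
```

With these made-up numbers, low or spiky traffic favors pay-as-you-go pricing, while sustained high traffic favors the fixed cost of always-on hosts. The break-even point shifts with real prices, so substitute your own figures before drawing conclusions.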

Maintenance

In serverless systems, maintenance of servers and any underlying infrastructure is offloaded to the vendor. With containers, however, you have to manage your application’s backend infrastructure yourself and ensure it is patched regularly.

Host environments

Containerized applications can run on any modern Linux server and certain versions of Windows. Serverless systems, on the other hand, are tied to the provider’s platform, which is usually cloud-based.

Scalability

In a serverless architecture, the backend scales automatically to meet the demand. In addition, services can easily be turned on and off without performing any additional work. In container-based architecture, the developer has to plan for scaling by procuring the server capacity required to run the containers.
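The planning step for containers can be sketched as a small capacity calculation. This is a simplified illustration under stated assumptions: the function name, the throughput per container, the number of containers per host, and the 20% headroom are all hypothetical values, not recommendations.

```python
import math


def hosts_needed(peak_rps, rps_per_container, containers_per_host, headroom_pct=20):
    """Estimate how many hosts to provision for an expected traffic peak.

    peak_rps: expected peak requests per second
    rps_per_container: requests per second one container can serve (assumed)
    containers_per_host: how many containers fit on one host (assumed)
    headroom_pct: extra capacity kept in reserve, as a whole percentage
    """
    # Scale the peak by the headroom, then round up to whole containers.
    containers = math.ceil(
        peak_rps * (100 + headroom_pct) / (100 * rps_per_container)
    )
    # Round up again to whole hosts, since hosts cannot be partially bought.
    return math.ceil(containers / containers_per_host)


if __name__ == "__main__":
    # Hypothetical peak of 1,000 requests per second.
    print(hosts_needed(peak_rps=1000, rps_per_container=50, containers_per_host=10))
```

Because both steps round up, capacity provisioned for the peak sits partly idle whenever traffic is lower. That idle capacity is the gap a serverless backend closes by scaling on demand.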

When To Use Serverless Functions Vs. Containers

Overall, containers are better if you want complete control over your application environment. Containers are particularly useful when migrating monolithic legacy applications to the cloud because you can easily replicate the application’s running environment.

Serverless architecture works well for teams that want to write and release applications quickly without the responsibility of managing and maintaining servers or underlying infrastructure. You should also consider serverless functions if you have unpredictable workloads that will be difficult to smoothly scale up or down in a container-based system.

That said, another option is to create a hybrid architecture where some functions are deployed in containers and some run on serverless, so it doesn’t have to be one or the other. What’s important is that your applications run in the most suitable environment for their respective use cases.

Controlling Your Cloud Spend With CloudZero

Whether you’re using containers, serverless services, or both, understanding your unit costs is crucial for building profitable software.

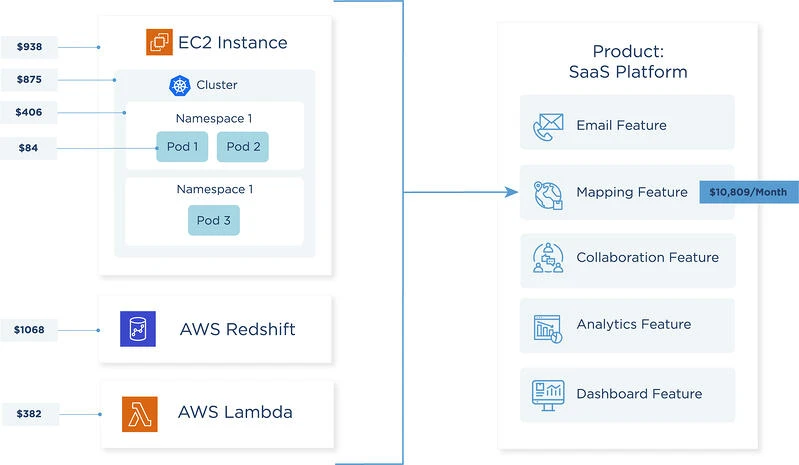

The challenge with running Kubernetes on AWS, for instance, is that costs are hidden behind your AWS bill, so it’s difficult to understand how your container costs break down at the pod level. On the other hand, the on-demand nature of serverless services means that costs can quickly spiral out of control if services are not properly monitored.

CloudZero’s cost intelligence platform provides a solution for these problems.

With Kubernetes, for example, CloudZero separates idle time from actual compute time so you can identify when your Kubernetes clusters are not doing any work. CloudZero also breaks down Kubernetes costs in the context of your business so you can roll up costs to products, features, or teams.

For managed services, CloudZero identifies those that are costing a lot of money and provides anomaly detection for those services.

While on-demand cloud services have infinite scale, you do not have an infinite wallet. CloudZero helps you make the most of your cloud services, whether you’re running containers or deploying serverless applications. To learn more about how CloudZero helps you keep your cloud spend under control, request a demo.