Imagine you have an API service composed of multiple microservices. Traffic fluctuates: sometimes light, sometimes spiking. Without Fargate, you’d have to manage EC2 instances, autoscaling, patching, and more. With Fargate, you define each microservice as a task (CPU and memory, container image, and network rules), and AWS schedules and runs those tasks as needed. The result: faster deployment, lower ops overhead, and smooth scaling.
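Here is a minimal sketch of an ECS task definition for one Fargate microservice. It is built as a Python dict in the shape the ECS `RegisterTaskDefinition` API accepts. The service name, image URI, port, and sizes are hypothetical placeholders. A real definition usually also needs an `executionRoleArn` so the task can pull from a private ECR repository and ship logs.

```python
import json


def fargate_task_definition(family: str, image: str, cpu: str, memory: str,
                            container_port: int) -> dict:
    """Build a RegisterTaskDefinition-style request body for a Fargate task.

    All argument values used below are hypothetical placeholders.
    """
    return {
        "family": family,
        # Fargate tasks declare FARGATE compatibility...
        "requiresCompatibilities": ["FARGATE"],
        # ...and use awsvpc networking, so each task gets its own network interface.
        "networkMode": "awsvpc",
        # Task-level CPU units (1024 = 1 vCPU) and memory in MiB, passed as strings.
        "cpu": cpu,
        "memory": memory,
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
            }
        ],
    }


if __name__ == "__main__":
    td = fargate_task_definition(
        family="orders-api",  # hypothetical microservice name
        image="123456789012.dkr.ecr.us-east-1.amazonaws.com/orders-api:1.0",  # hypothetical ECR URI
        cpu="256",     # 0.25 vCPU
        memory="512",  # 512 MiB
        container_port=8080,
    )
    print(json.dumps(td, indent=2))
```

The sketch makes no AWS calls. You could submit the result with boto3's `ecs.register_task_definition(**td)`, or save it as JSON for `aws ecs register-task-definition --cli-input-json`.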

Yet, despite its robustness, Fargate is not for every team. For example, you can’t run Fargate on Azure, Google Cloud, or on-premises.

The usual AWS Fargate alternatives are AWS EKS, Azure AKS, and Google GKE Autopilot. There are more. But first, here is why many teams still love Fargate, and for good reasons.

What Are The Benefits Of AWS Fargate?

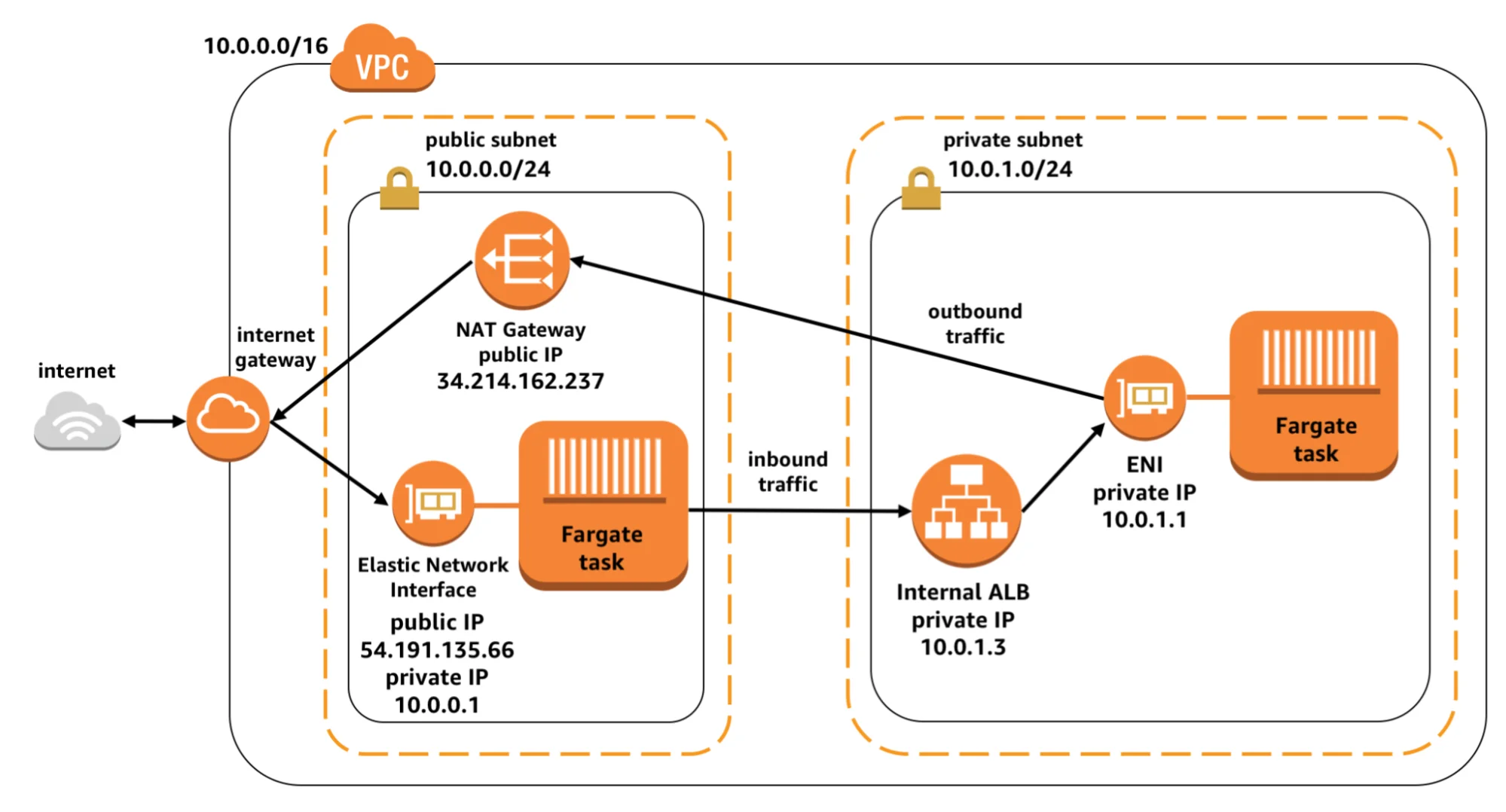

Yes, Fargate is serverless, but it’s not your typical black-box platform. It’s built for enterprise teams that need control as much as convenience. It runs containers inside your own VPC with your IAM roles and security groups. It pulls images from ECR and can mount data from EFS. That balance gives teams the simplicity of serverless along with the governance, security, and visibility that enterprise environments demand.

Fargate integrates directly with AWS services like CloudWatch, CloudTrail, IAM, ECS, ECR, and EFS, eliminating the need for plugins or add-ons. Competing platforms usually rely on extra configuration or third-party tools to get similar security, monitoring, or storage integrations.

Fargate also lets you set the CPU and memory for each task from a range of supported combinations, with no nodes or VMs to manage. AWS provisions and scales the underlying capacity automatically. You’re billed per second for those allocations, not for idle servers. That eliminates most over-provisioning waste and node planning, which node-based Kubernetes setups still require, including standard AKS and GKE clusters.
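The sketch below illustrates per-second billing by estimating the charge for a single task run from its vCPU and memory allocation. The rates are hypothetical placeholders, not current AWS prices. The 60-second minimum billable duration is an assumption; check it against the Fargate pricing page for your platform.

```python
import math


def fargate_task_cost(
    vcpu: float,
    memory_gb: float,
    runtime_seconds: float,
    price_per_vcpu_hour: float,
    price_per_gb_hour: float,
    minimum_seconds: int = 60,  # assumed minimum billable duration
) -> float:
    """Estimate what one task run costs under per-second billing.

    Runtime is rounded up to the next whole second, then the assumed
    minimum billable duration is applied.
    """
    billable_seconds = max(math.ceil(runtime_seconds), minimum_seconds)
    # vCPU and memory are priced separately; combine them into one hourly rate.
    hourly_rate = vcpu * price_per_vcpu_hour + memory_gb * price_per_gb_hour
    return hourly_rate * billable_seconds / 3600


# Hypothetical placeholder rates, NOT real AWS prices.
VCPU_HOUR = 0.04
GB_HOUR = 0.004

if __name__ == "__main__":
    # A 1 vCPU / 2 GB task that runs for 90.2 seconds is billed for 91 seconds.
    print(f"{fargate_task_cost(1, 2, 90.2, VCPU_HOUR, GB_HOUR):.6f}")
    # A 10-second task is still billed for the assumed 60-second minimum.
    print(f"{fargate_task_cost(1, 2, 10, VCPU_HOUR, GB_HOUR):.6f}")
```

The point of the model is that cost tracks allocation multiplied by runtime. A task sized at 2 GB pays for 2 GB for its whole run, even if it only touches a fraction of that memory.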

Other advantages of AWS Fargate include:

- Hybrid Kubernetes flexibility (via Fargate profiles). On EKS, you can run selected pods on Fargate while keeping others on managed EC2 nodes. This lets teams isolate sensitive or variable workloads without running separate clusters, a hybrid option built into AWS’s implementation.

- Compliance-ready foundation. Fargate inherits AWS infrastructure compliance for standards like HIPAA, PCI DSS, SOC, and FedRAMP. It simplifies audits by removing host-level responsibilities, though application-level compliance remains your responsibility.

- Persistent storage with EFS. You can attach Amazon EFS volumes directly to Fargate tasks or pods, enabling stateful workloads. This feature, supported from platform version 1.4.0, is uncommon among serverless container platforms. Learn more about EBS vs EFS here.

- Strong CI/CD integrations. Fargate works smoothly with AWS CodePipeline, GitHub Actions, GitLab CI, CircleCI, and Jenkins through ECS or EKS deployment steps. These official integrations simplify container delivery compared to the custom scripting often required on other serverless platforms.
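The Fargate-profile idea works like a selector match. A pod goes to Fargate when its namespace matches a profile selector and the pod carries every label that selector lists. The sketch below is a simplified model with hypothetical profile names. It uses exact string matching only and returns the first matching profile, so it doesn't capture every rule EKS applies when profiles overlap.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Selector:
    """One Fargate profile selector: a namespace plus optional required labels."""
    namespace: str
    labels: dict[str, str] = field(default_factory=dict)


@dataclass
class FargateProfile:
    name: str
    selectors: list[Selector]


def selector_matches(selector: Selector, namespace: str, pod_labels: dict[str, str]) -> bool:
    # The namespace must match exactly, and the pod must carry every selector label.
    if selector.namespace != namespace:
        return False
    return all(pod_labels.get(key) == value for key, value in selector.labels.items())


def matching_profile(profiles: list[FargateProfile], namespace: str,
                     pod_labels: dict[str, str]) -> Optional[str]:
    """Return the first profile with a matching selector, or None (pod stays on EC2 nodes)."""
    for profile in profiles:
        if any(selector_matches(s, namespace, pod_labels) for s in profile.selectors):
            return profile.name
    return None


if __name__ == "__main__":
    profiles = [
        FargateProfile(
            name="burst-workloads",  # hypothetical profile
            selectors=[Selector("batch"), Selector("api", {"compute": "fargate"})],
        )
    ]
    # Matches the namespace-only "batch" selector.
    print(matching_profile(profiles, "batch", {"app": "report"}))
    # In "api" but missing the compute=fargate label, so no profile matches.
    print(matching_profile(profiles, "api", {"app": "web"}))
```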
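On ECS, a task definition declares an EFS file system under `volumes` with an `efsVolumeConfiguration` block, and each container mounts it through `mountPoints`. The hypothetical helper below adds both to an existing task definition dict. The file system ID and paths are placeholders. Running this on Fargate also assumes platform version 1.4.0 or later and security groups that allow NFS traffic (port 2049) to the EFS mount targets.

```python
import copy
import json


def add_efs_volume(task_def: dict, volume_name: str, file_system_id: str,
                   container_path: str) -> dict:
    """Return a copy of task_def with an EFS volume mounted into every container."""
    updated = copy.deepcopy(task_def)  # leave the caller's dict untouched
    updated.setdefault("volumes", []).append(
        {
            "name": volume_name,
            "efsVolumeConfiguration": {
                "fileSystemId": file_system_id,
                "rootDirectory": "/",
                # Encrypt NFS traffic between the task and EFS.
                "transitEncryption": "ENABLED",
            },
        }
    )
    for container in updated.get("containerDefinitions", []):
        container.setdefault("mountPoints", []).append(
            {"sourceVolume": volume_name, "containerPath": container_path, "readOnly": False}
        )
    return updated


if __name__ == "__main__":
    base = {
        "family": "reports-worker",  # hypothetical
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",
        "cpu": "512",
        "memory": "1024",
        "containerDefinitions": [{"name": "worker", "image": "example/worker:1.0", "essential": True}],
    }
    print(json.dumps(add_efs_volume(base, "shared-data", "fs-12345678", "/mnt/data"), indent=2))
```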

Related read: 50+ CI/CD Tools to Streamline DevOps Workflows

Again, AWS Fargate is not for every team. Here’s why:

Why Consider AWS Fargate Alternatives

Fargate’s biggest drawback is cost visibility. Because it abstracts the infrastructure, teams can’t easily see which services, environments, or customers drive costs. AWS bills Fargate usage as vCPU and memory charges on ECS tasks or EKS pods. That leaves finance and engineering blind to where the money goes and how it ties to product value.

CloudZero closes that gap. It ingests Fargate spend from AWS and maps it back to real business context: cost per feature, customer, or environment. Teams can track unit economics, detect anomalies in real time, and align finance and engineering around a shared cost view. In short, CloudZero gives Fargate users the clarity and control that AWS’s native tools can’t.

Other AWS Fargate limitations include:

- Fargate doesn’t support GPU-based containers or custom hardware acceleration. ML and AI teams running inference workloads often move to GKE, AKS, or EC2 GPU instances.

- Fargate tasks can take 30–90 seconds to start, especially with large images. Teams running event-driven or real-time microservices often find that Cloud Run or Lambda scale faster (cold starts are often measured in milliseconds).

- When used with EKS, Fargate abstracts Kubernetes so heavily that many K8s-native features, such as DaemonSets, privileged containers, and host networking, aren’t supported. Alternatives such as GKE and AKS deliver the full Kubernetes experience with managed control planes and extensive tooling.

- Fargate isn’t available in every AWS region, Local Zone, or Outpost, and on EKS, Fargate pods must be placed in private subnets. Azure AKS and Google GKE both offer regional and multi-zone support out of the box, improving proximity and availability.

- While Fargate supports EFS, block storage is limited (EKS pods on Fargate can’t mount EBS volumes), and it lacks advanced persistent volume provisioning. Stateful applications such as databases and caches are difficult to run efficiently.
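A quick pre-flight check over a manifest can show which of these limitations would affect a given Kubernetes workload. The function below is a hypothetical, partial sketch. It flags DaemonSets, `hostNetwork`, privileged containers, `hostPort`, and GPU limits requested through the common `nvidia.com/gpu` resource name. It reads manifests as already-parsed Python dicts rather than YAML.

```python
def fargate_blockers(manifest: dict) -> list[str]:
    """Return reasons a manifest can't run on EKS Fargate (partial, illustrative check)."""
    problems: list[str] = []
    if manifest.get("kind") == "DaemonSet":
        problems.append("DaemonSets aren't supported on Fargate")
    spec = manifest.get("spec", {})
    # Deployments, DaemonSets, and Jobs wrap the pod spec in a template;
    # a bare Pod carries it directly. CronJobs nest deeper and aren't handled here.
    pod_spec = spec.get("template", {}).get("spec", spec)
    if pod_spec.get("hostNetwork"):
        problems.append("hostNetwork isn't supported on Fargate")
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        if container.get("securityContext", {}).get("privileged"):
            problems.append(f"{name}: privileged containers aren't supported")
        if any("hostPort" in port for port in container.get("ports", [])):
            problems.append(f"{name}: hostPort isn't supported")
        limits = container.get("resources", {}).get("limits", {})
        if "nvidia.com/gpu" in limits:
            problems.append(f"{name}: GPUs aren't available on Fargate")
    return problems


if __name__ == "__main__":
    # Hypothetical node-monitoring agent: a typical DaemonSet that needs host access.
    agent = {
        "kind": "DaemonSet",
        "spec": {"template": {"spec": {
            "hostNetwork": True,
            "containers": [{
                "name": "node-agent",
                "securityContext": {"privileged": True},
                "ports": [{"containerPort": 9100, "hostPort": 9100}],
            }],
        }}},
    }
    for problem in fargate_blockers(agent):
        print(problem)
```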

Given these limitations, it’s worth asking: which platforms provide similar benefits to Fargate — and where do they do it better?

Top 9 Fargate Alternatives

Here are nine platforms, both serverless and non-serverless, that run containers differently than Fargate does and, for some teams, more effectively.

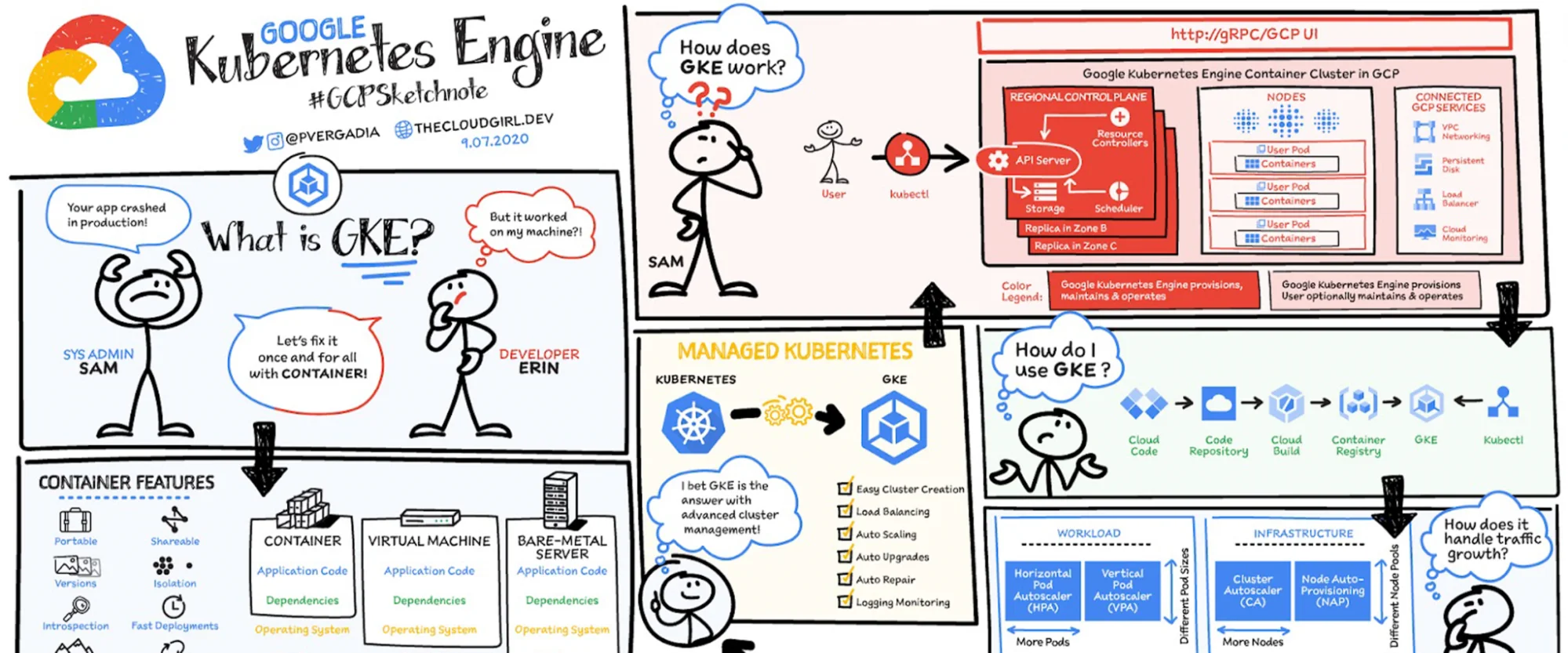

1. Google Kubernetes Engine (GKE)

Best for: Teams needing more control and lower costs at scale.

GKE is Google Cloud’s managed Kubernetes service, and in Autopilot mode it is the closest counterpart to Fargate. But GKE gives engineers more control. You can combine node types, use Spot (preemptible) VMs, and fine-tune autoscaling to cut costs.

It also supports full Kubernetes features, including DaemonSets, privileged pods, and custom networking, which are limited in Fargate. Many teams choose GKE for its faster scaling, flexible pricing, and deep ties to Google’s AI and data tools. In short, it offers the same container ease as Fargate, with more room to optimize. Get a complete GKE pricing guide here.

2. VMware Tanzu Platform (Broadcom)

Best for: Enterprises with big VMware/vSphere footprints that want first-class hybrid/on-premise Kubernetes.

Tanzu wins where Fargate can’t play. It gives you complete Kubernetes control across on-prem vSphere, VMware Cloud on AWS, and public clouds.

With Tanzu, teams can manage clusters, policies, and security from a single place using Tanzu Mission Control. It supports DaemonSets, privileged pods, GPUs, and custom networking, all of which are restricted in Fargate. Its Tanzu Kubernetes Grid (TKG) and Tanzu Hub components deliver robust fleet management, compliance, and deep infrastructure control, which makes Tanzu a good fit for hybrid or regulated environments.

Note: Tanzu isn’t serverless. It automates Kubernetes and abstracts much of the infrastructure work, but you still manage the underlying compute.

3. Portainer

Best for: Teams that want a simple, visual way to run containers across on-prem, cloud, and edge.

Portainer is a management layer, not a compute service like Fargate. It sits on top of Docker, Docker Swarm, Kubernetes, Podman, and Azure Container Instances (ACI).

You manage everything from one UI or API, including multiple environments through the Portainer Agent and Edge Agent. Unlike Fargate’s serverless model, Portainer leaves you in control of your own nodes and costs.

4. Red Hat OpenShift

Best for: Enterprises needing full Kubernetes control, built-in DevOps tooling, and hybrid/on-premise deployment.

OpenShift also delivers what Fargate doesn’t: a complete Kubernetes stack with integrated CI/CD, monitoring, logging, RBAC, and enterprise-grade support. Because it runs on your own or hybrid infrastructure, you control nodes, networking, storage, and compliance. You can customize workloads, run privileged containers, and fine-tune resource usage for efficiency.

Read more: Best OpenShift Alternatives For Today’s DevOps Teams

5. Azure Kubernetes Service (AKS)

Best for: Teams using Microsoft Azure.

AKS also gives you more control than Fargate. With virtual nodes backed by Azure Container Instances, you can scale pods serverlessly while core workloads run on VM node pools. You choose node sizes, use Spot or GPU nodes, and define storage classes, all of which Fargate limits. This makes AKS a strong fit for cost-focused, high-performance, or advanced Kubernetes deployments.

But for unpredictable workloads, AKS doesn’t always come out cheaper. Use these practical tips to optimize your AKS costs.

Related read: Curious how to scale Kubernetes without overspending? Check out Scaling Kubernetes On A Budget to see tips for cutting costs with AKS and EKS.

6. Oracle Cloud Infrastructure (OCI) Container Instances

Best for: Teams already using Oracle Cloud.

With OCI Container Instances, you choose CPU and memory, connect directly to Virtual Cloud Networks (VCNs), and control security and placement.

It’s mostly suitable for teams that need Oracle Database or analytics integration, or must keep workloads in certified regions. You can also pair it with Oracle Kubernetes Engine (OKE) for a smooth path from single containers to full clusters. While not multi-cloud, it fits easily into hybrid setups across on-prem and OCI environments.

7. Red Hat OpenShift on IBM Cloud

Best for: Enterprises and teams that want a managed OpenShift platform built into IBM Cloud.

Red Hat OpenShift on IBM Cloud provides the complete OpenShift experience without the infrastructure management. You get all the key features, including custom networking, stateful workloads, operators, and advanced RBAC, while IBM takes care of provisioning, patching, and uptime SLAs. Because it’s part of IBM Cloud, it works seamlessly with IBM IAM, monitoring, security tools, and Watson AI services.

8. Rancher

Best for: Teams managing Kubernetes clusters across multiple clouds or on-prem environments.

Rancher gives organizations the multi-cloud freedom Fargate can’t. It provides a single control plane to deploy and manage Kubernetes clusters on AWS, Azure, GCP, or in data centers, with complete visibility and policy control. Because workloads run on nodes you control, it’s more cost-efficient for steady workloads and avoids serverless premiums. That makes it ideal for enterprises that need hybrid consistency instead of being tied to AWS.

Related read: Top Rancher Alternatives

9. DigitalOcean

Best for: Small teams or startups that want fast container deployments and predictable pricing.

DigitalOcean offers a simpler, cheaper developer experience. Its App Platform enables you to deploy containers and web apps without advanced DevOps configurations. Its Managed Kubernetes also provides full control when you need it.

What next?

Each of these platforms runs containers in its own way. The real question isn’t which one is best overall, but which one fits your team without boxing you into a single vendor.

How To Choose A Container Service (And Avoid Vendor Lock-In)

Here’s a simple checklist to help you choose the right platform and keep your freedom to migrate later.

- Know what you’re optimizing for. Rank your priorities: cost, control, and speed. Serverless is faster to launch; node-based gives more control and lower cost at steady load.

- Keep your containers portable. Use OCI-compliant images so the same container runs on any platform — AWS, Azure, GCP, or on-premise.

- Stay observability-neutral. Use OpenTelemetry for traces, metrics, and logs. You can change backends without touching your code.

- Make infrastructure cloud-agnostic. Use Terraform or Crossplane so you define and manage infrastructure the same way across cloud providers and on-premise, even though the provider-specific resources differ.

- Design for hybrid and multi-cluster early. Learn Kubernetes federation and placement policies. It’s easier to stay flexible than to rebuild later.

- Avoid vendor-specific APIs. Stick to certified Kubernetes APIs and open standards. Proprietary features make cloud migration difficult.

- Quick rule of thumb. Need GPUs, DaemonSets, or custom networking? Choose node-based. Need speed and zero ops? Go serverless.

- Use portable security controls. Apply OPA/Gatekeeper policies, image signing, and CIS benchmarks that work across platforms — not just one vendor’s tool.

- Plan an exit upfront. Write down how you’d move containers, manifests, secrets, and telemetry to another platform. Test it with a small service.

- Compare real costs. Test both models: serverless can be cheaper for bursty traffic, but node pools or Spot VMs usually win for steady workloads.
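The "compare real costs" item comes down to simple arithmetic. The sketch below compares one month of serverless spend, billed only while work runs, against an always-on node sized for the same work. It also computes the break-even utilization. The hourly rates are hypothetical placeholders. The model ignores real-world factors such as Spot discounts, scaling granularity, and minimum charges.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def monthly_costs(busy_fraction: float, serverless_rate: float,
                  node_rate: float) -> tuple[float, float]:
    """Return (serverless, node) monthly cost for the same capacity.

    Simplifying assumptions: serverless is billed only for the busy fraction of
    the month, while the node is sized for the work and runs 24/7.
    """
    serverless = busy_fraction * HOURS_PER_MONTH * serverless_rate
    node = HOURS_PER_MONTH * node_rate
    return serverless, node


def break_even_utilization(serverless_rate: float, node_rate: float) -> float:
    """Utilization above which the always-on node becomes the cheaper option."""
    return node_rate / serverless_rate


if __name__ == "__main__":
    # Hypothetical hourly rates for equivalent capacity, NOT real prices.
    serverless_rate, node_rate = 0.05, 0.03
    print(f"break-even utilization: {break_even_utilization(serverless_rate, node_rate):.0%}")
    for label, busy in [("bursty", 0.2), ("steady", 0.9)]:
        s, n = monthly_costs(busy, serverless_rate, node_rate)
        print(f"{label}: serverless ${s:.2f} vs node ${n:.2f}")
```

With these placeholder rates, the node wins once the workload is busy more than 60% of the time. A bursty service busy 20% of the time is cheaper on serverless.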

Then…

Simplify Your Container Deployment Workflow With Accurate Cost Monitoring

Now, here is the thing. No matter which container model you use, serverless or node-based, you’re still renting someone else’s compute, storage, and network. Every container, pod, or function consumes real cloud resources, directly impacting your bill.

In serverless, costs hide in usage patterns. You’re billed per second or per request, so inefficiencies such as constant cold starts or high data transfer volumes can quietly inflate spend. You might scale instantly — and overspend instantly too.

In a node-based system, waste shows up differently. You pay for entire VMs or nodes, even when they’re underutilized. Over-provisioned clusters, idle DaemonSets, and poorly sized workloads can leave hundreds or thousands of dollars in unused capacity each month.
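The sketch below puts a number on node-based waste by estimating the monthly cost of vCPU capacity that no workload has requested. The cluster size, hourly node price, and request total are hypothetical. The estimate considers CPU requests only; in practice, memory or other resources may be what actually limits how tightly workloads pack.

```python
def idle_capacity_cost(node_count: int, vcpu_per_node: float, node_hourly_price: float,
                       requested_vcpu: float, hours: float = 730) -> float:
    """Estimate spend on node vCPU capacity that no workload has requested (CPU only)."""
    total_vcpu = node_count * vcpu_per_node
    # Clamp at zero: overcommitted requests don't produce negative waste.
    idle_fraction = max(0.0, 1 - requested_vcpu / total_vcpu)
    return node_count * node_hourly_price * hours * idle_fraction


if __name__ == "__main__":
    # Hypothetical pool: 10 nodes x 8 vCPU at a placeholder $0.40/hour per node,
    # with workloads requesting 48 of the 80 vCPUs.
    print(f"${idle_capacity_cost(10, 8, 0.40, 48):,.2f} per month on idle capacity")
```

With these placeholder numbers, 40% of the pool’s vCPUs sit unrequested, which works out to $1,168 a month.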

Either way, the more dynamic your containers, the easier it is to lose visibility. Costs shift across clusters, namespaces, teams, or features. Without granular monitoring, you only see a big number at the end of the month, not where or why it’s growing.
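One common way to restore that visibility is to split shared node costs across namespaces (or teams) in proportion to what each one requests. The sketch below does this by CPU requests only. It is a generic showback technique for illustration, not CloudZero’s method, and the namespaces and dollar figures are hypothetical.

```python
from collections import defaultdict


def allocate_by_namespace(shared_cost: float,
                          pods: list[tuple[str, float]]) -> dict[str, float]:
    """Split a shared node bill across namespaces in proportion to CPU requests.

    pods: (namespace, requested_vcpu) pairs. Idle capacity is spread across
    namespaces in the same proportions.
    """
    requested: dict[str, float] = defaultdict(float)
    for namespace, vcpu in pods:
        requested[namespace] += vcpu
    total = sum(requested.values())
    if total == 0:
        return {}
    return {ns: shared_cost * vcpu / total for ns, vcpu in requested.items()}


if __name__ == "__main__":
    # Hypothetical month: $1,000 of node cost shared by three namespaces.
    pods = [("checkout", 2.0), ("checkout", 2.0), ("search", 4.0), ("batch", 2.0)]
    for ns, cost in allocate_by_namespace(1000.0, pods).items():
        print(f"{ns}: ${cost:.2f}")
```

Here checkout and search each request 4 of the 10 vCPUs and get $400 each, and batch gets $200. Spreading idle capacity proportionally is a design choice; some teams report it as a separate line item instead.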

That’s why teams using any container model need cost intelligence, not just cloud billing.

Enter CloudZero

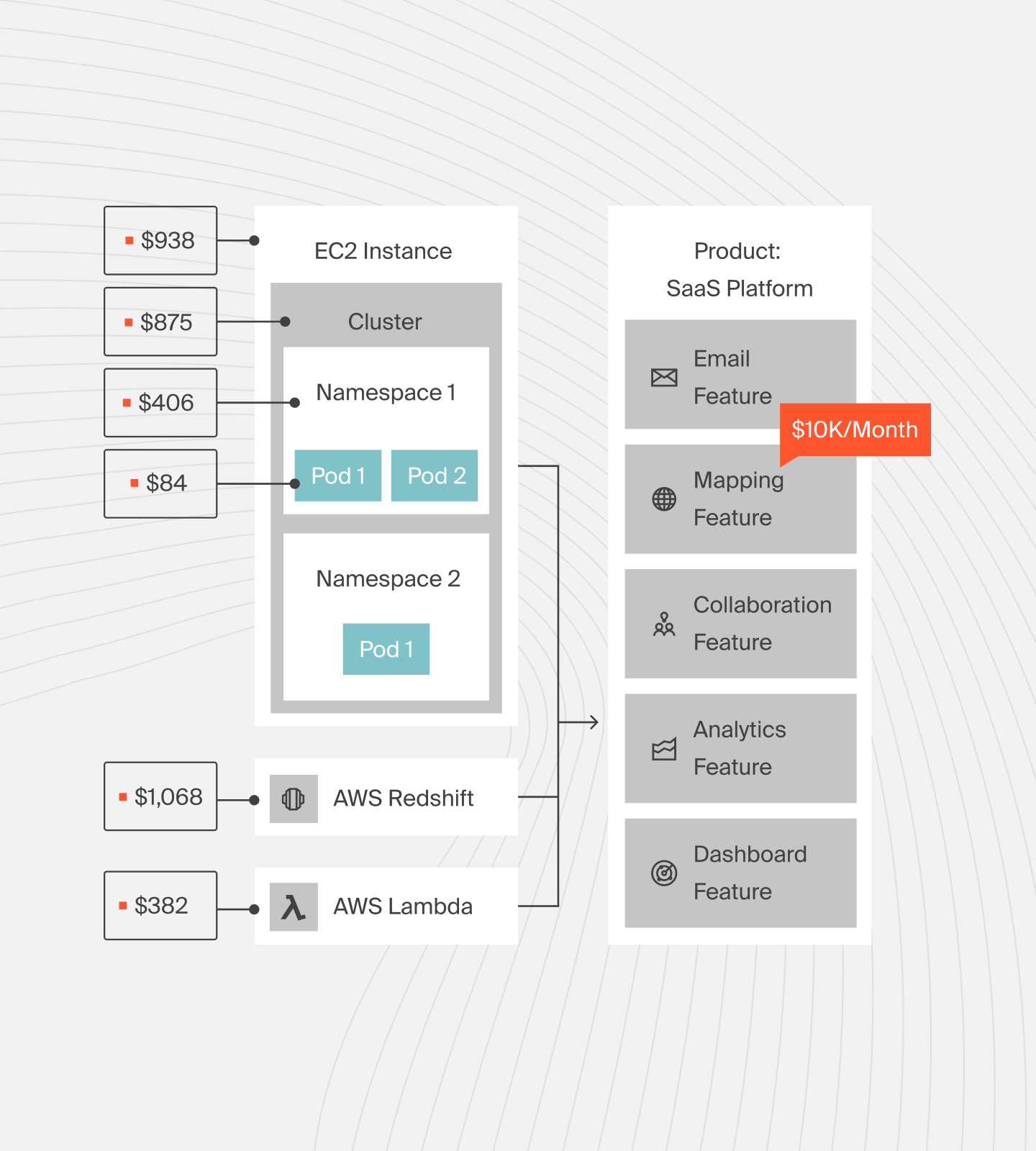

CloudZero pulls cost data from Fargate, EKS, AKS, GKE, and more, then maps it to what matters — your features, teams, and customers. You see the cost per service or deployment, rather than a single, vague monthly total.

With CostFormation, CloudZero automatically connects every container and workload to the right cost. No tagging, no spreadsheets — just real visibility.

For serverless containers, CloudZero uncovers what AWS or Azure pricing hides. For Kubernetes clusters, it breaks spend down by node, pod, or namespace so you know which workloads drive cost.

Finance and engineering also see the same numbers, in real time, with shared context. That means faster decisions, smarter scaling, and fewer billing surprises.

You’ve read what CloudZero can do. Now see it for yourself and learn how teams like Toyota, Duolingo, Wise, and more cut spend, boost visibility, and deliver savings in weeks.