Google Cloud offers Google Kubernetes Engine (GKE) as a managed service built on open-source Kubernetes (K8s). GKE promises a user-friendly, reliable, and cost-effective experience. Yet calculating GKE costs can be daunting, from understanding what you're paying for to maximizing your return on investment.

In this GKE pricing guide, we’ll discuss how GKE pricing works, what it costs, and more.

What Is The Difference Between Kubernetes And Google Kubernetes Engine (GKE)?

Kubernetes is an open-source, highly scalable platform for managing containerized workloads. GKE, by contrast, is a fully managed Kubernetes service that runs only on Google's cloud infrastructure (Google Cloud).

While you can run Kubernetes on any cloud provider, GKE is available only to Google Cloud customers. In return, GKE ships with built-in security defaults, whereas you must secure self-managed Kubernetes yourself.

Also, self-managed Kubernetes requires manual installation, configuration, and ongoing maintenance. GKE, on the other hand, handles most of the underlying operations for you, such as scheduling, scaling, and monitoring across the control plane, clusters, and nodes.

Notably, Google developed Kubernetes (K8s) but donated it, along with Knative and Istio, to the Cloud Native Computing Foundation (CNCF). Google Cloud still supports K8s development, alongside other contributors such as Red Hat and Rancher.

So, how does GKE billing work?

GKE Billing Explained: How Does GKE Pricing Work?

GKE charges you based on your cluster mode: Standard or Autopilot. Other factors include cluster management fees, backups, multi-cluster ingress, and discounts that can lower your bill.

Here’s a breakdown of everything that impacts your bill:

GKE Standard vs. Autopilot modes: How the cluster operation mode affects GKE pricing

GKE's Autopilot mode is a good fit for organizations that are new to Kubernetes, lack in-house Kubernetes expertise, or simply want an easier, hosted way to run containerized workloads. Google handles most of the infrastructure management on the organization's behalf.

Autopilot mode is billed on a pay-per-pod basis, plus a cluster management fee of $0.10 per hour per cluster once your free tier credits are used up. In addition, you pay for the CPU, memory, and ephemeral storage that your scheduled pods request.

Here’s more:

- There’s no minimum duration required, and resource billing is done in one-second increments.

- Unscheduled pods, unallocated space, system pods, and operating system overhead are not charged.

- To reduce your Google Cloud costs further, you can use Spot Pods in Autopilot mode. Spot Pods work much like Compute Engine Spot VMs or AWS Spot Instances: they're priced well below on-demand rates when spare capacity is available, but they can be reclaimed at any time with little warning (Compute Engine gives Spot VMs a 30-second notice). Only use them for workloads that can handle interruptions.

- Committed use discounts apply in Autopilot mode, too. Exactly how much you can save depends on the GKE compute class you choose.
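To make the pay-per-pod model concrete, here is a minimal sketch of an Autopilot estimate: the management fee plus each scheduled pod's CPU, memory, and ephemeral storage requests, billed per second. The per-resource rates are hypothetical placeholders (real rates depend on region and compute class and appear in the pricing tables below), and the free tier credit is not applied.

```python
from dataclasses import dataclass

# Hypothetical placeholder rates in USD per hour. Real Autopilot rates vary
# by region and compute class; check the GKE pricing page before relying on them.
VCPU_RATE_PER_HOUR = 0.05        # per vCPU requested
MEMORY_RATE_PER_HOUR = 0.005     # per GiB of memory requested
STORAGE_RATE_PER_HOUR = 0.0001   # per GiB of ephemeral storage requested
CLUSTER_FEE_PER_HOUR = 0.10      # management fee quoted in the text


@dataclass
class PodRequest:
    vcpu: float
    memory_gib: float
    ephemeral_gib: float
    seconds_scheduled: int  # only time spent scheduled is billed


def pod_cost(pod: PodRequest) -> float:
    """Cost of one pod's resource requests, billed in one-second increments."""
    hourly = (pod.vcpu * VCPU_RATE_PER_HOUR
              + pod.memory_gib * MEMORY_RATE_PER_HOUR
              + pod.ephemeral_gib * STORAGE_RATE_PER_HOUR)
    return hourly * pod.seconds_scheduled / 3600


def autopilot_cost(pods: list[PodRequest], cluster_seconds: int) -> float:
    """Management fee plus scheduled pod requests (free tier not applied)."""
    fee = CLUSTER_FEE_PER_HOUR * cluster_seconds / 3600
    return fee + sum(pod_cost(p) for p in pods)
```

A pod that is never scheduled contributes `seconds_scheduled=0` and therefore costs nothing, mirroring the rule that unscheduled pods aren't charged.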

Autopilot mode provides four types of compute classes:

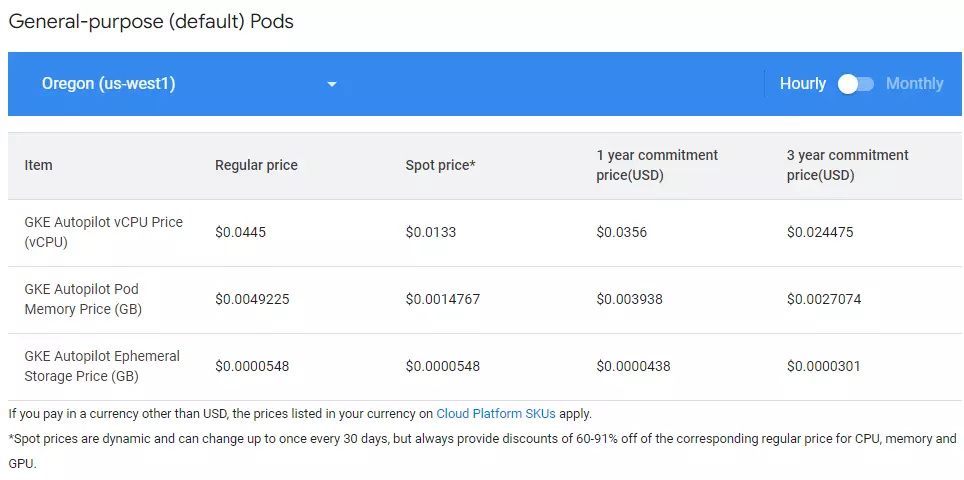

General purpose

GKE uses this class by default when you don't request a specific compute class. It's ideal for moderately intensive workloads such as small to medium-sized web servers and databases.

Credit: Pricing for GKE Autopilot mode’s general-purpose pods in the Oregon region

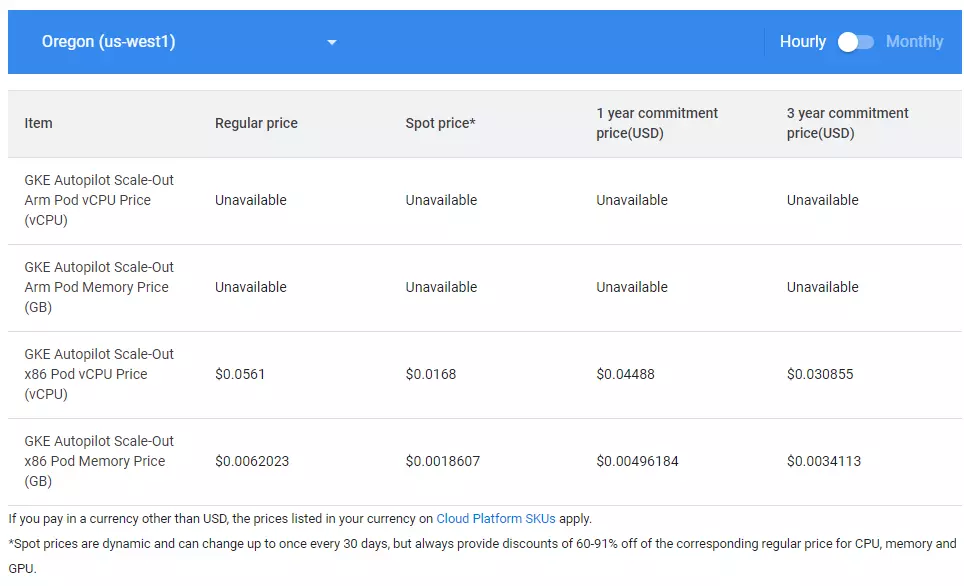

Scale-out pods

This scale-optimized class disables simultaneous multithreading (SMT), so each vCPU maps to a full physical core. It suits workloads such as processing data logs, deploying containerized microservices, and running large-scale Java applications.

Credit: Pricing for GKE Autopilot mode’s scale-out pods in the Oregon region

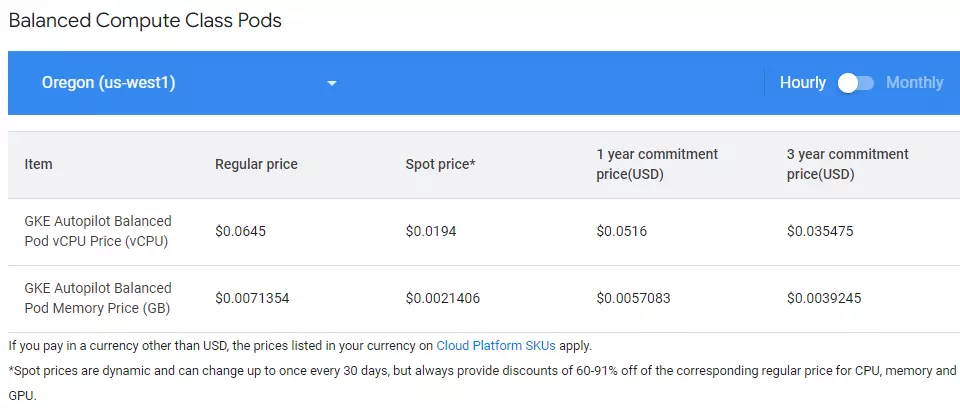

Balanced pods

Pick this compute class when you want higher performance than general-purpose pods can provide, but with a good balance of CPU and memory capacity.

Credit: Pricing for GKE Autopilot mode’s balanced compute class pods in the Oregon region

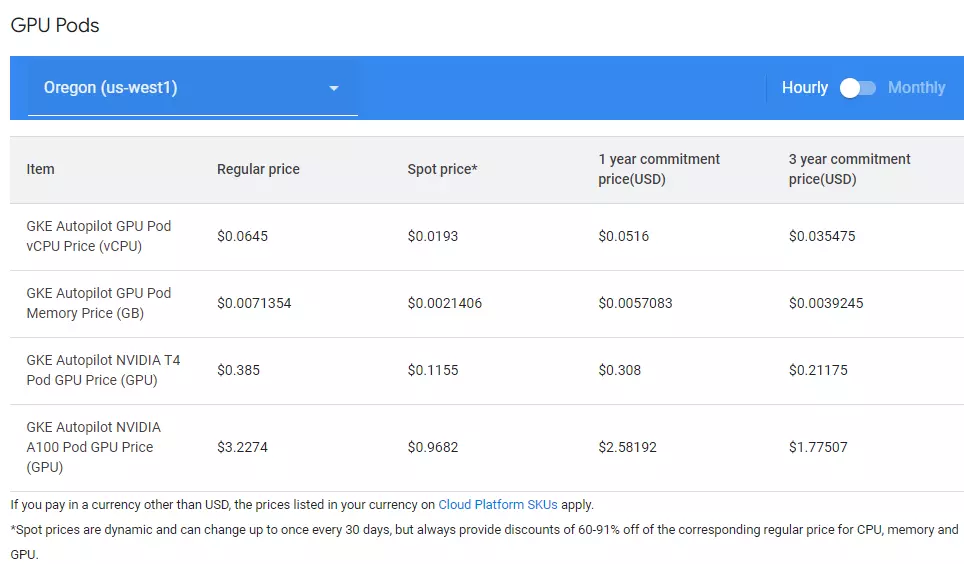

GPU pods

Choose this GKE compute class for graphics-intensive workloads, including data visualization, gaming, and multimedia applications.

Credit: Pricing for GKE Autopilot mode’s GPU compute class pods in the Oregon region

GKE's Standard mode, by contrast, gives organizations more control by letting them customize the infrastructure that runs their containerized workloads. In Standard mode, Compute Engine instances serve as the cluster's worker nodes.

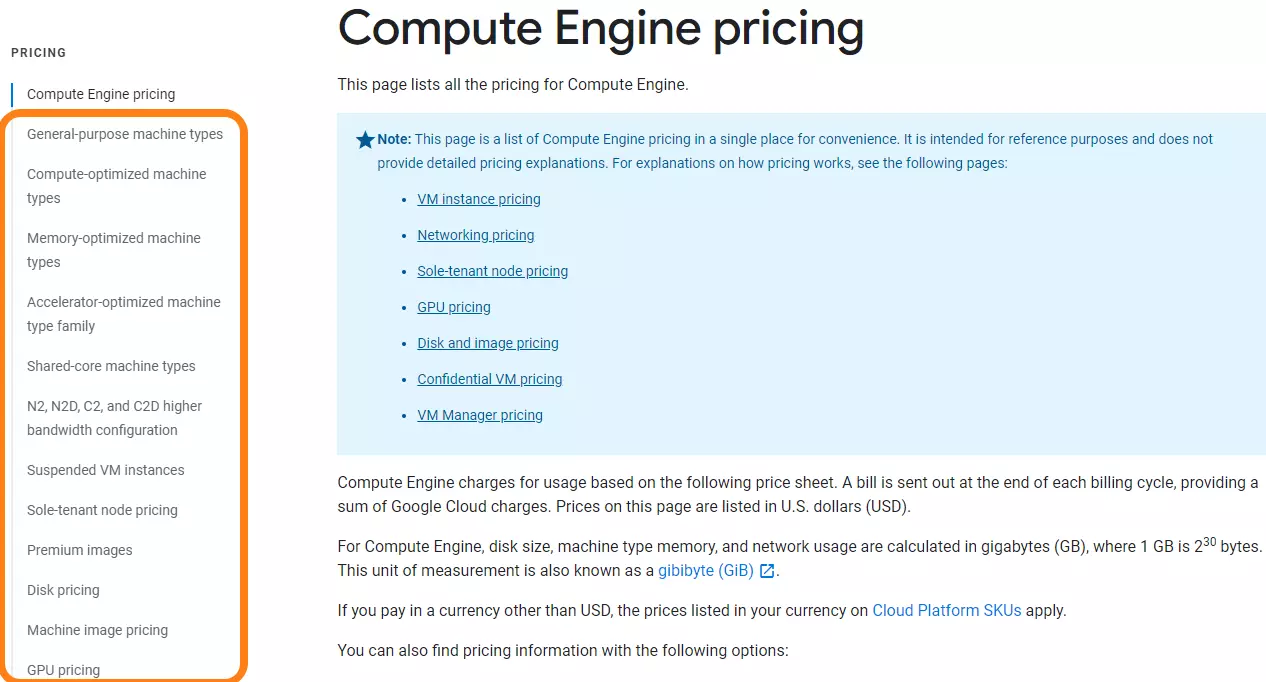

It follows that Standard mode pricing is pay-per-node, based on the Compute Engine price of each instance in the cluster. There's more:

- You pay a management fee of $0.10 per hour per cluster, regardless of topology (zonal or regional) or cluster size. This fee is billed in one-second increments and runs until you delete the cluster.

- Compute Engine resources are billed per second at on-demand rates, with a minimum charge of one minute of usage.

- You can also apply committed use discounts, which are calculated from the Compute Engine prices of the instances in a cluster.

- In Standard mode, you can use Spot VMs to run fault-tolerant workloads on surplus Compute Engine capacity at a steep discount.
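The Standard mode billing rules above (a per-cluster fee plus per-second node billing with a one-minute minimum) can be sketched as follows. The node's hourly rate is a parameter you would take from Compute Engine pricing; the values in the usage note are hypothetical, and discounts and the free tier are ignored.

```python
CLUSTER_FEE_PER_HOUR = 0.10  # management fee quoted in the text
MIN_BILLED_SECONDS = 60      # Compute Engine's one-minute minimum


def node_cost(hourly_rate: float, seconds_running: int) -> float:
    """On-demand cost of one node: per-second billing with a one-minute minimum."""
    billed_seconds = max(seconds_running, MIN_BILLED_SECONDS)
    return hourly_rate * billed_seconds / 3600


def standard_cost(node_hourly_rate: float, node_seconds: list[int],
                  cluster_seconds: int) -> float:
    """Management fee plus every node's cost (free tier and discounts not applied)."""
    fee = CLUSTER_FEE_PER_HOUR * cluster_seconds / 3600
    return fee + sum(node_cost(node_hourly_rate, s) for s in node_seconds)
```

For example, with a hypothetical $0.36/hour node, a node that runs for only 30 seconds is still billed for 60 seconds, so `node_cost(0.36, 30)` returns $0.006 rather than $0.003.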

The exact price depends on the Compute Engine machine types you choose. There are five types to pick from:

Types of Compute Engine machines and their pricing

- General purpose – Best for workloads requiring balanced CPU and memory capacity for small and medium-intensity use cases.

- Compute-optimized – Ideal for workloads that demand more processing power than general-purpose machines can deliver.

- Memory-optimized – Suitable for use cases that require large amounts of RAM.

- Accelerator-optimized – Best for GPU-accelerated workloads, such as graphics-intensive applications.

- Shared core – The instances come with fractional vCPUs and custom RAM settings.

As you've probably noticed by now, your GKE mode of operation is the biggest factor in your bill. Still, a few more details affect what you pay. Let's break those down together.

GKE Free Tier

You get $74.40 per month in credits per billing account. The credits apply to Autopilot and zonal Standard clusters.

Once it’s used up, normal rates apply.
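The $74.40 figure is not arbitrary: a 31-day month has 744 hours, and 744 × $0.10 = $74.40, so the credit covers the management fee of one cluster running all month. The sketch below applies the credit to the combined hours of eligible clusters; it assumes every cluster counted is an Autopilot or zonal Standard cluster, since the text says the credit doesn't cover regional Standard clusters.

```python
CLUSTER_FEE_PER_HOUR = 0.10
FREE_TIER_CREDIT = 74.40          # USD per billing account per month
HOURS_IN_31_DAY_MONTH = 31 * 24   # 744 hours


def net_management_fee(eligible_cluster_hours: float) -> float:
    """Monthly management fee left over after the free tier credit.

    eligible_cluster_hours: hours summed across Autopilot and zonal Standard
    clusters in one billing account for the month.
    """
    gross = eligible_cluster_hours * CLUSTER_FEE_PER_HOUR
    return max(0.0, gross - FREE_TIER_CREDIT)
```

Running two eligible clusters all month (1,488 cluster-hours) leaves about $74.40 in management fees after the credit.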

Cluster management fees

GKE charges $0.10/hour per cluster in both modes. It’s billed per second and runs until the cluster is deleted. Anthos clusters are excluded.

Multi-cluster ingress costs

Multi-cluster ingress load-balances traffic across clusters in multiple regions. Without Anthos, it costs $3 per backend pod per month (about $0.0041 per hour), and you only pay for pods that are directly connected to MultiClusterIngress resources.
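Because the charge scales with backend pods, the arithmetic is pod-hours times the hourly rate. This minimal sketch uses the $0.0041/hour standalone rate from the text and assumes an average month of about 730 hours.

```python
MCI_RATE_PER_POD_HOUR = 0.0041  # standalone (non-Anthos) rate quoted in the text
HOURS_PER_MONTH = 730           # assumption: an average month of ~730 hours


def mci_cost(backend_pods: int, hours: float = HOURS_PER_MONTH) -> float:
    """Cost for pods that back MultiClusterIngress resources over `hours`."""
    return backend_pods * hours * MCI_RATE_PER_POD_HOUR
```

Ten backend pods for a full month come to about $29.93, which lines up with the $3-per-pod monthly figure once rounding is taken into account.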

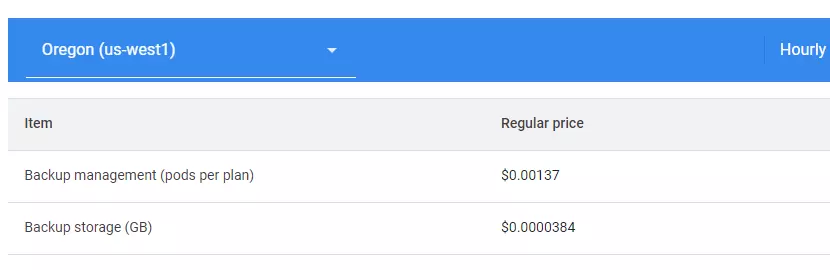

GKE backups

You pay based on:

- The volume of data stored per GB/month

- The number of pods protected

- Cross-region network egress, if backups are stored in a different region

Credit: Pricing for backups on GKE in the Oregon region
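The three backup cost drivers listed above combine additively. Here is a minimal sketch; the `BackupRates` structure and all rate values are hypothetical placeholders for illustration, so substitute the current figures for your region from the pricing table.

```python
from dataclasses import dataclass


@dataclass
class BackupRates:
    # Hypothetical placeholder rates in USD; check the Backup for GKE pricing page.
    per_protected_pod_month: float
    per_gb_month: float
    egress_per_gb: float


def backup_monthly_cost(rates: BackupRates, protected_pods: int,
                        stored_gb: float, cross_region_egress_gb: float = 0.0) -> float:
    """Protected-pod fee + storage + cross-region egress for one month."""
    return (protected_pods * rates.per_protected_pod_month
            + stored_gb * rates.per_gb_month
            + cross_region_egress_gb * rates.egress_per_gb)
```

Keeping backups in the same region as the cluster leaves `cross_region_egress_gb` at zero, which removes the egress term entirely.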

Committed use discounts

Save around 20% with a 1-year commitment, or over 45% with a 3-year commitment. Discounts apply in both modes, and the exact savings depend on the compute resources you use.

Spot VM and Spot pod discounts

Use Spot VMs in Standard mode or Spot Pods in Autopilot mode to run fault-tolerant workloads at a steep discount; Google advertises Spot VM prices 60 to 91% below on-demand rates. They're cheaper, but they can be reclaimed at short notice.
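To compare these options against an on-demand estimate, you can apply each discount to the same baseline. This sketch uses the approximate 20% and 45% commitment discounts from the text and a hypothetical 60% Spot discount; it treats each option as an alternative pricing scenario for the same workload rather than stacking them.

```python
# Approximate discounts from the text; the Spot figure is a hypothetical assumption.
DISCOUNTS = {
    "on-demand": 0.0,
    "1-year CUD": 0.20,
    "3-year CUD": 0.45,
    "spot": 0.60,
}


def discounted(on_demand_cost: float) -> dict[str, float]:
    """Estimated cost under each pricing option, rounded to cents."""
    return {name: round(on_demand_cost * (1 - d), 2) for name, d in DISCOUNTS.items()}
```

Calling `discounted(1000)` returns $800 for a 1-year commitment, $550 for a 3-year commitment, and $400 under the assumed Spot discount.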

Next, let's look at how to estimate your GKE costs for a given configuration.

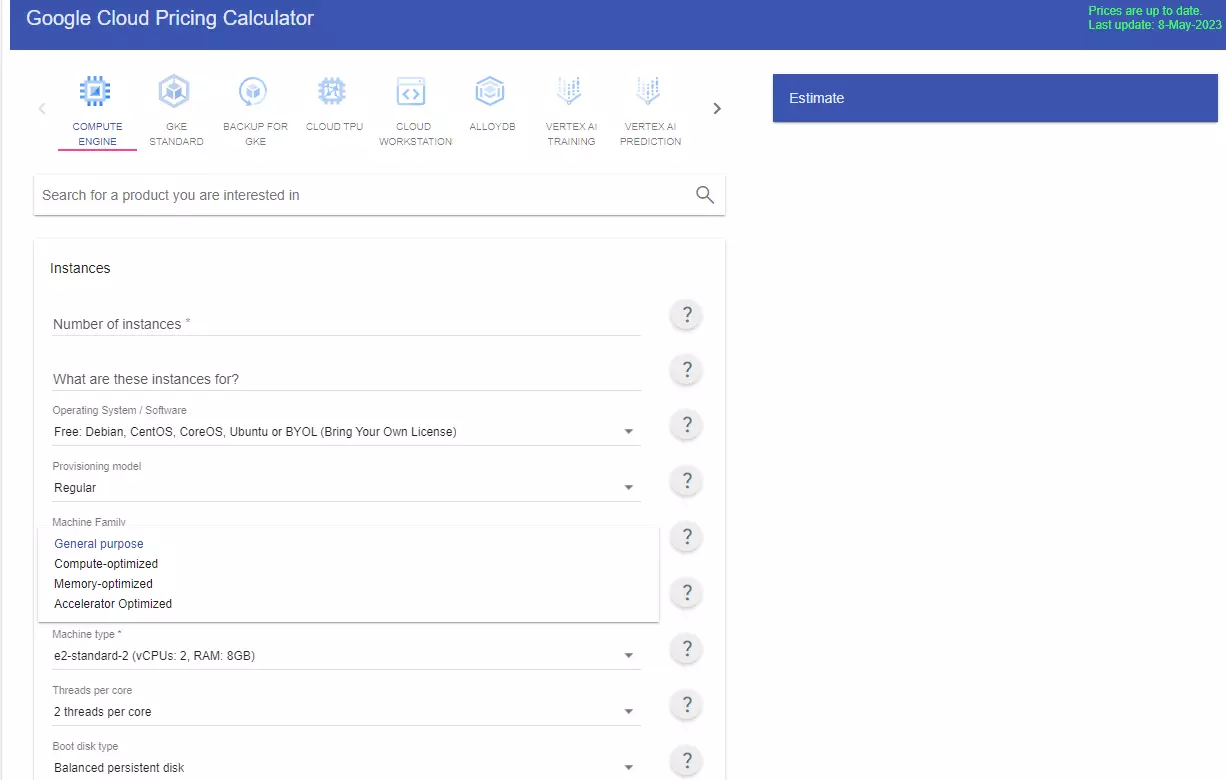

How To Use The Google Cloud Pricing Calculator

So far, we've focused on breaking down Google Kubernetes Engine costs, which should give you a clear idea of what you're actually paying for on GKE.

But if you just want a quick estimate for a particular GKE configuration, you can use the Google Cloud Pricing Calculator.

Select your preferred configuration options, and the calculator displays a cost estimate on the right. Take a look at this:

How the Google Cloud Pricing Calculator works

The calculator is quite user-friendly, so the quickest way to learn it is to try it yourself.

How To Choose The Best GKE Pricing Plan For You

Every organization runs its workloads differently, so the right GKE mode depends on your situation. As a pointer, here are a few things to think about:

When to use GKE’s Autopilot Mode

Use Autopilot when:

- The default GKE infrastructure configuration meets your workload's needs.

- Your Kubernetes engineers don't yet have the experience to manage manual configurations on an ongoing basis.

- Autopilot mode gives you the best price-performance ratio.

When to use GKE’s Standard Mode

Use Standard mode when:

- You would benefit from more control over the infrastructure your workload runs on.

- You have the expertise to set up, manage, and optimize the underlying infrastructure.

- Standard mode pricing gives you the better price-performance ratio.
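The price-performance question often comes down to utilization: Autopilot bills only what your pods request, while Standard bills whole nodes whether or not they're full. This sketch compares the two for a given total of requested vCPU and memory. All rates and the node shape are hypothetical placeholders, the management fee is omitted because it's the same in both modes, and node packing is simplified (it ignores system overhead and how individual pods fit onto nodes).

```python
import math

# Hypothetical placeholder rates in USD per hour; real rates differ by region.
AUTOPILOT_VCPU_RATE = 0.045   # per requested vCPU
AUTOPILOT_GIB_RATE = 0.005    # per requested GiB of memory
NODE_RATE = 0.134             # per node of the assumed shape below
NODE_VCPU, NODE_GIB = 4, 16   # assumed node shape: 4 vCPUs, 16 GiB


def autopilot_hourly(total_vcpu: float, total_gib: float) -> float:
    """Autopilot: pay only for what scheduled pods request."""
    return total_vcpu * AUTOPILOT_VCPU_RATE + total_gib * AUTOPILOT_GIB_RATE


def standard_hourly(total_vcpu: float, total_gib: float) -> float:
    """Standard: pay for whole nodes, enough to cover both CPU and memory."""
    nodes = max(math.ceil(total_vcpu / NODE_VCPU), math.ceil(total_gib / NODE_GIB))
    return nodes * NODE_RATE
```

With these placeholder numbers, a small workload (0.5 vCPU, 2 GiB) is cheaper on Autopilot because Standard still bills a full node, while a workload that exactly fills two nodes (8 vCPUs, 32 GiB) is cheaper on Standard.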

How To Really Understand, Control, And Optimize Your GKE Costs

Google's Cloud Pricing Calculator provides only a rough estimate of your GKE costs, so your actual bill can differ and catch you by surprise. The challenge then is:

- How do you tell exactly what, why, and who is driving your GKE costs?

- How do you pinpoint the products, processes, and people influencing your GKE pricing when Google Cloud sends you a bill without these details?

- How can you reduce unnecessary spending on GKE when you are not sure where the money is going in the first place?

- And what can you do to truly understand and optimize your GKE costs on an ongoing basis without endless tagging, manual evaluations, and so on?

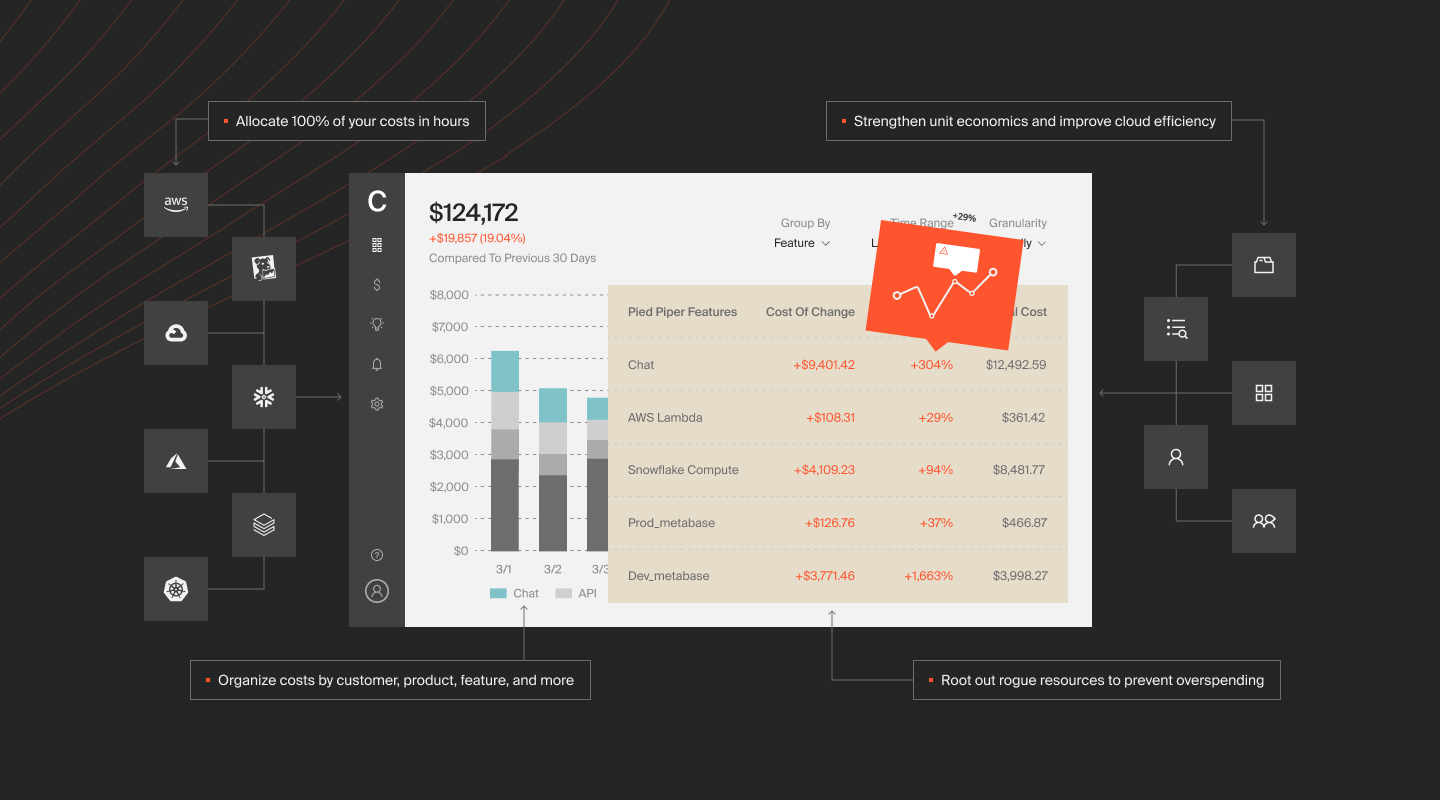

CloudZero can help.

Here’s how:

- Accurately collect, analyze, and share Google Cloud costs, including GKE costs, even if you have messy cost allocation tags.

- View your unit costs, such as cost per individual customer, per team, per GKE project, per feature, per product, etc.

- Get contextual cost insights by role: engineering, finance, and FinOps. Engineers get metrics such as cost per deployment, feature, project, etc. Finance gets insights such as cost per customer, product, gross profit, etc. FinOps gets intel such as cost of goods sold (COGS), margin analysis data, etc.

- Bring together Google Cloud, AWS, and Azure cost analysis to ease your multi-cloud cost management. CloudZero also supports Kubernetes cost analysis, as well as platforms such as Databricks, New Relic, Datadog, and MongoDB.

- Take advantage of real-time cost anomaly detection and receive timely alerts to stop unnecessary spending before it’s too late.

Yet reading about CloudZero's Cloud Cost Intelligence approach is nothing like seeing it in action for yourself.