Cloud computing promised speed, scale, and freedom. And it delivered. Engineers can deploy in seconds. Teams can scale globally overnight. But somewhere between all that freedom and speed, control got blurry.

Resources piled up. Budgets ballooned. And suddenly, no one could answer the simple question: What are we paying for and why?

Cloud resource management is how we reclaim that control, without slowing down. In this guide, we’ll decode what it is, how it works, and how to master it across engineering, finance, and leadership.

What Is Cloud Resource Management?

Cloud resource management is the practice of strategically allocating, monitoring, and optimizing computing resources across cloud environments. These resources include virtual machines (compute), containers, storage volumes, and network bandwidth.

Here’s the thing. How you handle your cloud resources has a direct impact on performance, availability, and cost.

For CTOs and software engineers, this means provisioning the right infrastructure to support agile development and scale with demand.

For CFOs, it means minimizing waste, improving cost predictability, and ensuring cloud investments align with business value (and produce ROI).

Proper cloud resource management ensures your cloud resources are used efficiently, securely, and in alignment with both engineering needs and financial goals.

How Does Resource Management Work In Cloud Computing?

Let’s break down how effective resource management in the cloud works.

1. Provisioning and allocation

Cloud platforms let you spin up compute, storage, and networking resources on demand. But provisioning is also about choosing the right size, region, and configuration for your workload.

Picture this:

- An engineer might choose a specific Amazon EC2 instance type (or a GKE node pool, for managed K8s) that delivers just enough performance for a specific workload.

- A finance professional may prefer Reserved Instances or Savings Plans over On-Demand pricing. The goal here would be to balance performance demands with cost control.

Overall, misaligned provisioning, such as oversized VMs or unused services, is often the top source of waste (a.k.a. surprise bills and shrinking margins).
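The engineering-versus-finance trade-off above often comes down to simple arithmetic. Here is a minimal sketch, assuming a commitment (Reserved Instance or Savings Plan) that is billed for every hour of its term at a discounted effective rate, while On-Demand is billed only for the hours an instance actually runs. The rates are hypothetical placeholders, and upfront-payment options are ignored.

```python
def breakeven_utilization(on_demand_hourly: float, committed_hourly: float) -> float:
    """Fraction of hours an instance must run for a commitment to pay off.

    A commitment is billed for every hour of the term whether or not the
    instance runs; On-Demand is billed only for the hours it runs.
    """
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return committed_hourly / on_demand_hourly


def cheaper_option(on_demand_hourly: float, committed_hourly: float,
                   expected_utilization: float) -> str:
    """Compare the expected cost per term-hour of each pricing model."""
    on_demand_cost = on_demand_hourly * expected_utilization
    committed_cost = committed_hourly  # paid for every hour of the term
    return "commitment" if committed_cost < on_demand_cost else "on-demand"


# Hypothetical rates: $0.10/hr On-Demand vs. an effective $0.06/hr committed.
print(round(breakeven_utilization(0.10, 0.06), 2))  # 0.6
print(cheaper_option(0.10, 0.06, 0.9))              # commitment
print(cheaper_option(0.10, 0.06, 0.3))              # on-demand
```

In other words, with these made-up rates a commitment only wins if the instance runs more than about 60% of the time, which is why steady baseline workloads are the usual candidates.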

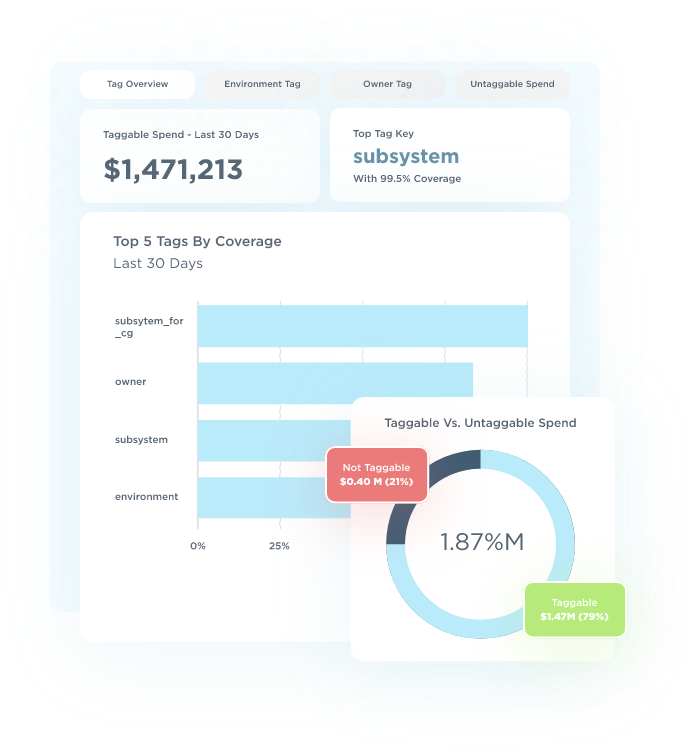

2. Tagging and metadata management

Tags are labels that help you group and organize resources by environment (prod, dev, staging), team (data, platform), project, or customer.

Done right, tagging enables accurate cost allocation and chargebacks. But when neglected or inconsistently applied, it creates a reporting and accountability black hole.

Keeping tags accurate and consistent is a major reason to use tools like AWS Tag Editor or Azure Policy.

Better yet, using more robust platforms, like CloudZero, can help you extract cost context from your infrastructure and application data, even from untagged or poorly tagged assets.

3. Monitoring and visibility

Once your resources are live, you need good observability tools to monitor usage, performance, and cost. This includes:

- Metrics and logs: CPU usage, storage consumption, traffic spikes

- Dashboards and alerts: For spotting anomalies, underutilized resources, or unexpected spikes

- Recommendations: Many platforms offer automated optimization insights (think AWS Trusted Advisor, GCP Recommender, and CloudZero Advisor) to help you tune your current configuration for the best price-performance ratio.

The best observability tools don’t just show what’s happening. They unravel why it’s happening, with business context. Take CloudZero, for example.

Let’s say your Amazon EC2 costs jumped 12% last month. Native tools tell you that it happened. CloudZero shows how; it pinpoints the specific deployment, team, or feature that triggered the spike.

That’s real observability. It helps you retrace your steps and cut waste — without compromising performance, engineering velocity, or user experience.

The right tool can help you here. Consider these examples:

- Cost per Feature: Identify opportunities to shift popular free features into paid plans. Or, decide if it’s time to retire that costly but unpopular feature.

- Cost per Customer: Justify renegotiating contracts with high-cost, low-revenue customers. This way, you can protect your margins without discouraging subscription renewals.

- Cost per Deployment: Forecast infrastructure needs and costs as customer adoption and engineering velocity scale.
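Unit costs like these usually start with splitting a shared bill across the things that consume it. Below is a minimal sketch of one common approach, proportional allocation by a usage driver (here, requests served per feature). The feature names and figures are hypothetical, and this is an illustration of the general technique, not how any particular platform computes it.

```python
def allocate_by_usage(shared_cost: float, usage: dict[str, float]) -> dict[str, float]:
    """Split a shared cost across consumers in proportion to their usage."""
    total = sum(usage.values())
    if total <= 0:
        raise ValueError("total usage must be positive")
    return {name: shared_cost * amount / total for name, amount in usage.items()}


# Hypothetical: $10,000 of shared compute, split by requests served per feature.
requests_per_feature = {"search": 600_000, "export": 300_000, "reports": 100_000}
for feature, cost in allocate_by_usage(10_000, requests_per_feature).items():
    print(f"{feature}: ${cost:,.2f}")
```

The choice of driver matters: requests, CPU-seconds, or stored bytes can each produce a very different "cost per feature" for the same bill.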

For later reading: How Hiya Answers “What If” Questions Around New Business Initiatives (Maintaining a -0.6% Cloud Spend Growth Rate)

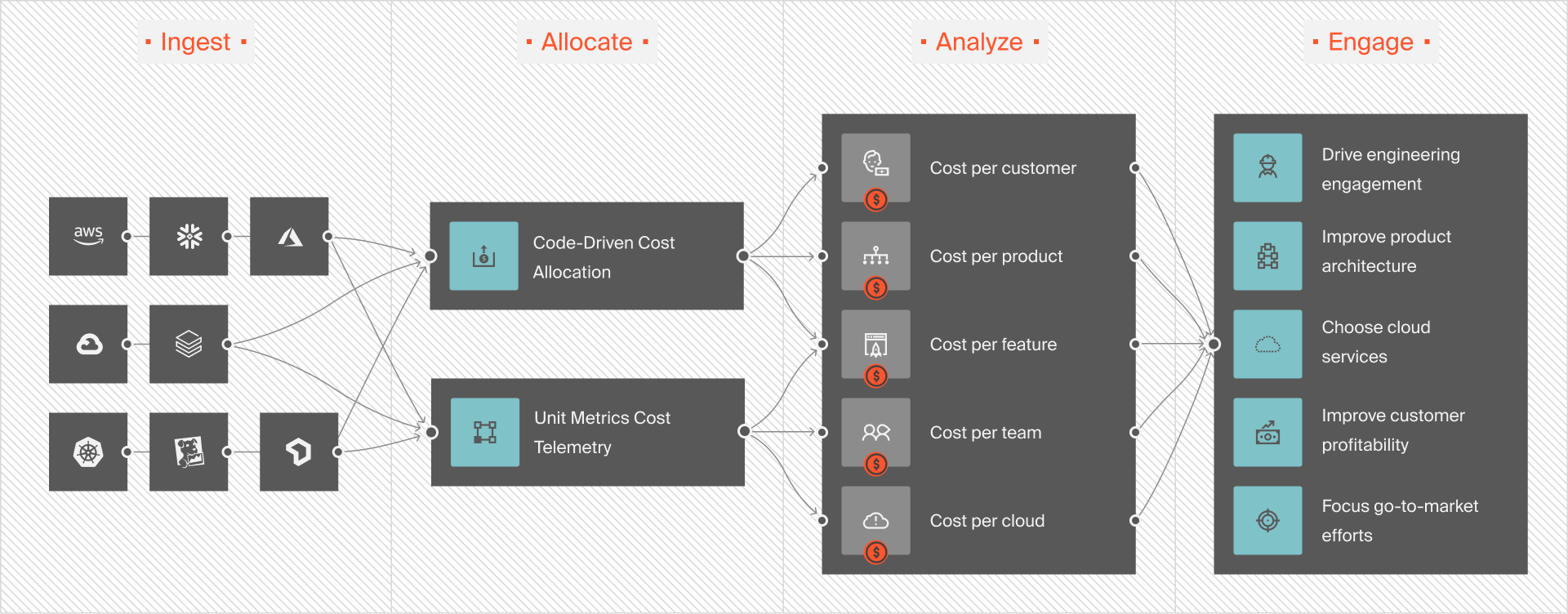

You can understand not just the what, but also the who, how, and why behind your cloud dollars. We call this approach Cloud Cost Intelligence, and it’s foundational to modern cloud resource management.

4. Automation and governance

Modern cloud environments rely heavily on automation to scale reliably and cost-effectively. Consider these:

- Infrastructure as Code (IaC) tools like Terraform or Pulumi define resources declaratively for repeatability.

- Autoscaling provisions or shuts down resources based on real-time load, balancing performance with cost control.

- Budgets and guardrails: Budget alerts (like AWS Budgets) and governance tools (like Azure Management Groups or AWS Organizations) help prevent overspending and rogue deployments.
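To make the guardrail idea concrete, here is a hypothetical pre-deployment check a CI pipeline could run. It assumes you already have an estimated monthly cost for both the team's existing footprint and the proposed change; the `Budget` type, the 80% warning ratio, and the figures are illustrative, not part of any cloud provider's API.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    team: str
    monthly_limit: float
    committed_monthly: float  # estimated monthly cost of what the team already runs


def check_deployment(budget: Budget, new_monthly_cost: float,
                     warn_ratio: float = 0.8) -> str:
    """Return 'allow', 'warn', or 'block' for a proposed deployment."""
    projected = budget.committed_monthly + new_monthly_cost
    if projected > budget.monthly_limit:
        return "block"
    if projected > warn_ratio * budget.monthly_limit:
        return "warn"
    return "allow"


# Hypothetical team budget: $20,000/month, with $15,000 already committed.
data_team = Budget(team="data", monthly_limit=20_000, committed_monthly=15_000)
print(check_deployment(data_team, 500))    # allow
print(check_deployment(data_team, 2_000))  # warn
print(check_deployment(data_team, 6_000))  # block
```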

5. Cross-functional cost intelligence

For cloud resource management to work, engineering and finance need shared visibility into usage and cost drivers. Cloud-native tools like AWS Cost Explorer and Azure Cost Management are a starting point. They break down usage and costs by application or team.

Advanced platforms offer granular insights, down to the hour, feature, product line, or customer. This gives every stakeholder the clarity and accountability to optimize cloud resources on a daily basis.

Now, let’s break down the different types and how each supports your goals.

Types Of Cloud Resource Management (Like, The Crucial Stuff)

Different teams within your organization care about different dimensions. So, to manage cloud resources effectively, it helps to break the discipline into three interconnected layers.

1. Infrastructure resource management

This is the technical foundation. It involves managing your compute, storage, and networking resources to support application performance and reliability.

- Engineers ensure the right instance types, autoscaling groups, and storage classes are in place.

- Common tasks here include right-sizing VMs, managing container orchestration (like Kubernetes), and applying availability best practices.

The tools involved here include Terraform, Kubernetes, AWS Auto Scaling, Azure Virtual Machine Scale Sets, and more.

The goal: Maximize performance and uptime while minimizing wasteful overprovisioning.

2. Application-level resource management

This layer focuses on how applications consume infrastructure and how those patterns impact cost and user experience.

- Your team monitors how specific services, microservices, or features use resources.

- Think: “How much memory does this new ML model use?” or “How much traffic does Feature X generate during peak hours?”

- You also track horizontal scaling decisions, CI/CD-driven deployment impacts, and user-triggered usage spikes.

The goal: Tune application design for performance, resilience, and efficiency at runtime.
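For questions like "How much memory does this new ML model use?", one lightweight starting point in Python services is the standard library's `tracemalloc` module. The sketch below wraps a call and reports peak traced allocation; `build_features` is a hypothetical stand-in for real preprocessing or inference code. Note that `tracemalloc` only sees memory allocated through Python's allocators, so memory managed elsewhere (for example, some native or GPU libraries) may not show up.

```python
import tracemalloc


def peak_memory_bytes(func, *args, **kwargs):
    """Run func and return (result, peak bytes traced by tracemalloc during the call)."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
    finally:
        tracemalloc.stop()
    return result, peak


def build_features(n):
    # Hypothetical stand-in for model preprocessing or inference.
    return [float(i) * 0.5 for i in range(n)]


result, peak = peak_memory_bytes(build_features, 100_000)
print(f"{len(result)} items, peak ~ {peak / 1_048_576:.1f} MiB")
```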

3. Financial resource management (a.k.a. cloud cost governance)

This is where engineering meets finance. It’s also where many organizations struggle. The goal here is to connect cloud usage to business outcomes and ensure every dollar you spend has a purpose.

- You track spend by team, feature, environment, or customer.

- Forecasting, budgeting, and real-time anomaly detection are key here.

- You’ll want to use a cost intelligence platform to go beyond raw billing data and see unit costs per business metric (like cost per product feature or cost per project).

The goal: Drive accountability, prevent overspend, and optimize ROI.
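Forecasting doesn't have to start sophisticated. Here is a minimal sketch of a naive run-rate forecast, assuming the rest of the month looks like the average day so far. The spend figure is hypothetical, and seasonal or spiky workloads will need a better model.

```python
import calendar
from datetime import date


def month_end_forecast(month_to_date_spend: float, today: date) -> float:
    """Naive linear run-rate forecast (counts today as a full day)."""
    days_elapsed = today.day
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return month_to_date_spend / days_elapsed * days_in_month


# Hypothetical: $12,000 spent in the first 10 days of June.
forecast = month_end_forecast(12_000, date(2024, 6, 10))
print(f"${forecast:,.0f}")  # $36,000
```

Comparing this number against the monthly budget each day is a cheap early warning long before the invoice arrives.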

Each type of resource management reinforces the others. Optimizing infrastructure, for example, can lower application costs, making your cloud financial planning more predictable.

Next, we’ll move from theory to practice.

Real-World Cloud Resource Management Examples To Learn From

Let’s look at how real organizations use these cloud resource management types to solve everyday performance and cost challenges.

1. Kubernetes cost control in a SaaS environment

A SaaS company runs workloads on Amazon EKS. They initially over-provisioned their clusters to avoid performance issues. A hefty bill and a tense board meeting later, they reconsidered.

By adopting best practices like autoscaling and right-sizing nodes and pods, they cut monthly compute costs — without sacrificing performance.
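Pod autoscaling in Kubernetes is driven by a simple ratio. The sketch below mirrors the core formula documented for the Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), including a tolerance band for skipping tiny adjustments. It is simplified: the real controller also averages metrics across pods, handles missing metrics, and applies stabilization windows. The function name and default bounds are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Target 60% CPU per pod, currently 4 pods.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

Scaling down as readily as scaling up is what turns autoscaling into a cost control rather than just a performance safeguard.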

You can also track cost per microservice or feature to see how your Kubernetes costs map to product components, which can guide your investment and pricing decisions.

Real case study: How Upstart Saved $20 million on Their Red Hat OpenShift on AWS (ROSA) Usage.

2. Serverless optimization for a FinTech provider

A FinTech team leverages AWS Lambda and DynamoDB to handle unpredictable traffic from customer transactions.

At first, their usage spiked unexpectedly. This led to cost anomalies. They did three things:

- Set budget alerts

- Monitored function duration

- Adjusted memory allocations

The results? They optimized performance while reducing excess Lambda spend.

They used application-level resource management to identify high-cost functions, refactor inefficient code, and improve both cost and latency for critical transactions.
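Adjusting Lambda memory is a trade-off because more memory also brings more CPU, which can shorten duration. Here is a minimal sketch for comparing configurations, assuming cost = GB-seconds × a per-GB-second rate + requests × a per-request rate. The rates are illustrative x86 figures you should confirm against current AWS Lambda pricing for your region; the free tier, tiered discounts, and the per-invocation duration measurements are all hypothetical or ignored.

```python
PRICE_PER_GB_SECOND = 0.0000166667    # illustrative; check current regional pricing
PRICE_PER_REQUEST = 0.20 / 1_000_000  # illustrative; check current regional pricing


def monthly_lambda_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate monthly compute + request cost (ignores free tier and tiered discounts)."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return gb_seconds * PRICE_PER_GB_SECOND + invocations * PRICE_PER_REQUEST


# Hypothetical measured average durations at each memory setting.
configs = {512: 800.0, 1024: 350.0, 2048: 300.0}
for memory_mb, duration_ms in configs.items():
    cost = monthly_lambda_cost(10_000_000, duration_ms, memory_mb)
    print(f"{memory_mb} MB @ {duration_ms:.0f} ms -> ${cost:,.2f}/month")
```

With these made-up measurements, the middle setting is both faster and cheaper than the smallest one, while doubling again costs more for little latency gain.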


3. Cost per customer in a multi-tenant architecture

A multi-tenant SaaS platform needed to understand the true cost of supporting each customer, and whether their contracts were still profitable.

Using CloudZero, the finance team calculated cost per customer across AWS services like EC2, S3, and RDS.

When they found several high-usage clients were unprofitable, they renegotiated pricing to protect margins. This is financial resource management in action.
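Once cost per customer is available, spotting margin problems is a simple comparison. This sketch flags customers whose allocated cloud cost exceeds a chosen share of their revenue. The 30% threshold, customer names, and figures are hypothetical.

```python
def high_cost_customers(revenue: dict[str, float], cloud_cost: dict[str, float],
                        max_cost_ratio: float = 0.3) -> list[str]:
    """Return customers whose cloud cost exceeds max_cost_ratio of their revenue."""
    flagged = []
    for customer, cost in cloud_cost.items():
        rev = revenue.get(customer, 0.0)
        if rev <= 0 or cost / rev > max_cost_ratio:
            flagged.append(customer)
    return sorted(flagged)


# Hypothetical annual figures per customer.
revenue = {"acme": 50_000, "globex": 12_000, "initech": 8_000}
cloud_cost = {"acme": 9_000, "globex": 6_500, "initech": 1_200}
print(high_cost_customers(revenue, cloud_cost))  # ['globex']
```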

Real case study: How Gaming Software Company Beamable Turned Multi-Tenant Infrastructure Costs Into Per-Customer Metrics

4. Right-sizing and chargebacks for a data team

A large enterprise’s data engineering team relied heavily on GCP BigQuery. However, they lacked clear ownership or accountability.

After adding project- and team-level tagging and using CloudZero for cost allocation, they enabled chargebacks.

The result: more responsible queries, better storage practices, and lower BigQuery spend.
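A chargeback report boils down to grouping billed cost by an ownership label and making unlabeled spend visible rather than hiding it. Below is a minimal sketch over hypothetical billing rows; the field names are illustrative and do not match any provider's actual billing export schema.

```python
from collections import defaultdict


def chargeback_by_label(line_items: list[dict], label_key: str = "team") -> dict[str, float]:
    """Sum cost per label value; items missing the label go to 'unallocated'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = item.get("labels", {}).get(label_key) or "unallocated"
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing rows.
rows = [
    {"cost": 420.0, "labels": {"team": "analytics"}},
    {"cost": 130.0, "labels": {"team": "ml"}},
    {"cost": 75.0, "labels": {}},
    {"cost": 80.0, "labels": {"team": "analytics"}},
]
print(chargeback_by_label(rows))  # {'analytics': 500.0, 'ml': 130.0, 'unallocated': 75.0}
```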


Want to see even more tangible examples of cloud resource management at its finest? Steal the best practices from companies like Shutterstock, Drift, and Remitly on our customer stories page. Drift, for example, saved over $2.4 million.

Next, we’ll look at the key metrics you’ll want to track to support your resource management strategy.

Key Metrics To Monitor For Cloud Resource Efficiency

Effective resource management starts with measuring what matters. Here are the most impactful metrics, organized by technical layer and linked to business outcomes.

1. Resource utilization rates

Understanding how well you’re using your infrastructure helps prevent overprovisioning and underutilization, two major cost drivers.

- CPU and Memory Utilization (for VMs, containers, and functions): Low utilization often signals overprovisioning and room for rightsizing or autoscaling.

- Disk I/O and Network Throughput: This is useful for tuning performance-critical workloads (like streaming and analytics).

- Instance and Container Uptime: Helps identify idle or forgotten resources consuming cost with no value.

Resource utilization rates help your engineers identify underused infrastructure and optimize deployments. They also help finance reduce unnecessary spending.
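Here is a minimal sketch of turning CPU utilization samples into a right-sizing hint. It assumes you already collect periodic CPU percentages for an instance and uses the 95th percentile rather than the average, so short but legitimate peaks aren't ignored. The 20%/80% thresholds and sample values are hypothetical; a real decision should also consider memory, I/O, and network.

```python
import statistics


def p95(samples: list[float]) -> float:
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples, n=20)[-1]


def rightsizing_hint(cpu_samples: list[float], low: float = 20.0, high: float = 80.0) -> str:
    """Suggest 'downsize', 'upsize', or 'keep' from p95 CPU utilization (%)."""
    peak = p95(cpu_samples)
    if peak < low:
        return "downsize"
    if peak > high:
        return "upsize"
    return "keep"


# Hypothetical CPU % samples for an oversized instance.
samples = [12, 15, 9, 18, 11, 14, 10, 16, 13, 17]
print(rightsizing_hint(samples))  # downsize
```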

Interesting read: 5 Ways CloudZero Engineers Found Over $1.7 Million in Infrastructure Savings.

2. Idle or orphaned resource count

Resources like unattached EBS volumes, idle load balancers, or unused static IPs add up quickly and often go unnoticed. Common culprits include:

- Unattached storage volumes

- Dormant Kubernetes namespaces or nodes

- Expired snapshots or backups

Cleaning these up can lead to immediate cost savings without compromising production workloads.
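As an example of the first item, EBS reports volumes that aren't attached to any instance with the state `available`. The sketch below works on plain dictionaries shaped like entries from the EC2 DescribeVolumes response, so it runs offline; in practice you might fetch them with boto3's `describe_volumes` and a `status` filter. The volume IDs and the per-GB-month price are hypothetical.

```python
def orphaned_volumes(volumes: list[dict], price_per_gb_month: float) -> list[tuple[str, float]]:
    """Return (VolumeId, estimated monthly cost) for volumes not attached to any instance."""
    return [
        (v["VolumeId"], v["Size"] * price_per_gb_month)  # Size is in GiB
        for v in volumes
        if v["State"] == "available"  # EBS reports unattached volumes as 'available'
    ]


# Hypothetical inventory and price.
volumes = [
    {"VolumeId": "vol-aaa", "State": "in-use", "Size": 100},
    {"VolumeId": "vol-bbb", "State": "available", "Size": 500},
    {"VolumeId": "vol-ccc", "State": "available", "Size": 50},
]
for volume_id, monthly in orphaned_volumes(volumes, price_per_gb_month=0.08):
    print(f"{volume_id}: ~${monthly:,.2f}/month")
```

Before deleting anything, snapshot or confirm ownership; "unattached" doesn't always mean "unneeded".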

3. Cost per unit metrics

This is where engineering meets finance. Using unit economics unlocks decision-making power at every level.

- Cost per customer

- Cost per product or feature

- Cost per environment (dev, staging, prod)

- Cost per deployment

- Cost per engineering team

Platforms like CloudZero automate the tracking of these metrics, even if you have messy tags. And that means you can answer questions like:

- “Which customers are the most expensive to support?”

- “Are we pricing this feature sustainably?”

- “How will our infrastructure costs change if we scale this team or product line or onboard five more customers in this segment?”

Understanding unit economics supports your SaaS pricing strategy, margin protection, and team-level accountability.

Related read: What Is Cloud Unit Economics Really (And Should You Even Care)?

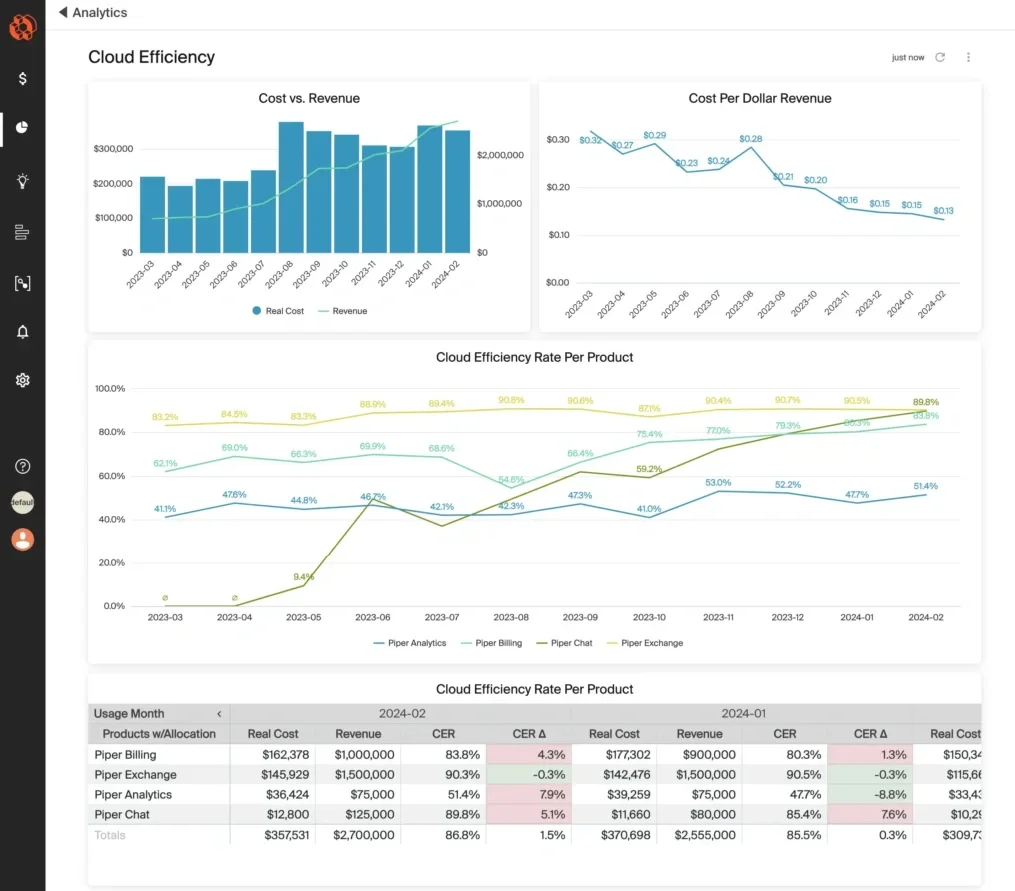

4. Cloud Efficiency Rate (CER)

This metric helps quantify your ROI on cloud spend. It measures how much value (like revenue, traffic, and users) you get for each dollar you spend.

- Often expressed as: Cloud Efficiency Rate = Business Output / Cloud Cost

For example, SaaS companies may track ARR per $1 of cloud spend, or API calls per $100 of infrastructure spend.
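Using the Business Output / Cloud Cost formula from this section, both examples reduce to a single division. The figures below are hypothetical.

```python
def cloud_efficiency_rate(business_output: float, cloud_cost: float) -> float:
    """Business output per dollar of cloud spend (Business Output / Cloud Cost)."""
    if cloud_cost <= 0:
        raise ValueError("cloud_cost must be positive")
    return business_output / cloud_cost


# Hypothetical: $12M ARR on $1.5M annual cloud spend -> $8 of ARR per $1.
print(cloud_efficiency_rate(12_000_000, 1_500_000))  # 8.0
# Hypothetical: 45M API calls on $9,000 of infrastructure, expressed per $100.
print(cloud_efficiency_rate(45_000_000, 9_000) * 100)  # 500000.0
```

Tracking the ratio over time matters more than any single value: a rising CER means output is growing faster than spend.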

The CER helps you connect cloud usage directly to business performance. And that’s crucial intel for CFOs and product leaders.

See: Cloud Efficiency Rate: How To Quantify Cloud-Native Business Value

5. Anomaly and spike detection

Sudden changes in resource usage or spending often signal underlying inefficiencies or incidents. So:

- Track cost anomalies at the resource, team, or service level

- Set alerts for percentage-based cost increases or changes in usage patterns

Early detection prevents runaway costs and ensures your teams can investigate and respond quickly.
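A percentage-based alert can be as simple as comparing today's cost to a trailing average. Here is a minimal sketch, assuming you have daily cost per service; the 10% threshold, 7-day window, and figures (echoing the earlier 12% EC2 example) are hypothetical. Dedicated anomaly detection also accounts for seasonality and expected growth, which this does not.

```python
from statistics import mean


def cost_alerts(daily_costs: dict[str, list[float]], threshold_pct: float = 10.0,
                window: int = 7) -> list[str]:
    """Flag services whose latest daily cost exceeds the trailing average by threshold_pct."""
    alerts = []
    for service, costs in daily_costs.items():
        if len(costs) <= window:
            continue  # not enough history for a baseline
        baseline = mean(costs[-window - 1:-1])  # the `window` days before the latest
        latest = costs[-1]
        if baseline > 0:  # a zero baseline needs a different rule (any new spend)
            change = (latest - baseline) / baseline * 100
            if change > threshold_pct:
                alerts.append(f"{service}: +{change:.0f}% vs {window}-day average")
    return alerts


# Hypothetical daily costs in dollars.
daily = {
    "ec2": [100.0] * 7 + [112.0],
    "lambda": [40.0] * 7 + [41.0],
}
print(cost_alerts(daily))  # ['ec2: +12% vs 7-day average']
```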

6. Tagging and allocation coverage

While tagging isn’t perfect, it’s still foundational for allocating spend accurately. As such, measure:

- Tagging Coverage Rate: This measures the percentage of your spending that is tagged.

- Unallocated Spend: The portion of costs that can’t be attributed to a team, service, or feature.

Advanced cost platforms can help you track untagged spend by analyzing naming conventions and usage patterns, bringing you closer to 100% visibility.

Better allocation = better accountability, budgeting, and cost optimization.
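Both allocation metrics can be computed from cost line items. Here is a minimal sketch, assuming each item carries its cost and a dictionary of tags; the `team` key and the figures are hypothetical.

```python
def allocation_coverage(line_items: list[dict], required_key: str = "team") -> tuple[float, float]:
    """Return (tagging coverage as % of spend, unallocated spend) for one tag key."""
    total = sum(item["cost"] for item in line_items)
    untagged = sum(item["cost"] for item in line_items if not item["tags"].get(required_key))
    coverage = 100.0 * (total - untagged) / total if total else 0.0
    return coverage, untagged


# Hypothetical line items.
items = [
    {"cost": 600.0, "tags": {"team": "platform"}},
    {"cost": 300.0, "tags": {"team": "data"}},
    {"cost": 100.0, "tags": {}},
]
coverage, unallocated = allocation_coverage(items)
print(f"{coverage:.1f}% tagged, ${unallocated:,.2f} unallocated")  # 90.0% tagged, $100.00 unallocated
```

Measuring coverage by spend rather than by resource count keeps attention on the untagged items that actually cost money.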

These metrics help you uncover inefficiencies, drive accountability, and enable smarter cloud decisions. But common challenges may try to get in the way.


Challenges In Cloud Resource Management

Even seasoned teams struggle to manage cloud resources at scale. Here are the most common challenges, why they matter, and how to beat them.

1. Overprovisioning by default

Teams often choose larger instance types “just in case.” This leads to wasted capacity and inflated costs. And without real-time feedback or incentives to rightsize, this becomes the norm.

Engineering overspends without realizing it. Finance sees bloated cloud bills with little cost attribution.

2. Poor tagging hygiene

Tagging is key to visibility. But many organizations lack consistent policies or struggle with enforcement. The result: unallocated costs make it hard to track spend with precision.

3. Siloed engineering and finance workflows

When finance and engineering don’t share the same view of cloud usage and costs, decisions are made in a vacuum.

Finance can’t forecast accurately, and engineering can’t justify infrastructure decisions with business context.

4. Multi-cloud and hybrid complexity

Managing multiple providers or environments creates inconsistent tooling, pricing, and visibility. This makes optimization harder and billing reconciliation nearly impossible without advanced (and often complex) tools.

5. Lack of cost accountability

Without clear cost ownership at the team or product level, optimization takes a backseat to feature delivery. Cloud costs spiral, and leadership loses trust in scaling efficiently.

6. Reactive cost management

If you wait for the bill to spot usage spikes or anomalies, it’s already too late. You’ll miss savings and have to deal with budget surprises.

Here are some key best practices to help you avoid that and build a cost-efficient, high-performing cloud strategy from the inside out.

Best Practices For Effective Cloud Resource Management

Here’s how top-performing teams do it, so you can too.

1. Align resource usage with business KPIs

Don’t manage infrastructure in isolation. Tie cloud resources to business metrics, like cost per customer, cost per deployment, or margin per product line. This bridges the gap between engineering and finance.

2. Automate rightsizing and scaling

Manual provisioning almost always leads to waste. Automate resource scaling based on real-time usage patterns using:

- Autoscaling groups (AWS, Azure, GCP)

- Serverless where appropriate

- Scheduling for non-production environments

Tip: Set policies to shut down unused dev/test resources on weekends or off-hours.
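The scheduling logic behind that tip is small. Here is a minimal sketch of the decision a scheduled job could make before stopping or starting non-production resources. The environment names and working hours are hypothetical, and it uses naive local time, so a real setup should pin a time zone per team.

```python
from datetime import datetime


def should_run(env: str, now: datetime, start_hour: int = 8, end_hour: int = 19) -> bool:
    """Production always runs; non-production only on weekdays during working hours."""
    if env == "prod":
        return True
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    in_hours = start_hour <= now.hour < end_hour
    return is_weekday and in_hours


print(should_run("dev", datetime(2024, 6, 8, 10)))   # False (Saturday)
print(should_run("dev", datetime(2024, 6, 10, 10)))  # True (Monday, 10:00)
print(should_run("dev", datetime(2024, 6, 10, 22)))  # False (after hours)
```

Running dev only 11 hours a day on weekdays means it is up for 55 of the week's 168 hours, roughly a third of the time.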

3. Establish and enforce tagging standards

Create a company-wide tagging policy that covers key dimensions: environment, team, project, customer, etc.

- Use automated policies (like AWS Organizations tag policies or Azure Policy) to enforce compliance.

- Audit regularly to catch gaps and misapplied tags.

Use third-party tooling to supplement tagging, fill attribution gaps, and reduce reliance on manual tagging discipline.
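Regular audits can be scripted against your tagging standard. Below is a hypothetical audit check for a single resource's tags: it normalizes key casing, reports missing required keys, and validates allowed environment values. The required keys and allowed values are placeholders for your own policy; native tools like AWS Organizations tag policies or Azure Policy can enforce similar rules at creation time.

```python
REQUIRED_TAGS = {"environment", "team", "project"}  # hypothetical policy
ALLOWED_ENVIRONMENTS = {"prod", "staging", "dev"}   # hypothetical policy


def tag_violations(resource_tags: dict[str, str]) -> list[str]:
    """Return human-readable problems with one resource's tags."""
    # Normalize keys so 'Team' and 'team' count as the same tag.
    tags = {k.strip().lower(): v.strip() for k, v in resource_tags.items()}
    problems = [f"missing tag: {key}" for key in sorted(REQUIRED_TAGS - set(tags))]
    env = tags.get("environment")
    if env is not None and env.lower() not in ALLOWED_ENVIRONMENTS:
        problems.append(f"invalid environment: {env}")
    return problems


print(tag_violations({"Environment": "Production", "team": "platform"}))
# ['missing tag: project', 'invalid environment: Production']
```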

4. Build engineering cost awareness

Empower engineers to see the cost impact of their architecture and code decisions without needing to dig through billing reports.


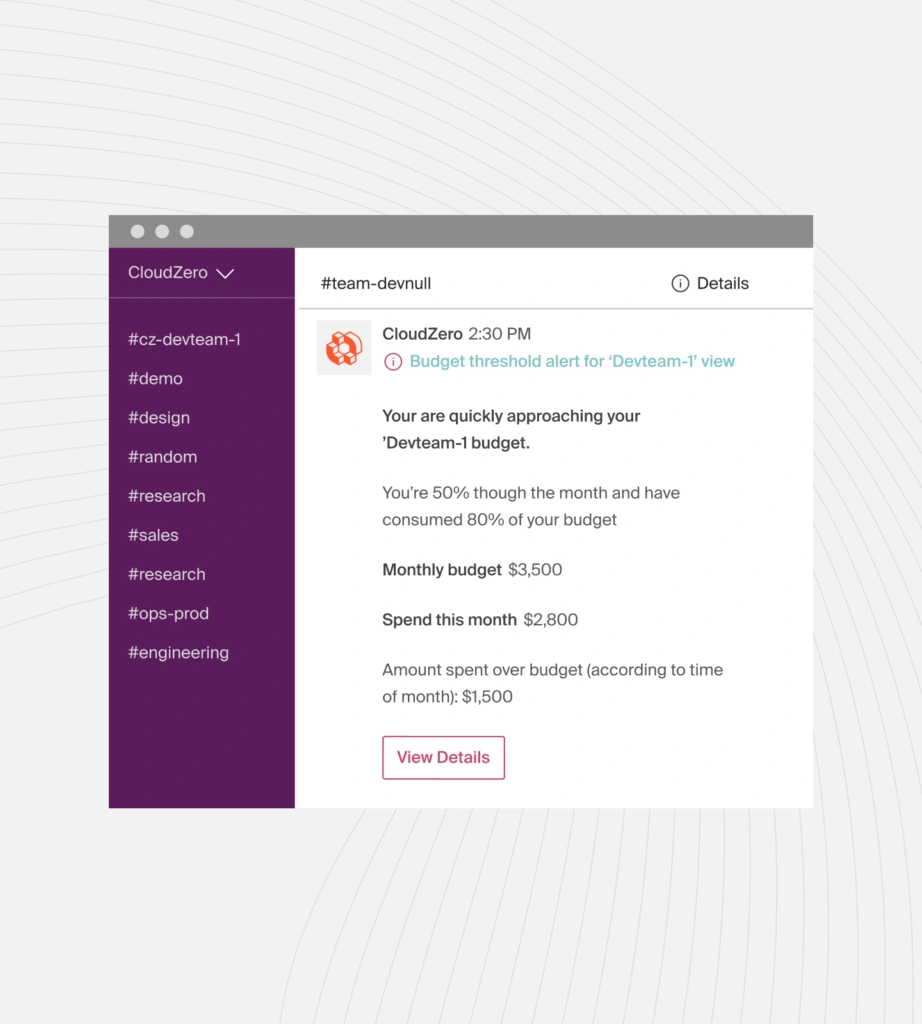

5. Monitor and act on cost anomalies early

Set up proactive alerts to detect unexpected changes in resource usage or spend at the service, team, or feature level. Pair anomaly detection with auto-tagging or filtering by team so the right people are alerted to investigate fast.

6. Review and optimize regularly

Make resource reviews part of your sprint retros or monthly ops check-ins. Look for:

- Underutilized instances

- Orphaned resources

- Surging costs by service or product line

Use a central dashboard to show trends over time and catch creeping inefficiencies before they escalate. Heck, we’ve used our own platform for this and caught over $1.7 million in annualized savings.

So, how do you get there? Make cloud resource management a shared language across finance, engineering, and leadership. You’ll scale without bleeding money.

Here’s where to start.

See What Others Miss And Act Where It Counts

Cloud costs are like shadows. They follow every decision your team makes. But when you can’t see what’s casting them, you’re left chasing surprises instead of steering strategy.

CloudZero flips on the lights.

With our platform, you don’t just see what your cloud costs. You also see why and what to cut without sacrificing performance or engineering velocity.

You can zoom in from total spend to how much a single feature, deployment, or customer is driving your bill.

And you can act on those insights in real time to protect your margins.

Don’t just take our word for it. Leading teams at Duolingo, Moody’s, Grammarly, and more trust this cloud cost intelligence approach. And we just helped Drift save $2.4 million and Upstart slash over $20 million. They acted on clarity.

Ready to stop chasing cloud costs and start leading with insight? See what happens when every cloud dollar has a purpose.