AI should speed up innovation, not inflate your cloud bill. But today, the biggest GenAI challenge for SaaS teams isn’t model quality; it’s cost. And increasingly, that cost comes from AI cost sprawl.

That’s not because anyone is doing something wrong, but because AI operates differently from the cloud services we’ve all spent a decade learning how to manage.

AI features and workflows are harder to meter, easier to overuse, and nearly impossible to govern without the right level of cost intelligence.

So, in the next few minutes, we’ll break down what’s driving AI cost sprawl, how it hides inside your cloud bill, and how leading SaaS teams are gaining cost control — without slowing experimentation or engineering velocity.

After all, the teams that master AI cost management today will build the most profitable AI capabilities tomorrow.

Read more on AI costs: AI Costs In 2025: A Guide To Pricing, Implementation, And Mistakes To Avoid

Understanding The AI Cost Crisis: Why AI Cost Sprawl Happens And Why It’s So Expensive

If it feels like your AI cloud costs are growing faster than your understanding of them, you’re not imagining it.

A recent study by Vanson Bourne (for Tangoe) found that cloud costs have increased by an average of 30% due to AI-related technologies, and 72% of leaders say their cloud spending is becoming increasingly unmanageable.

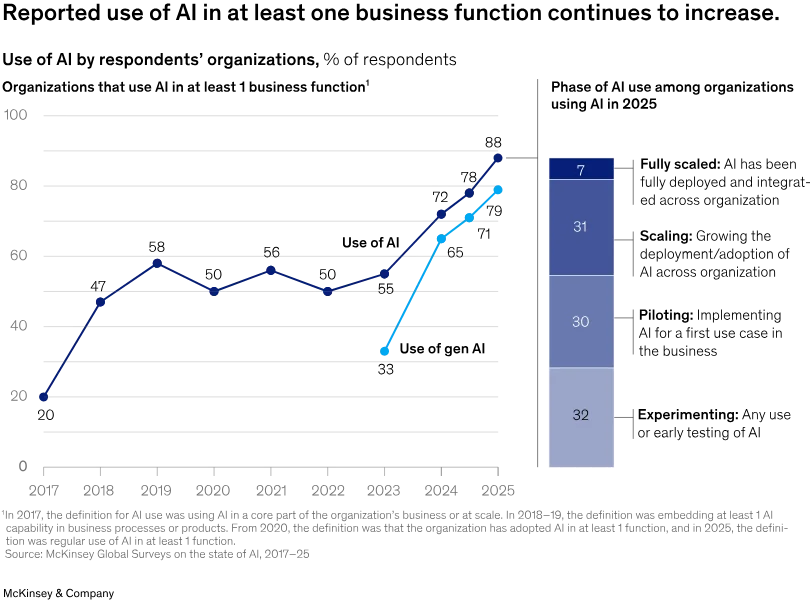

Adoption is also sprawling:

Image credit: McKinsey & Co’s State of AI

On a macro scale, Gartner forecasts nearly $2 trillion in global AI spending in 2026, with more to come.

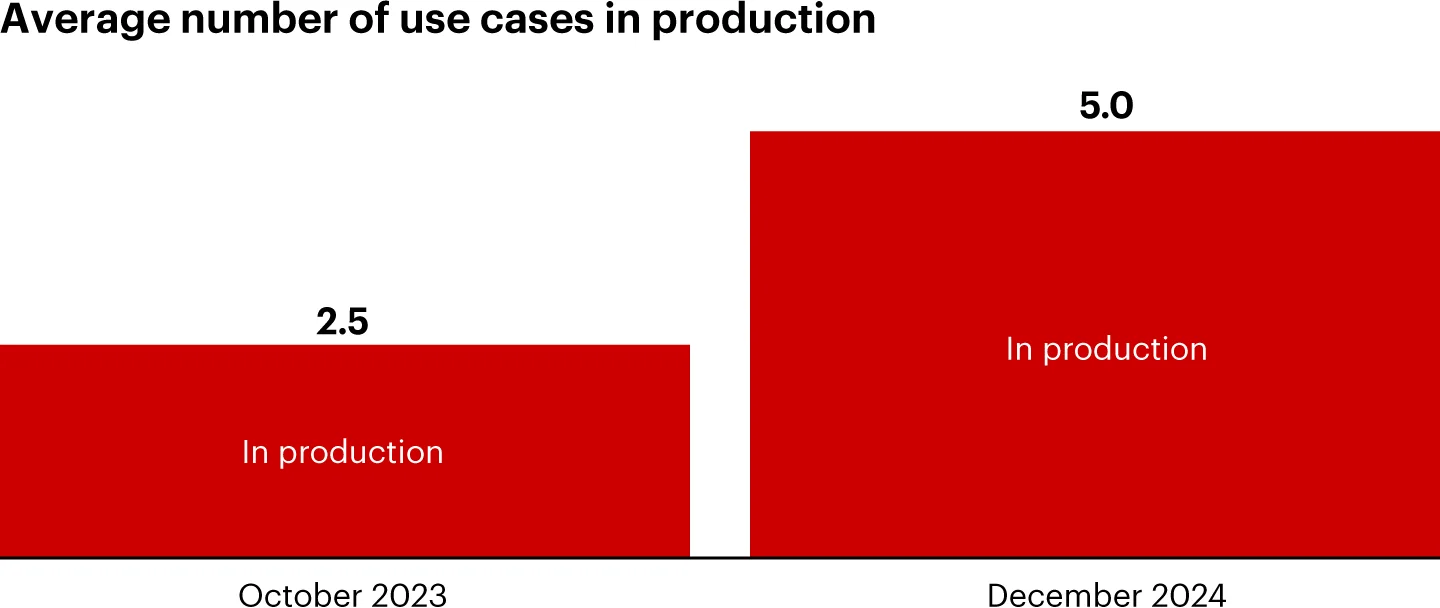

Zoom in from the macro to the team level, and CloudZero’s State of AI Costs report is even more revealing:

- Average monthly AI spend jumped from about $63,000 in 2024 to $85,500 in 2025, a 36% year-over-year increase.

- The share of companies planning to spend over $100,000 per month on AI tools and infrastructure more than doubled, from 20% to 45%.

That’s real money. And for most SaaS orgs, those AI cloud costs are landing on the same P&L as everything else that affects gross margin.

AI costs are rising faster than cost visibility, too

AI spend ramping up that quickly wouldn’t be a problem if companies had tight cost controls, clear AI ROI, and strong cloud budget control.

From the same CloudZero report, we see something alarming instead:

- Only 51% of organizations strongly agree they can accurately track AI ROI, even though 91% feel confident in their ability to evaluate it.

- 57% still rely on spreadsheets to track AI costs.

- 15% admit they have no formal AI cost-tracking system at all.

Clearly, AI adoption is racing ahead, but AI cost governance and FinOps maturity are lagging.

Image: State of AI Cost Report

External research paints the same picture. Bain reports that generative AI budgets have roughly doubled since early 2024, now averaging about $10M annually for larger enterprises.

Part of the problem is not recognizing what AI cost sprawl looks like.

The AI cost crisis in a nutshell:

AI cloud costs are rising sharply. Cost tracking is immature and fragmented. And traditional cloud budget control tools weren’t built for token-based, model-centric, multi-provider AI workloads.

What AI Cost Sprawl Looks Like Today Across Different Teams in SaaS

At a high level, sprawl shows up as:

- Too many models and AI vendors doing similar jobs

- Fragmented AI usage across teams and tools

- No single place to see who is using what, how much, and for which customer or feature.

Now let’s see it from the perspective of each of your core stakeholders.

1. AI cost crisis for engineering teams

Picture this:

Your core product team ships a new “AI assistant” inside your app. They start with OpenAI for chat. Add Anthropic for summarization because “it sounds more human”.

Introduce Gemini for code or document analysis because someone on the team is crazy about Google’s ecosystem. Pipe everything through a vector DB and an embedding API. Plus a couple of “just-testing” features running on a GPU-heavy cluster in your primary cloud.

None of this is malicious or careless. It’s smart people moving fast.

But that is also how AI cost sprawl creeps in:

- Redundant capabilities: multiple LLMs doing nearly identical tasks, each with its own pricing, rate limits, and quirks.

- No shared playbook: every squad chooses its own models, prompts, and token limits.

- Zero unit economics: no one can answer “what’s the cost per conversation, per deployment, or per user?”

From the engineering side, it feels like progress. But from the cloud bill (and finance/FinOps) side, this is classic AI cost sprawl:

- Fragmented AI charges across three or four providers

- Uncapped token usage in a few high-traffic workflows

- Long-running GPU workloads left idling in dev or staging

Practitioners in the FinOps Foundation’s Cost Estimation of AI Workloads paper flag exactly this scenario. It’s easy for teams to move from prototype to production without updating their cost assumptions, leading to surprise bills when volume hits.

What engineers actually lose:

- Time debugging why certain workloads are suddenly throttled or too expensive

- Freedom to experiment when finance starts pushing back on anything “AI”

- Trust that they can build with AI without getting blamed for surprise overages

2. AI cost crisis for FinOps and finance teams

Now picture this other scenario:

Your FP&A team is closing the month. Cloud spend is up 25%. And your GenAI line items are scattered across:

- Your primary cloud provider (GPU instances, managed AI services)

- Multiple third-party AI APIs

- New SaaS tools with “AI add-ons” priced per user or per token

Your AI-related costs show up as:

- “Other AI services”

- “ML platform usage”

- “API consumption”, and

- A mysterious spike in egress and storage.

This is not an imaginary scenario; it’s the financial side of AI cost sprawl. Capgemini reports that 68% of executives said their companies overspent on GenAI in particular, often because the costs were decentralized, poorly governed, and hard to see.

In practice, this is what that feels like to finance, FinOps, and FP&A practitioners:

- They can’t tie AI cloud costs back to teams, features, or customers

- FinOps is stuck reverse-engineering invoices and usage reports

- Budget conversations devolve into “we should slow down AI” rather than “we should double down where AI is profitable.”

The FinOps Foundation’s work on optimizing GenAI usage calls out exactly this risk: without clear allocation and usage visibility, organizations “overspend and underdeliver” on GenAI because they can’t connect costs to value.

What FinOps/Finance actually end up losing is:

- Forecasting accuracy

- Credibility when they bring AI spend to the C-Suite table

- The ability to partner with engineering, instead of policing (a.k.a. blaming) them.

3. The AI cost crisis for CTOs, CIOs, and SaaS leadership

Your leadership rallies around AI as a strategic pillar. You announce AI features to the market. Sales teams lead with AI-powered capabilities. Product roadmaps are packed with “AI assistant,” “AI insights,” and “AI automation.”

On the surface, it looks great. Usage is up. Product velocity is high. Your customers are impressed.

But three quarters in, finance brings you a slide that’ll make you lose sleep for the next couple of weeks:

- AI-related cloud costs have grown faster than AI-related revenue

- Gross margin has sneakily dipped a few points

- Most of your AI costs are sitting in “shared infrastructure” and can’t be cleanly allocated to specific features or individual customers.

This is happening. Okoone’s summary of 2025 IT spending notes that AI now dominates IT priorities even as leaders face “soaring cloud costs, risky generative projects, and pressure from tech giants.”

What leadership stands to lose to AI cost sprawl and uncontrolled AI spending:

- Confidence that AI is profitable innovation, not a gamble or an enabler of shiny object syndrome

- The ability to defend AI investments to the board with hard numbers

- The freedom to make targeted optimizations instead of being forced to cut costs indiscriminately. Check out this guide to cloud cost optimization vs. cost cutting to see the difference.

Why AI Cost Sprawl Undermines Innovation

Every dollar spent on redundant models, duplicate tools, or ungoverned inference — all hallmarks of AI cost sprawl — is a dollar you can’t spend on:

- Shipping new features

- Scaling profitable AI workflows

- Improving infrastructure performance

- Funding engineering headcount

- Investing in strategic R&D.

Beyond the invoices and dashboards, AI cost sprawl shows up in behavior and weakens your company culture:

- Engineers start to worry that every experiment is a potential budget problem.

- Product managers increasingly hesitate to ship new AI features without knowing if they’re affordable at scale.

- Finance and FP&A become more conservative, tightening budgets to “get things under control.”

- Executives see AI as both strategic and risky, which can stall bolder investments (and ROI).

Instead of AI unlocking innovation, AI cost sprawl erodes your margins, injects fear into decision-making, and slows down the very experimentation you invested in AI to accelerate.

Let’s change that.

Here’s How To Prevent AI Cost Sprawl Before It Even Starts (AI Cost Control Framework)

Consider and apply these practical strategies high-performing SaaS teams use to prevent AI cost sprawl.

1. Start with a unified AI cost model (the foundation of preventing AI cost sprawl)

It’s a tough day trying to prevent sprawl if your AI spend is a blurry slice of your general cloud bill. So, first, you need a single, consistent way to see your AI costs:

- By provider. Think of OpenAI, Anthropic, Gemini, Bedrock, Vertex, etc.

- By model, such as GPT-4.1, Claude 3.5 Sonnet, or Gemini 1.5 Flash

- By feature or product (AI summarizer, chat assistant, code copilot)

- By team or BU, and ultimately

- By customer, segment, or unit of work, like ticket, document, session, etc.

In fact, the FinOps Foundation’s GenAI optimization guidance explicitly recommends modeling both inference and training costs by use case and model size.

Seem like an impossible task? Try CloudZero’s AI cost intelligence.

CloudZero ingests cost data from your apps, infra, and more sources, enriches it, and delivers an accurate, easy-to-digest view of your AI spend, down to cost per AI model, per SDLC stage, per AI service, and beyond. Automatically, once you’ve set it up (with one of our Certified FinOps Practitioners, if you want).

Take your quick tour of CloudZero here to see it yourself, or try it hands-on.

This approach helps you create dedicated AI cost centers. It also empowers you to implement chargeback/showback for AI services, and standardize tagging and naming so your AI resources are traceable.

In practice, this means:

- Define a minimum tagging schema for anything AI-related:

ai=true, model_family=, feature=, team=, env=, customer_impact=

- Group AI spend into FOCUS-style scopes (think “GenAI APIs,” “ML training,” “vector search”) instead of leaving it buried in “Other compute.”

- Start tracking simple unit metrics like cost per 1,000 tokens, cost per call, or cost per user session for each AI-heavy feature.
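To make the tagging schema and unit metrics above concrete, here is a minimal Python sketch. It assumes you can export AI resources and billing lines into simple records; the `UsageRecord` shape, the sample numbers, and the helper names are hypothetical illustrations, not any platform’s API.

```python
from dataclasses import dataclass

# Required tag keys, taken from the minimum schema listed above.
REQUIRED_TAGS = ("ai", "model_family", "feature", "team", "env", "customer_impact")


def missing_tags(tags: dict[str, str]) -> list[str]:
    """Return the required tag keys that are absent or empty on a resource."""
    return [key for key in REQUIRED_TAGS if not tags.get(key)]


@dataclass
class UsageRecord:
    """One aggregated billing line for an AI-heavy feature (hypothetical shape)."""
    feature: str
    cost_usd: float
    tokens: int
    calls: int


def cost_per_1k_tokens(record: UsageRecord) -> float:
    """Spend divided by token volume, scaled to 1,000 tokens."""
    return record.cost_usd / record.tokens * 1000


def cost_per_call(record: UsageRecord) -> float:
    """Spend divided by the number of API calls."""
    return record.cost_usd / record.calls


# Made-up sample data for illustration only.
record = UsageRecord(feature="chat-assistant", cost_usd=120.0, tokens=4_000_000, calls=8_000)
print(missing_tags({"ai": "true", "feature": "chat-assistant", "team": "support"}))
print(round(cost_per_1k_tokens(record), 4), round(cost_per_call(record), 4))
```

A check like `missing_tags` could run in CI or a nightly audit so untagged AI resources get flagged before they disappear into the general cloud bill.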

Once your AI is visible in a unified cost model, decisions stop being “AI is expensive” and start being “this feature on this model costs X per user. Is that worth it?”

2. Standardize AI architecture and model usage to reduce AI cost sprawl

The fastest way to create sprawl is to let every team pick any model, any vendor, and any stack. Anywhere. Anytime.

The FinOps Foundation’s GenAI paper emphasizes that model size and complexity should match the use case. It also suggests routing simple tasks to smaller, cheaper models while reserving large models for complex reasoning.

You don’t need to centralize everything, but you do need guardrails, such as:

- An approved model catalog with small, medium, or large options per use case

- Clear guidance on when to use pre-trained APIs vs fine-tuned models vs fully custom training

- A default vector DB and orchestration stack, instead of five different ones

Practically, that looks like this:

- Create a model tiering policy:

  - Tier 1: high-volume, low-complexity (use small/medium models by default)

  - Tier 2: medium-volume, moderate complexity

  - Tier 3: low-volume, high-complexity (allowed to use “expensive” frontier models)

- Standardize vector and orchestration choices

  Pick one or two vector databases and orchestration frameworks (such as LangChain or Temporal) and discourage ad hoc alternatives unless justified.

- Maintain a central “model garden” internally

  For each model, document the supported use cases, latency, quality notes, and cost per 1K tokens (or similar). Also, give your engineers an easy way to compare “Sonnet vs GPT-4 vs Gemini Flash” on both quality and cost. CloudZero helps you see this in a single pane of glass.

Takeaway: This approach alone can help you cut a surprising amount of AI cost sprawl by avoiding duplicate stacks and inconsistent, overly expensive model choices.
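As a rough illustration of the tiering policy described in this section, the Python sketch below maps use cases to tiers and tiers to an approved model. The model names, prices, and use-case labels are placeholders made up for the example; substitute your own catalog.

```python
from enum import Enum


class Tier(Enum):
    TIER_1 = "high-volume, low-complexity"
    TIER_2 = "medium-volume, moderate complexity"
    TIER_3 = "low-volume, high-complexity"


# Hypothetical catalog: placeholder model names and made-up prices.
MODEL_CATALOG = {
    Tier.TIER_1: {"model": "small-model", "usd_per_1k_tokens": 0.0005},
    Tier.TIER_2: {"model": "medium-model", "usd_per_1k_tokens": 0.003},
    Tier.TIER_3: {"model": "frontier-model", "usd_per_1k_tokens": 0.015},
}

# Hypothetical mapping from use case to tier, owned by a platform team.
USE_CASE_TIERS = {
    "ticket-classification": Tier.TIER_1,
    "document-summary": Tier.TIER_2,
    "contract-analysis": Tier.TIER_3,
}


def pick_model(use_case: str) -> str:
    """Return the approved model for a use case, defaulting to the cheapest tier."""
    tier = USE_CASE_TIERS.get(use_case, Tier.TIER_1)
    return MODEL_CATALOG[tier]["model"]


def estimate_cost(use_case: str, tokens: int) -> float:
    """Estimate spend in USD for a token volume at the use case's tier price."""
    tier = USE_CASE_TIERS.get(use_case, Tier.TIER_1)
    return tokens / 1000 * MODEL_CATALOG[tier]["usd_per_1k_tokens"]


print(pick_model("ticket-classification"), pick_model("contract-analysis"))
```

Defaulting unknown use cases to Tier 1 is one possible design choice: teams then have to justify moving up to a more expensive model rather than starting there.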

3. Use responsible AI experimentation frameworks

You’ll need something like an “AI sandbox”. Safe. Budgeted. Observable.

Regulatory and governance thinking is going in the same direction. The EU AI Act, for example, introduces AI sandboxes, which are controlled environments to experiment safely, while keeping compliance and oversight in place.

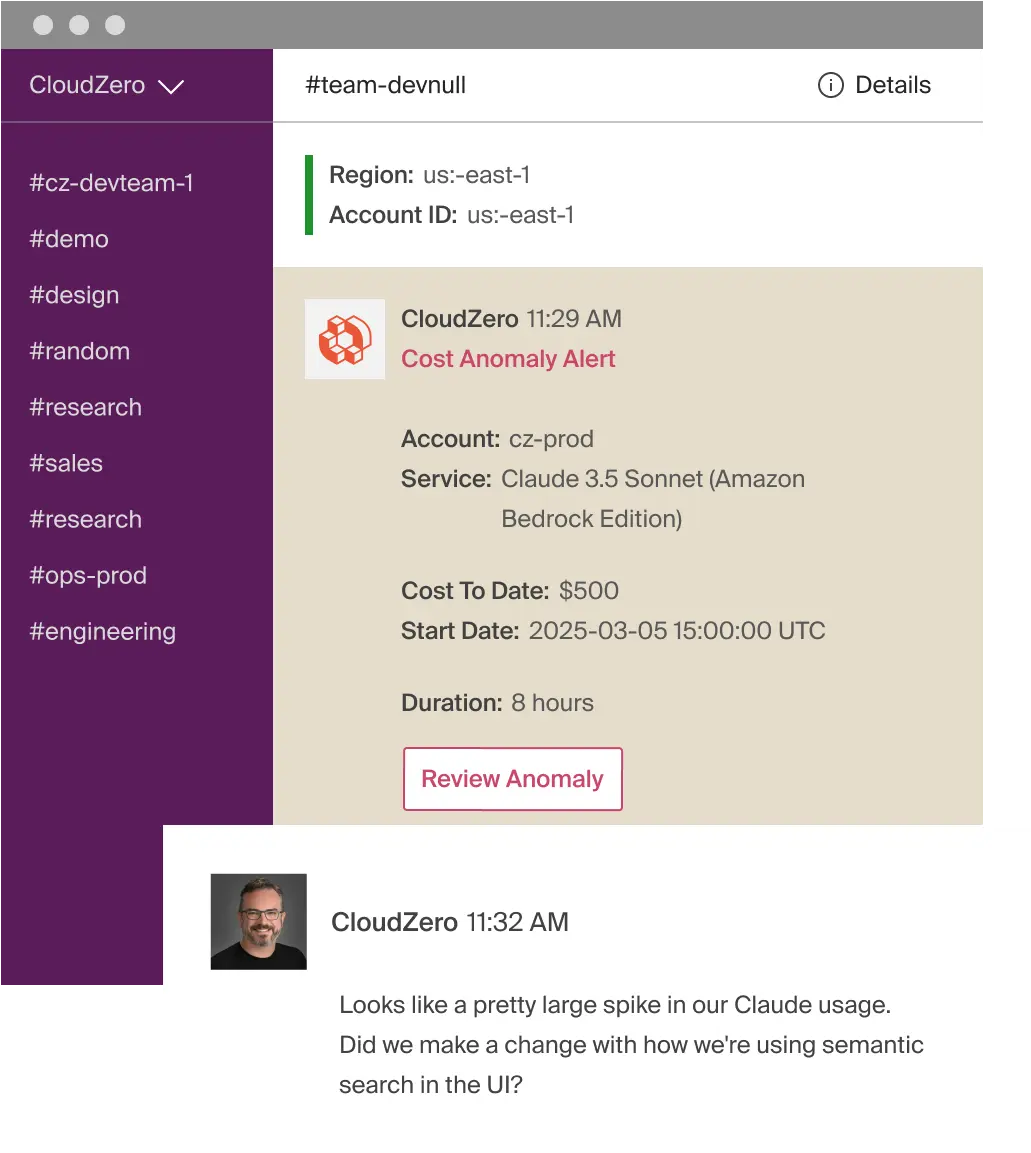

AWS has gone further and published a proactive AI cost management system for Amazon Bedrock that uses token usage metrics plus budget limits to rate-limit requests before they exceed predefined thresholds.

Practically, a good experimentation framework includes:

- Dedicated “experiment” environments

  - Separate accounts/projects/subscriptions for AI R&D

  - Lower default quotas and strict usage limits

- Automatic cleanup and shutdown policies

  - Tear down idle GPU clusters

  - Auto-shutdown dev/staging at night/weekends

  - Automatically expire experiment resources after X days if not renewed

- Token-aware UX and rate limiting for GenAI

  - Limit the max input/output tokens per call

  - Show prompt previews so users understand the cost implications

  - Use caching for common or repetitive queries

- Hard and soft budgets per experiment

  For example, “This prototype gets $2K this month, soft limit at $1.5K with alerts, and hard limit at $2K.”
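The soft/hard budget idea and the per-call token cap can be sketched in a few lines of Python. This is an illustrative in-memory guard, not the AWS Bedrock system mentioned above; the class name, the three-way `allow`/`alert`/`block` result, and the prices are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ExperimentBudget:
    """Tracks spend for one experiment against soft and hard limits (hypothetical)."""
    soft_limit_usd: float
    hard_limit_usd: float
    max_tokens_per_call: int
    spent_usd: float = 0.0

    def check(self, tokens: int, usd_per_1k_tokens: float) -> str:
        """Return "allow", "alert", or "block" for a proposed call.

        Allowed and alerted calls are added to the running spend;
        blocked calls leave it unchanged.
        """
        if tokens > self.max_tokens_per_call:
            return "block"  # call exceeds the per-call token cap
        projected = self.spent_usd + tokens / 1000 * usd_per_1k_tokens
        if projected > self.hard_limit_usd:
            return "block"  # would push spend past the hard limit
        self.spent_usd = projected
        return "alert" if projected >= self.soft_limit_usd else "allow"


# Mirrors the example above: $2K hard limit, alerts from $1.5K.
budget = ExperimentBudget(soft_limit_usd=1500.0, hard_limit_usd=2000.0, max_tokens_per_call=4000)
print(budget.check(tokens=1000, usd_per_1k_tokens=0.01))
```

In a real setup the running spend would come from provider usage metrics or billing exports rather than an in-process counter, and an `alert` result would trigger a notification instead of a return value.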

Using native tools can also help (AWS Budgets, GCP Billing, Azure Cost Management), but for powerful anomaly detection, use dedicated FinOps platforms like CloudZero.

Take CloudZero Advisor, for example.

Advisor is a conversational AI assistant powered by CloudZero Intelligence and now embedded in CloudZero’s core platform. You can ask complex questions in natural language and get instant, clear answers about your cloud and AI spending without leaving the platform.

Takeaway: Do this, and you’ll end up with a system where your engineers can still spin up new ideas quickly, without a single experiment racking up a six-figure bill behind your back.

4. Improve procurement and vendor governance for AI

AI cost sprawl is often vendor sprawl in disguise. Multiple LLM providers, multiple AI SaaS tools with per-user AI add-ons, overlapping ML platforms — all with separate contracts and pricing tiers.

Add these strategies to your play:

- Create dedicated AI cost centers and budgets. Separate line items for “core cloud,” “AI/ML infrastructure,” and “GenAI APIs.” This forces conversations about AI spend rather than letting it hide inside “compute.”

- Consolidate where possible. If three teams are using three different “AI copilot” SaaS tools, rationalize them. And if multiple teams are hitting multiple LLM APIs for similar tasks, standardize on a short list.

- Negotiate usage-based and committed discounts. Use historical and forecasted token usage to negotiate better rates or enterprise plans, the same way you do with cloud.

- Right-size AI infrastructure. Not every workload needs A100/H100–class GPUs. Many can run on cheaper instances or a CPU with small models.

- Include cost guardrails in vendor selection. Ask, “Does this provider support usage alerts, detailed metering, cost allocation by key, etc.?” And, “Do they provide granular usage logs, so we can map our spend to specific features and customers?”

Takeaway: The goal here is to stop your AI stack from turning into a junk drawer of overlapping tools you barely use.

5. Establish cross-functional AI cost ownership

For AI, a simple ownership model looks like this:

- Engineering owns usage efficiency: prompt quality, token limits, model selection, caching, compute right-sizing.

- FinOps / Cloud Cost owns visibility and allocation: tagging standards, dashboards, anomaly detection, cost runbooks.

- Product Management owns feature-level value: deciding if a feature’s AI cost is justified by its impact on retention, growth, or revenue.

- Finance / FP&A owns forecasting and budgeting: integrating AI cost curves into financial models and ensuring AI spend aligns with company goals.

- CTO / CIO / CPO own leadership of tradeoffs, like which AI initiatives get funding, which models are approved, and what “profitable AI” means in practice.

Takeaway: The key is that each AI initiative has both a technical owner and a business owner, and both see the same cost and value data. Then they can align.

6. Shift from “surprise” bills to predictive AI cost forecasting

Once your AI costs are visible and owned, you can move from “we’ll see what the bill is” to predictable, scenario-based planning.

Practically, you’ll want to:

- Build simple cost-per-X calculators for each AI feature. X here could be “request,” “document processed,” “test run,” “support ticket,” “code review,” and so on.

- Run traffic scenarios. Like, “What happens to inference cost if this feature reaches 10K, 100K, 1M MAUs?”

- Feed those projections into FP&A models so AI initiatives have the same rigor as any capital project.

- Use anomaly detection and alerts (CloudZero delivers real-time anomaly detection) to catch deviations early.
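A cost-per-X calculator and a traffic scenario run can be as simple as the Python sketch below. The token counts, per-token prices, and requests-per-user figure are made-up assumptions; plug in your own measured values.

```python
def cost_per_request(
    input_tokens: int,
    output_tokens: int,
    usd_per_1k_input: float,
    usd_per_1k_output: float,
) -> float:
    """Unit cost of one request, pricing input and output tokens separately."""
    return (
        input_tokens / 1000 * usd_per_1k_input
        + output_tokens / 1000 * usd_per_1k_output
    )


def monthly_inference_cost(maus: int, requests_per_user: float, unit_cost: float) -> float:
    """Projected monthly inference spend for a given number of monthly active users."""
    return maus * requests_per_user * unit_cost


# Hypothetical feature: 1,500 input and 500 output tokens per request.
unit = cost_per_request(1500, 500, usd_per_1k_input=0.003, usd_per_1k_output=0.015)

# Scenario run for the MAU levels mentioned above, at 20 requests per user per month.
for maus in (10_000, 100_000, 1_000_000):
    print(f"{maus:>9,} MAUs -> ${monthly_inference_cost(maus, 20, unit):,.2f}/month")
```

Because this model is linear, it will understate or overstate costs wherever pricing tiers, caching, or committed discounts kick in, so treat it as a first-order estimate to hand to FP&A.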

Takeaway: Your forecasting doesn’t have to be perfect. It needs to be good enough that you rarely say, “We had no idea it could get this expensive.”

7. Make cost-to-value tradeoffs a Day-One product conversation

The last piece is cultural, and it’s where AI cost sprawl becomes preventable. AI feature ideas usually start with:

“Wouldn’t it be cool if…?”

“Our competitors just launched X…”

Now that you are implementing FinOps for AI, you also need to start with:

- “What’s the expected impact?”

- “What’s the expected unit cost?”

- “Is this feature profitable at scale?”

As a daily habit, this means that your AI feature proposals need to include:

- Target users and business outcome

- Expected request volume (a rough order of magnitude is fine)

- Estimated cost per unit and margin impact

- A plan for monitoring usage and spend

The folks at Product can review not just “Can we build this?” but also:

- “Is this AI model overkill for this use case?”

- “Is there a simpler, cheaper architecture that still delivers enough value?”

When features are live, your PMs and engineering leaders will want to regularly review:

- Cost per customer or per segment vs. revenue or value metrics

- Opportunities to downshift to cheaper models, add caching, or adjust UX to reduce waste
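For the cost-versus-revenue review, a small script can flag customers whose AI costs eat too much of their revenue. The sketch below is illustrative only: the customer names, figures, and the 70% margin threshold are hypothetical, and the margin counts AI cost alone rather than full COGS.

```python
def ai_gross_margin(revenue_usd: float, ai_cost_usd: float) -> float:
    """Share of revenue left after AI costs only (not a full gross margin)."""
    if revenue_usd <= 0:
        raise ValueError("revenue_usd must be positive")
    return (revenue_usd - ai_cost_usd) / revenue_usd


def flag_low_margin(customers: dict[str, tuple[float, float]], min_margin: float) -> list[str]:
    """Return customers, sorted by name, whose AI-only margin is below the threshold."""
    return sorted(
        name
        for name, (revenue, ai_cost) in customers.items()
        if ai_gross_margin(revenue, ai_cost) < min_margin
    )


# Hypothetical monthly (revenue, AI cost) per customer in USD.
customers = {
    "acme": (5000.0, 1200.0),
    "globex": (800.0, 950.0),
}
print(flag_low_margin(customers, min_margin=0.7))
```

Customers that show up in this list are candidates for a cheaper model tier, caching, or a pricing conversation.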

That’s how you prevent AI cost sprawl from ever taking root. And you don’t have to build a whole new system, or stack, to achieve this on a regular basis.

Turn That AI Cost Crisis Into Your Competitive Advantage

The truth is, preventing AI cost sprawl isn’t just about good governance or engineering discipline. It also requires real-time visibility into how your AI spend behaves, across models, providers, teams, features, and customers.

You want your engineers to experiment freely.

You want your product team to ship AI features with conviction.

You want finance to forecast accurately without being the department of “no.”

You get all three when your AI costs are 100% allocated, visible, and tied to value.

Traditional cost tools simply weren’t built for this moment. Most don’t support:

- Token-level metering

- Multi-model routing

- API-based AI billing

- Blended GPU + LLM + vector DB architectures

- Per-feature and per-customer unit economics

- Real-time anomaly detection at the model or call pattern level

That’s why AI cost sprawl feels chaotic inside most organizations. The tooling just hasn’t caught up to the workloads.

With CloudZero, you get one of the first platforms designed specifically to make AI cost intelligence visible, trustworthy, and actionable — at the level modern teams actually need.

Don’t take our word for it. Try CloudZero yourself, completely risk-free. You’ll see why innovators like Duolingo, Grammarly, and Skyscanner trust us with over $15B in cloud spend under management. (And how we just helped PicPay save $18.6M in recent months.)

AI Cost Crisis FAQs

Why is AI suddenly so expensive? (AI cost sprawl explained)

GenAI workloads behave like consumption-based compute: every message, document, or query triggers inference. As usage scales, costs rise instantly. Research shows AI budgets doubling across enterprises, with many overspending due to poor visibility and fragmented governance.

Resource: CloudZero State of AI Costs Report

Why can’t traditional cloud cost tools manage AI spend?

Legacy tools weren’t built for token metering, multi-model routing, per-feature AI costs, or blended GPU + LLM + vector DB architectures. They lack the granularity needed for cost-per-token, per-request, and customer-unit economics.

Why does finance struggle to track AI costs?

Finance sees AI charges buried in “other compute,” API line items, vector search, egress, and SaaS AI add-ons. Without allocation by team, feature, or customer, finance can’t tie spend to value, and forecasting becomes unreliable.

How does AI cost sprawl affect gross margin? (AI cost + SaaS economics)

AI costs often grow faster than AI revenue. When high-volume workflows use expensive models or uncapped tokens, margins compress quietly. Without visibility, companies can’t see which features or customers drive AI COGS.

How do leading organizations prevent AI cost sprawl? (AI FinOps best practices)

High performers build a unified AI cost model, enforce model tiering, consolidate vendors, standardize vector DBs/orchestration, and create budgeted AI sandboxes. They also use automated anomaly detection and predictive forecasting.

Why do AI initiatives need shared ownership?

Engineering controls usage efficiency, product evaluates value, finance forecasts cost curves, and FinOps provides allocation and anomaly detection. When costs and value sit in one shared view, teams stop guessing and start aligning.

Read more: From FinOps For AI To AI-Native FinOps.