If cost optimization is your only reason for adopting Kubernetes and containers, you might be in for a rude surprise — many companies find that costs increase after moving to Kubernetes.

Even companies who adopt Kubernetes for other reasons, like time-to-market advantages, should follow basic cost control best practices to stay within the budget.

Optimizing cloud costs related to running Kubernetes doesn’t have to involve trade-offs for performance or availability. The key to following Kubernetes cost optimization best practices is to get visibility into how you are using cloud resources and reduce waste.

In most cases, organizations can reduce costs substantially before they have to think about making trade-offs.

Only some Kubernetes-related best practices directly relate to technology choices and architectural decisions. Cultural and organizational decisions, like how you talk about costs, integrate cost management into your workflow, and tackle cost issues, can be just as important for continued success.

What Is Kubernetes Cost Optimization?

Kubernetes cost optimization is the practice of managing and reducing the cost of running applications on Kubernetes clusters. It involves using resources like CPU, memory, and storage efficiently to avoid overprovisioning, which leads to unnecessary costs.

Approaches such as right-sizing workloads, using cost-effective instances like spot instances, and autoscaling help ensure you only pay for what you need.

Here are more reasons why Kubernetes cost optimization matters:

- Supports scalability by allowing infrastructure to adapt to changing demands

- Promotes financial transparency through its detailed visibility into spending

- Helps in planning and allocating budgets more accurately by predicting future costs

- Identifies and eliminates unused or underutilized resources to reduce waste

- Enhances performance by offering sufficient resources for optimal application performance

- Ensures that Kubernetes spending aligns with business goals

Non-Architectural Best Practices For Kubernetes Cost Optimization

1. Get deep visibility

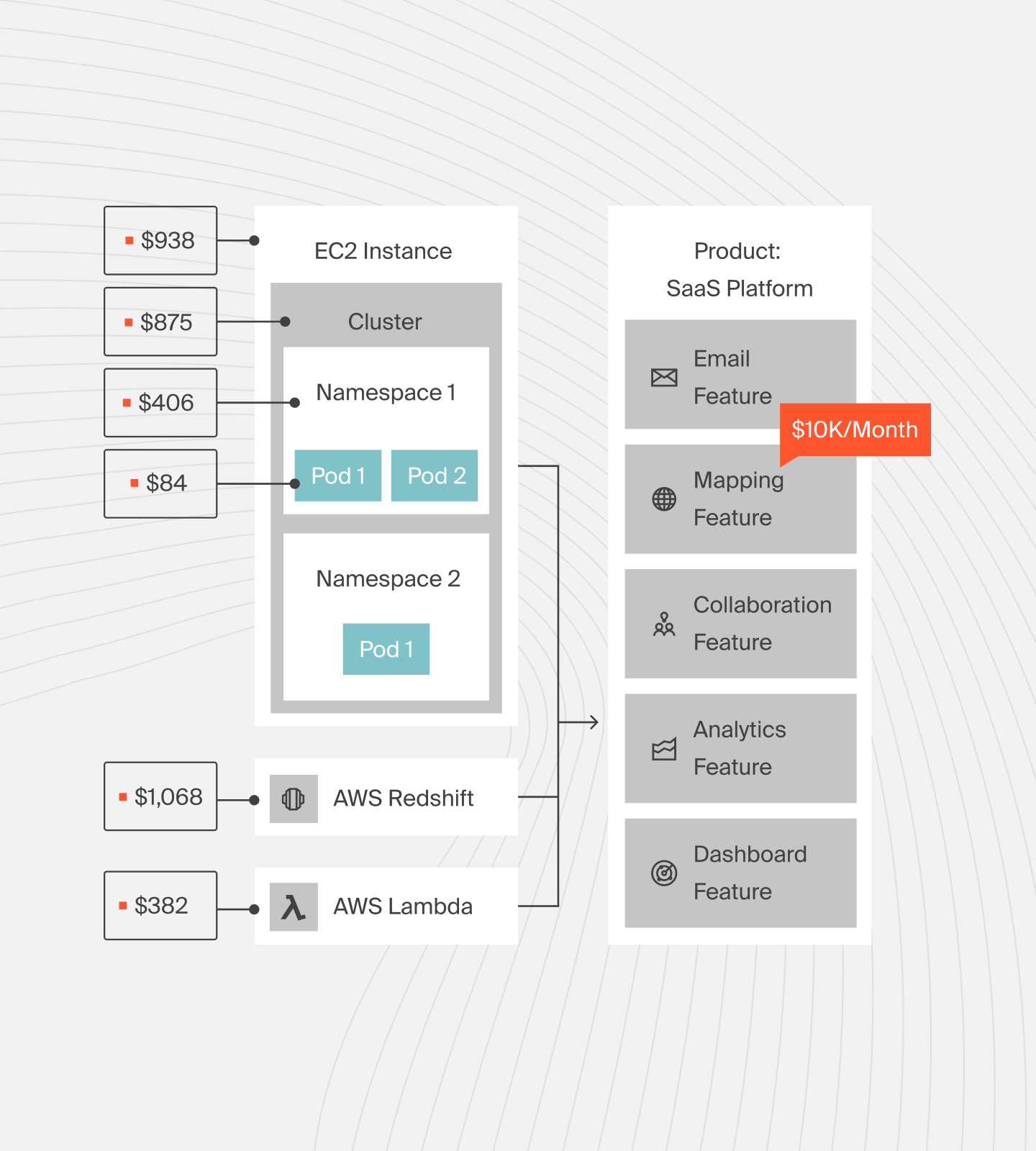

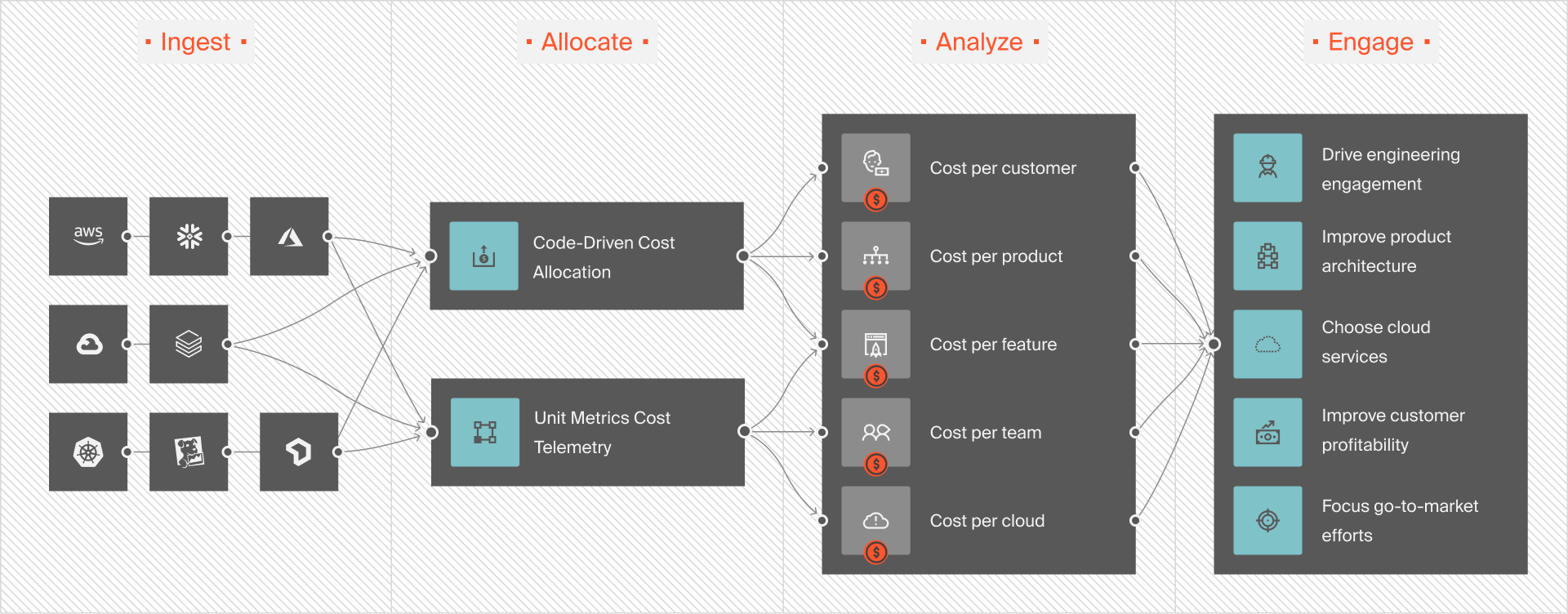

The first step to optimizing costs related to running Kubernetes is to use a platform such as CloudZero to get deep, granular visibility into how Kubernetes influences costs.

A look at how CloudZero enables teams to break down Kubernetes spend by cluster, namespace, label, and pod — allowing engineers to filter, zoom in, and explore the cost of any aspect of their Kubernetes infrastructure.

Understanding the total cost of running an application per day isn’t enough to start making changes that can bring costs down. Organizations should have access to the following information about their Kubernetes deployment:

- Memory, CPU, and disk usage

- What jobs are running at any given moment, and where they are running

- How traffic is moving throughout the system

- The costs of everything other than compute, including things like storage, data transfer, and networking

- A map of how things run on the cluster

- How much the application costs to run right now, as well as trends in how that cost is changing

- Your complete cost picture on an hourly basis

With this information, organizations can make informed decisions about adjusting resource provisioning and/or changing the application architecture to reduce costs without impacting performance or availability.
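To make the idea of granular visibility concrete, here is a minimal sketch of one common way to split a node's hourly cost across namespaces, based on the CPU and memory each pod requests. The function name, the 50/50 weighting between CPU and memory, and the `__idle__` bucket are assumptions for illustration, not how any particular platform allocates costs.

```python
from collections import defaultdict


def allocate_node_cost(node_hourly_cost, node_cpu, node_mem_gib, pods, cpu_weight=0.5):
    """Split one node's hourly cost across namespaces by requested resources.

    pods is a list of (namespace, cpu_request_cores, mem_request_gib) tuples.
    cpu_weight is an assumed split between CPU and memory (0.5 = equal weight).
    Capacity that no pod requested is reported under the "__idle__" key.
    """
    mem_weight = 1.0 - cpu_weight
    costs = defaultdict(float)
    for namespace, cpu, mem in pods:
        # Each pod's share is a weighted blend of its CPU and memory fractions.
        share = cpu_weight * (cpu / node_cpu) + mem_weight * (mem / node_mem_gib)
        costs[namespace] += share * node_hourly_cost
    # Whatever is left over is paid for but not requested by any workload.
    costs["__idle__"] = node_hourly_cost - sum(costs.values())
    return dict(costs)
```

Calling `allocate_node_cost(0.40, 4, 16, [("checkout", 1, 4), ("search", 1, 2)])` returns a per-namespace breakdown plus the idle remainder, which is often the first place to look for waste.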

CloudZero can help you drill down into each of these details and paint a complete picture of your cloud spending.

2. Measure before and after costs

Organizations should start considering costs as one of the operational metrics to track as part of the engineering process.

Just as it’s normal to measure performance and uptime before and after major and minor changes, measuring cost changes should be a part of the operational practice.

Similarly, just as organizations have service level objectives related to performance and availability, they should have internal guidelines related to how much it’s acceptable for an application to cost to run.

They should be able to measure, understand, and then accept or decline those costs after the application’s changes are made.
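As a rough sketch of what a before-and-after cost check could look like, the snippet below compares average daily cost around a change against an internal guideline. The function names and the 5% default threshold are hypothetical; each team would set its own limit per application.

```python
def cost_change(before_daily, after_daily):
    """Return the relative change in average daily cost (0.10 means +10%)."""
    before = sum(before_daily) / len(before_daily)
    after = sum(after_daily) / len(after_daily)
    return (after - before) / before


def within_guideline(before_daily, after_daily, max_increase=0.05):
    """True if the change stayed within the accepted cost increase.

    max_increase is an assumed internal guideline, similar in spirit to a
    service level objective for cost.
    """
    return cost_change(before_daily, after_daily) <= max_increase
```

A check like this can run alongside performance and uptime checks after a release, so that a cost increase is something the team explicitly accepts or declines.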

Each application has a different role and different priorities. The key is to become aware of how costs affect the decisions made regarding that application.

There’s no ‘good’ or ‘bad’ cost, necessarily, as long as the organization is allocating its resources in a way that matches priorities. Some applications might be very costly but also mission-critical and/or very profitable — simply knowing the raw dollar amount an application costs to run doesn’t provide enough information about whether or not it’s ‘worth it.’

3. Buy better

Carefully selecting discount plans can cut a significant percentage of costs overnight.

With time and experience, you will have a fairly solid understanding of which resources are most critical to your operations. Ideally, you should also keep an eye on which resources are stable rather than fluctuating.

Additionally, if you have successfully achieved granular visibility into your Kubernetes costs, you know which of these resources cost the most money and how much you typically spend on them.

That knowledge opens up the opportunity to make savvy purchasing decisions rather than sticking with your cloud provider’s base rate. In particular, you can start looking for reserved instances, savings plans, and spot instances. Which is best for your company depends on the nature of your business and your needs.

Reserved Instances and Savings Plans

These can both be great options if your usage remains relatively stable and you know with some degree of certainty how much you typically spend for that usage.

Reserved instances allow you to book your server space ahead of time in exchange for a discount.

Savings plans provide a discount in exchange for committing to a consistent amount of usage over a fixed term; if you commit to three years of use, for example, you can save significantly compared to purchasing standard, on-demand instances.
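Whether a commitment pays off depends on how much of it you actually use. The sketch below works through that arithmetic under simplified assumptions: a single hourly rate, a flat discount, and a commitment that is billed for every hour whether used or not. The function names and the 730-hour month are illustrative choices.

```python
def commitment_savings(on_demand_hourly, discount, utilization, hours=730):
    """Savings from a commitment versus paying on demand for the same usage.

    discount is the fractional price reduction (0.4 means 40% off).
    utilization is the fraction of committed hours actually used.
    A negative result means the commitment cost more than on demand would have.
    """
    on_demand_cost = on_demand_hourly * hours * utilization
    committed_cost = on_demand_hourly * (1 - discount) * hours
    return on_demand_cost - committed_cost


def break_even_utilization(discount):
    """Utilization below which the commitment loses money."""
    return 1 - discount
```

With a 40% discount, for example, the commitment only saves money if the reserved capacity is in use more than 60% of the time, which is why stable workloads are the natural candidates.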

The difficulty comes in finding and managing the opportunities that best fit your company.

We highly recommend ProsperOps as a solution.

Using automatic, intelligent optimization algorithms — sometimes called Optimization 2.0 to distinguish them from the old days of manual optimization — ProsperOps will get the best results possible for your situation.

Spot Instances

Truthfully, not every business can harness the benefits of spot without making too steep a trade-off. Rather than purchasing a stable amount or duration of server space, you rent spare capacity that your cloud provider is not currently using.

Just as you want to avoid wasting money on idle resources in your business, your cloud provider feels the same way. To avoid a total loss, they offer this idle capacity at a steep discount, with a price that moves with supply and demand.

If you’re savvy, you might save as much as 90% on costs compared to on-demand instances. However, as soon as those resources are needed elsewhere, your work will be shut down, often with only a short warning, to make room for higher-priority customers.

This makes spot instances a fantastic opportunity for businesses who don’t mind unexpected interruptions in some processes, and potentially a terrible choice for businesses that require constant, steady service.
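One way to reason about that trade-off is to price in the work that gets redone after interruptions. The following is a back-of-the-envelope sketch; `rework_fraction` is a hypothetical parameter for the share of extra compute caused by restarts, and the numbers you plug in would come from your own workload history.

```python
def spot_vs_on_demand(on_demand_hourly, spot_discount, job_hours, rework_fraction):
    """Return (on_demand_cost, spot_cost) for a job.

    spot_discount is the fractional saving on the hourly price (0.7 = 70% off).
    rework_fraction is the assumed extra compute caused by interruptions
    (0.2 means 20% of the work has to be repeated).
    """
    on_demand_cost = on_demand_hourly * job_hours
    spot_hourly = on_demand_hourly * (1 - spot_discount)
    spot_cost = spot_hourly * job_hours * (1 + rework_fraction)
    return on_demand_cost, spot_cost
```

For a batch job that tolerates restarts, even a sizable rework fraction usually leaves spot cheaper. The model deliberately ignores the cost of downtime itself, which is what rules spot out for services that need constant availability.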

The one exception to this rule is if you use a service called Xosphere to manage your spot instances and avoid disruptions intelligently.

We recommend Xosphere to any business that wants the best of both worlds: the savings opportunities of spot and great reliability.

Architectural Best Practices

4. Reduce nodes

Architecturally speaking, the most efficient way to lower your Kubernetes costs is to reduce the number of nodes you have running.

At the end of the day, there’s no way around that. You can take many of the other steps listed here and achieve some improvements, but the real cost savings comes from using fewer resources.

The goal is to use only the number of nodes needed without going too far in either direction, hindering performance or having extra idle resources.

To accomplish this, you can use three tools:

- A horizontal autoscaler allows you to control the number of pods to fit your current needs.

- A vertical autoscaler can moderate the requests and limits of those pods to make sure they are not too busy or too idle.

- A cluster autoscaler functions similarly, controlling the number and size of nodes instead of pods.

One open-source cluster autoscaler that you can use is Karpenter, but there are several other options on the market if you have specific needs.
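To illustrate how horizontal autoscaling decides on a pod count, here is a small sketch of the scaling rule described in the Kubernetes Horizontal Pod Autoscaler documentation: the desired replica count is the current count scaled by the ratio of the observed metric to the target, rounded up. The function name and default bounds are assumptions for this example, and the real controller has additional behavior (stabilization windows, readiness handling) that is not modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA scaling rule for a single metric.

    No scaling happens while the metric is within the tolerance band around
    the target; otherwise the result is clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

With 4 pods averaging 90% CPU against a 60% target, the rule asks for 6 pods; at 30% it drops to 2. Fewer pods at quiet times is what eventually lets the cluster autoscaler remove nodes.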

5. Reduce traffic

Reducing or eliminating traffic between availability zones and regions means you’ll avoid unnecessary data transfer charges.

Regional charges are especially important. Sometimes, a Kubernetes deployment spans two or more geographical regions, and its components must communicate across a fairly long distance. This can quickly rack up data transfer charges.

When possible, it can be better to separate nodes or clusters into their own regions, so that information transfers stay within one region instead of multiple.

If you have a pod that needs to use S3, for example, you might want to set up an S3 VPC endpoint in the network where the pod runs so that it doesn’t have to go out over the internet to reach the resources it needs. Similarly, setting up endpoints for other AWS services can help you route your traffic more effectively and avoid sending your pods’ traffic onto the internet.

While regional costs are the priority, availability zone costs also shouldn’t be overlooked. One approach is to dedicate a namespace to each availability zone and pin its pods to nodes in that zone, so you wind up with an assortment of single-zone namespace deployments.

That way, communication between pods should remain within each individual availability zone and therefore would not accrue data transfer fees.
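For a sense of scale, the sketch below estimates a monthly bill for traffic that crosses availability zones. The default rate of 0.01 per GB charged in each direction is a placeholder assumption; check your provider's current pricing before relying on any figure.

```python
def cross_zone_transfer_cost(gb_per_day, cross_zone_fraction,
                             rate_per_gb_each_way=0.01, days=30):
    """Estimate the monthly cost of pod-to-pod traffic that crosses zones.

    cross_zone_fraction is the share of traffic leaving its zone (0.0 to 1.0).
    rate_per_gb_each_way is an assumed price billed on both the sending and
    the receiving side, hence the factor of 2.
    """
    cross_zone_gb = gb_per_day * cross_zone_fraction * days
    return cross_zone_gb * rate_per_gb_each_way * 2
```

Under these assumptions, 1,000 GB per day with half of it crossing zones comes to 300 per month, and keeping chatty services in the same zone brings that toward zero.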

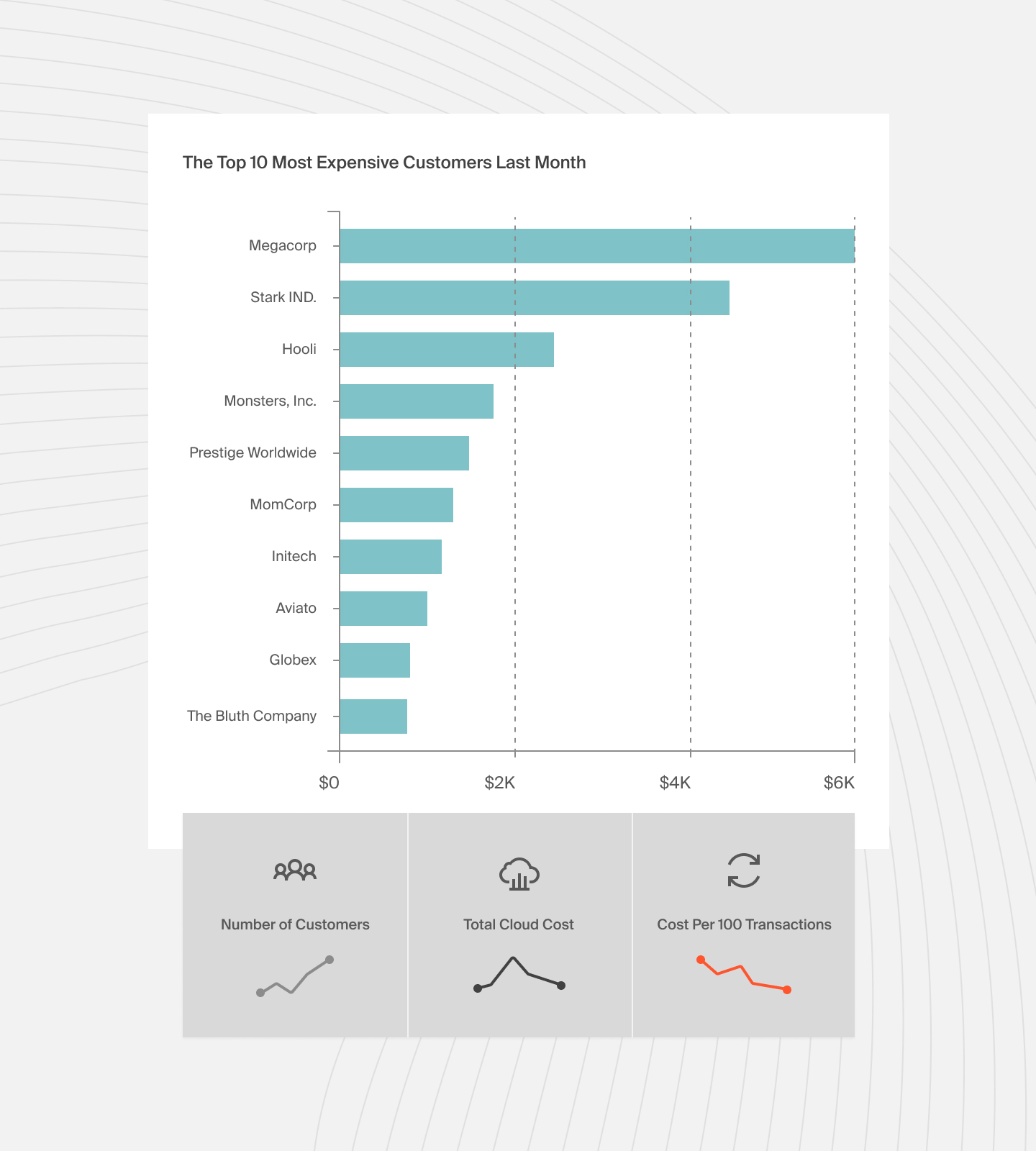

CloudZero can help you break apart your node costs and see what network data fees are costing your business. You’ll be able to visualize how each change to your network affects data costs for each region or availability zone so that you can optimize on a deep level.

A look at how CloudZero helps you identify your most expensive workloads and find opportunities for optimization.

And, if you need guidance, our FinOps specialists are always available to help you identify areas ripe for optimization and suggest solutions to fix the problems.

6. Reduce local storage

Every node has to have some type of storage, and every data point stored on a cloud server costs money. If you can maintain smaller local drives and upload your data to a database instead, you could shed some unnecessary fees.

Reducing stored data shouldn’t be the highest priority on your list of optimizations, but if you’ve made the sweeping changes and now you’re working toward fine-tuning, this can be a great way to achieve more control over your costs.

7. Review logging and monitoring practices

Similarly, every single character you write costs a little bit of money. Many developers unknowingly rack up costs by turning on a debug statement — and leaving it on — when an application is already live and in use.

These printed logs can wind up far more voluminous than the developers intend. In fact, CloudZero has seen cases where small pods use only one tenth of a node — and therefore shouldn’t cost very much — but the log is so massive it costs three times the amount of the pod’s actual operations.
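A quick estimate shows how a forgotten debug statement adds up. This sketch converts a log rate into a monthly ingestion bill; the function name and the per-GB price are placeholders, since pricing differs between logging services.

```python
def monthly_log_cost(lines_per_second, avg_bytes_per_line, price_per_gb_ingested):
    """Estimate the monthly cost of ingesting a pod's log output.

    Uses a 30-day month and decimal gigabytes (1 GB = 1e9 bytes).
    price_per_gb_ingested is an assumed rate for your logging service.
    """
    seconds_per_month = 30 * 24 * 3600
    gigabytes = lines_per_second * avg_bytes_per_line * seconds_per_month / 1e9
    return gigabytes * price_per_gb_ingested
```

A pod writing 1,000 lines per second at 200 bytes each produces roughly 518 GB a month, so comparing the result with the pod's compute cost is a simple way to spot logs that cost more than the workload itself.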

Of course, developers sometimes need to use debugging statements and logs to review their code. Another option is to use third-party monitoring programs such as Dynatrace, New Relic, or Datadog to monitor applications.

The financial tradeoffs of using a third party service versus a standard log aren’t always clear, however; this is where cost visibility from CloudZero can make all the difference when deciding which is more worthwhile.

With CloudZero, users can see the fees from third parties and other resources aggregated into one place alongside the rest of the application costs. This gives engineers deep visibility into what it truly costs to run the application and how their changes affect the total.

8. Lock yourself in

This might seem counterintuitive, but it’s often best to avoid building the application to be portable between clouds. Not everyone sees this as a “general” architectural best practice.

However, many experts see multi-cloud setups as particularly hard to optimize for cost. Not only can multi-cloud lead to high network costs, but it also prevents you from using the best-of-breed services your cloud provider has to offer.

Ideally, organizations will follow cost best practices from the beginning. In real life, understanding these best practices and combining them with deep visibility into the cost ramifications of the different parts of the application allow teams to continually improve the cost-effectiveness of applications, often without any other trade-offs.

Top 5 Kubernetes Cost Optimization Tools

Consider the following.

1. CloudZero

CloudZero links Kubernetes spending to business outcomes using unit cost metrics. Users can track metrics such as costs per customer, product, feature, and team. This shows how Kubernetes costs impact business performance, helping allocate costs effectively and justify investments.

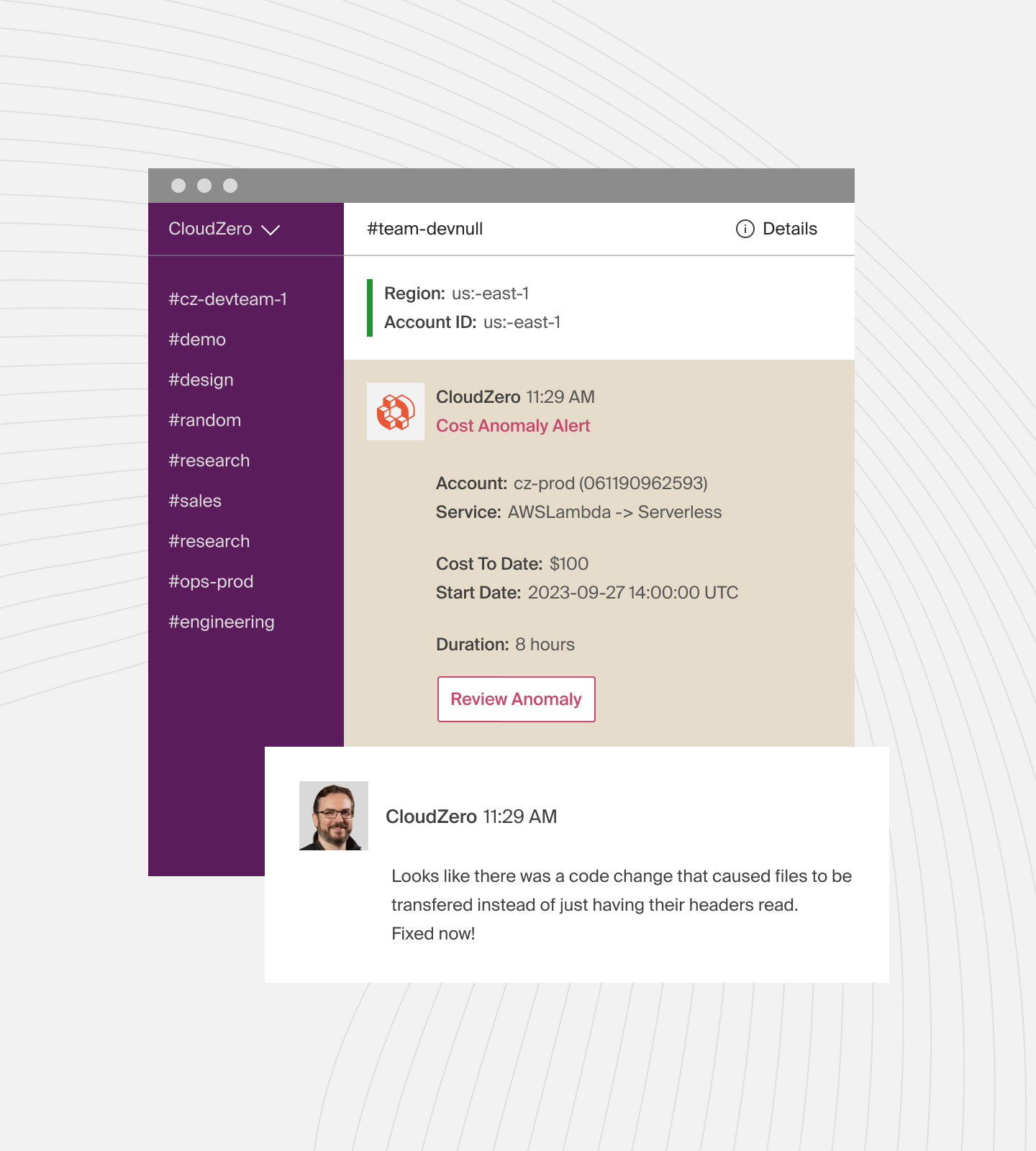
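As a simplified illustration of a unit cost metric, the sketch below spreads a shared cluster bill across customers in proportion to their usage. This is not CloudZero's method; the function name and the idea of using request counts as the usage measure are assumptions chosen to show the arithmetic.

```python
def unit_costs(total_cost, usage_by_customer):
    """Allocate a shared cost to customers in proportion to their usage.

    usage_by_customer maps a customer name to any consistent usage measure,
    such as request counts or CPU-hours.
    """
    total_usage = sum(usage_by_customer.values())
    return {
        customer: total_cost * usage / total_usage
        for customer, usage in usage_by_customer.items()
    }
```

Given `unit_costs(1000, {"acme": 300, "globex": 100})`, three quarters of the bill lands on `acme`. Comparing that figure with what each customer pays is what turns raw spend into a business metric.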

CloudZero uses machine learning algorithms to monitor Kubernetes costs. It detects unusual spending patterns and sends real-time alerts. This helps teams address issues before they escalate, controlling budgets and avoiding cost spikes.

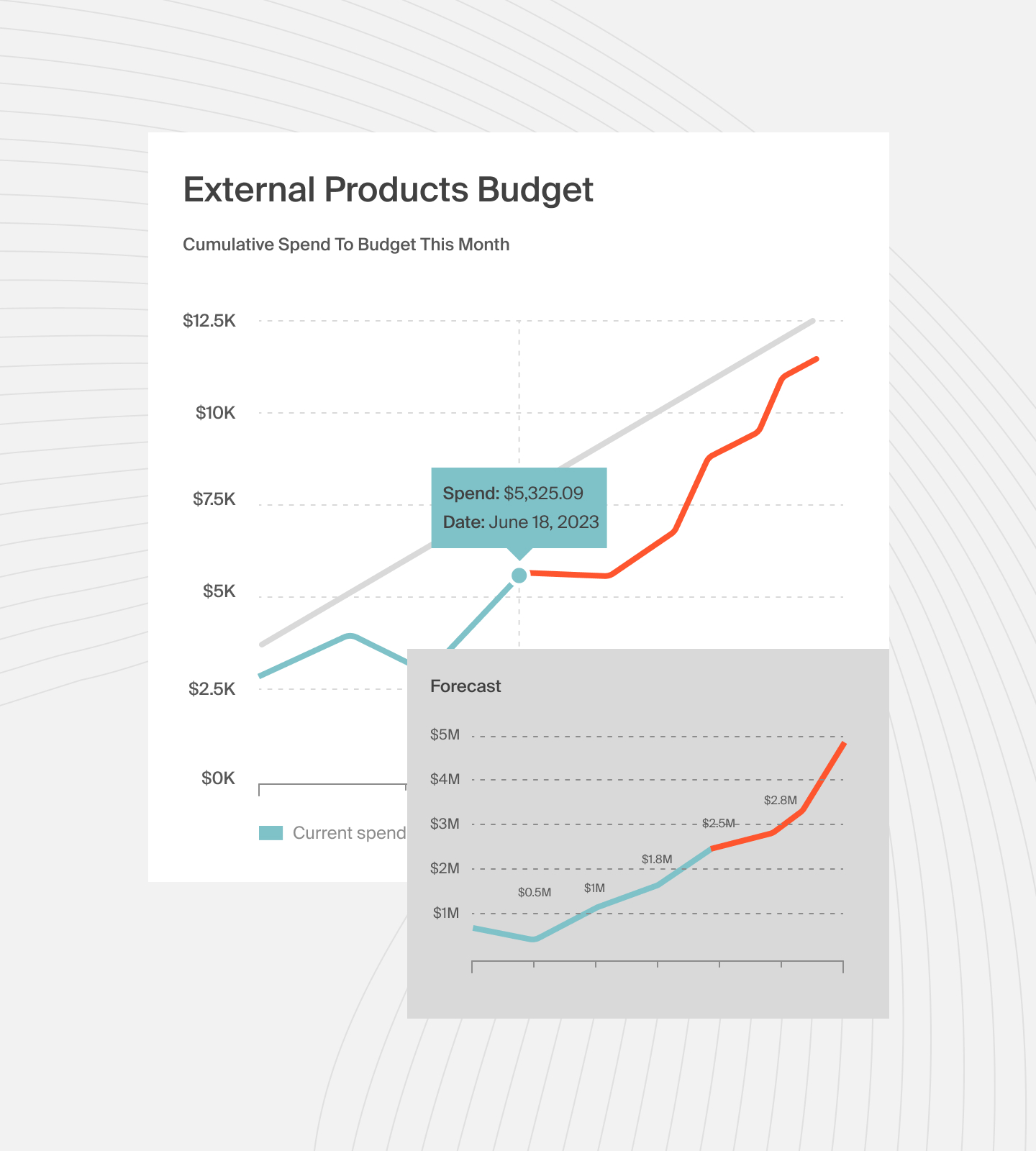
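To show the general idea behind spend anomaly detection, here is a deliberately simple sketch that flags a day whose cost sits far from the recent average, measured in standard deviations. It is a basic statistical stand-in, not the machine learning approach CloudZero uses, and the threshold of 3 is an assumed default.

```python
import statistics


def is_cost_anomaly(history, today, threshold=3.0):
    """Flag today's cost if it deviates strongly from recent daily costs.

    history is a list of at least two recent daily cost values.
    threshold is the number of standard deviations treated as unusual.
    """
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly flat history: any change at all is unusual.
        return today != mean
    return abs(today - mean) / stdev > threshold
```

Real detectors also account for weekly seasonality and growth trends, which this sketch ignores.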

CloudZero also forecasts future Kubernetes costs using historical data. Analyzing past costs identifies trends for better budget planning and resource allocation. This keeps your Kubernetes environment cost-effective and aligned with business goals.

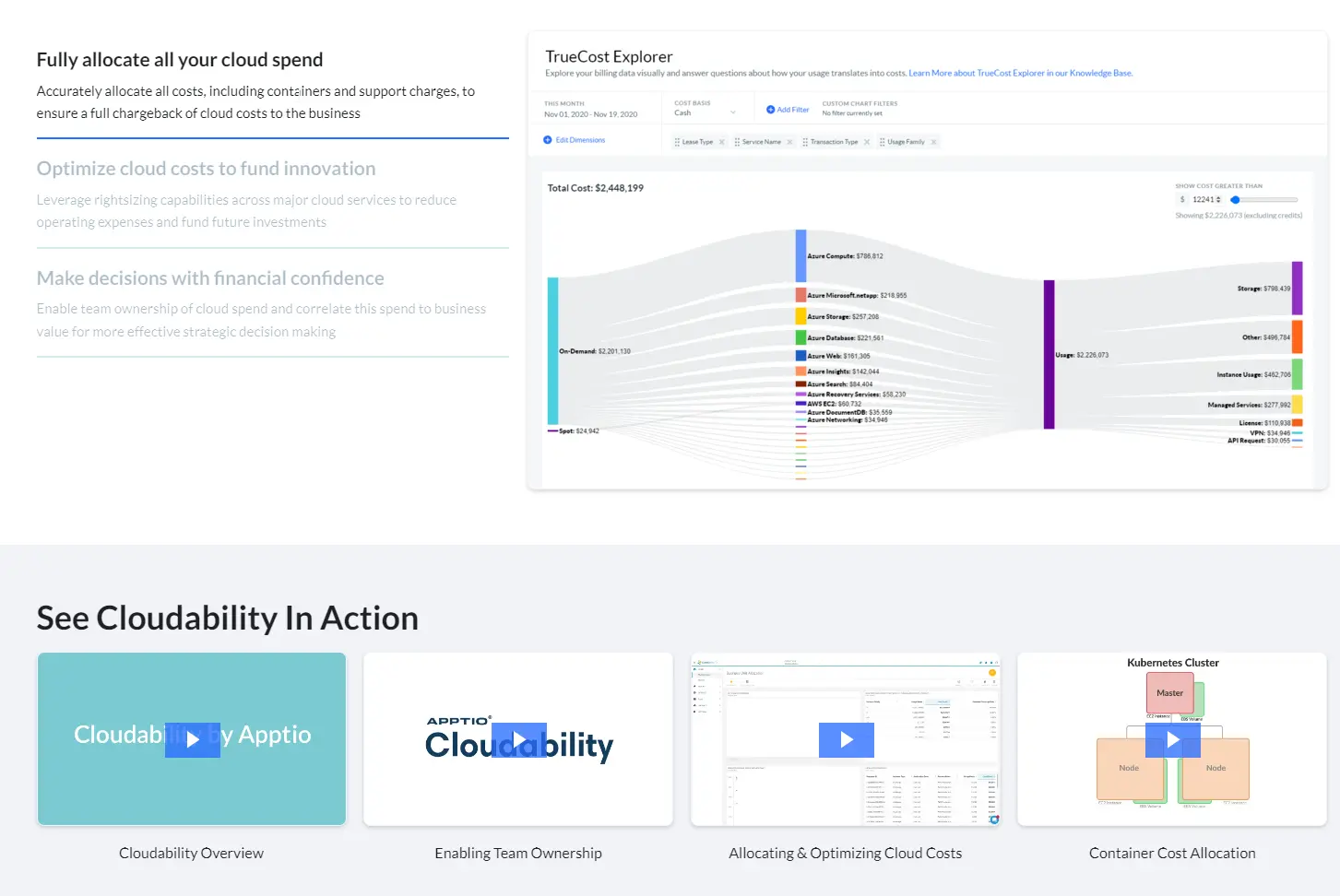

2. Cloudability

Cloudability gives visibility into Kubernetes costs by analyzing node resource usage, including CPU, memory, network, and disk. It evaluates pod-level settings to allocate cluster costs fairly across namespaces and labels, helping users understand the cost of each Kubernetes cluster.

Cloudability integrates container costs into financial management. It includes Cluster Name, Namespace, and Labels in reports, dashboards, and the True Cost Explorer. Combining container-specific costs with regular billing builds a complete picture of cloud spend.

3. Spot by NetApp

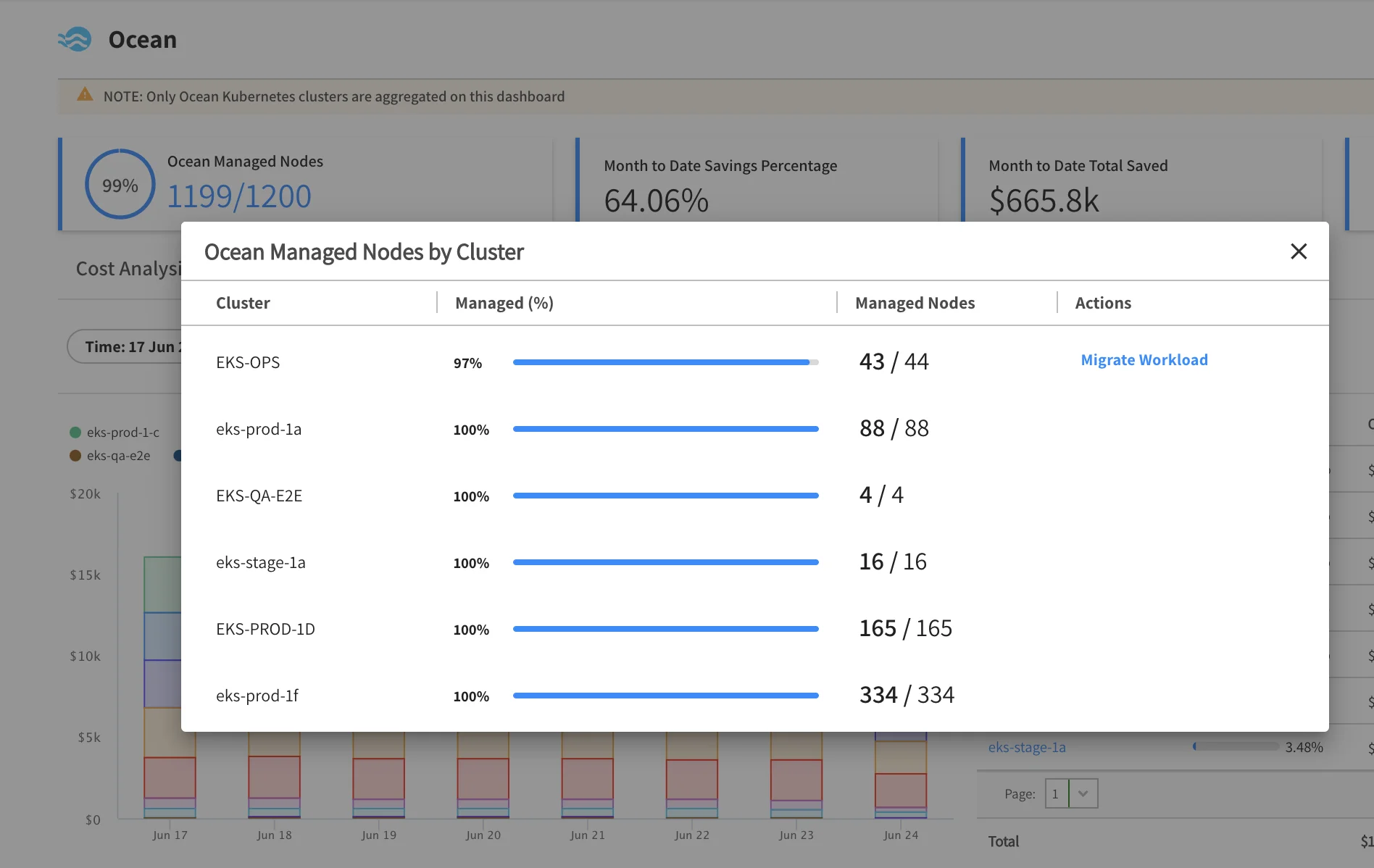

Spot by NetApp optimizes Kubernetes costs through its Ocean solution. It breaks down costs by namespace, labels, annotations, and resources. It supports real-time alerts and governance for efficient cost management.

Ocean analyzes cluster resource usage and offers rightsizing recommendations. This keeps Kubernetes environments cost-effective by focusing on impactful adjustments and tracking these changes over time.

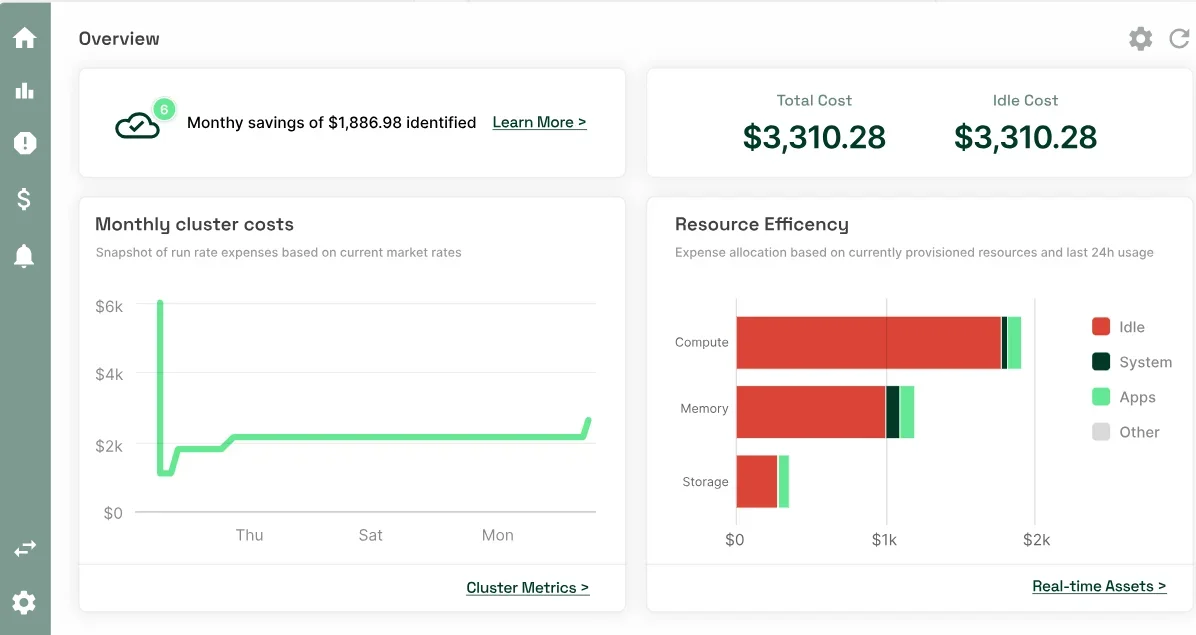

4. Kubecost

Kubecost tracks cost allocation in Kubernetes environments by namespace, deployment, and other dimensions. It integrates in-cluster and cloud infrastructure costs, offering a unified view of spending.

Kubecost is fully self-hosted, giving users complete control over their data, which is essential for privacy and security. It uses open-source tools for smooth integration, improving visibility and simplifying cost management. The platform also offers practical tips for reducing costs by 30-50%.

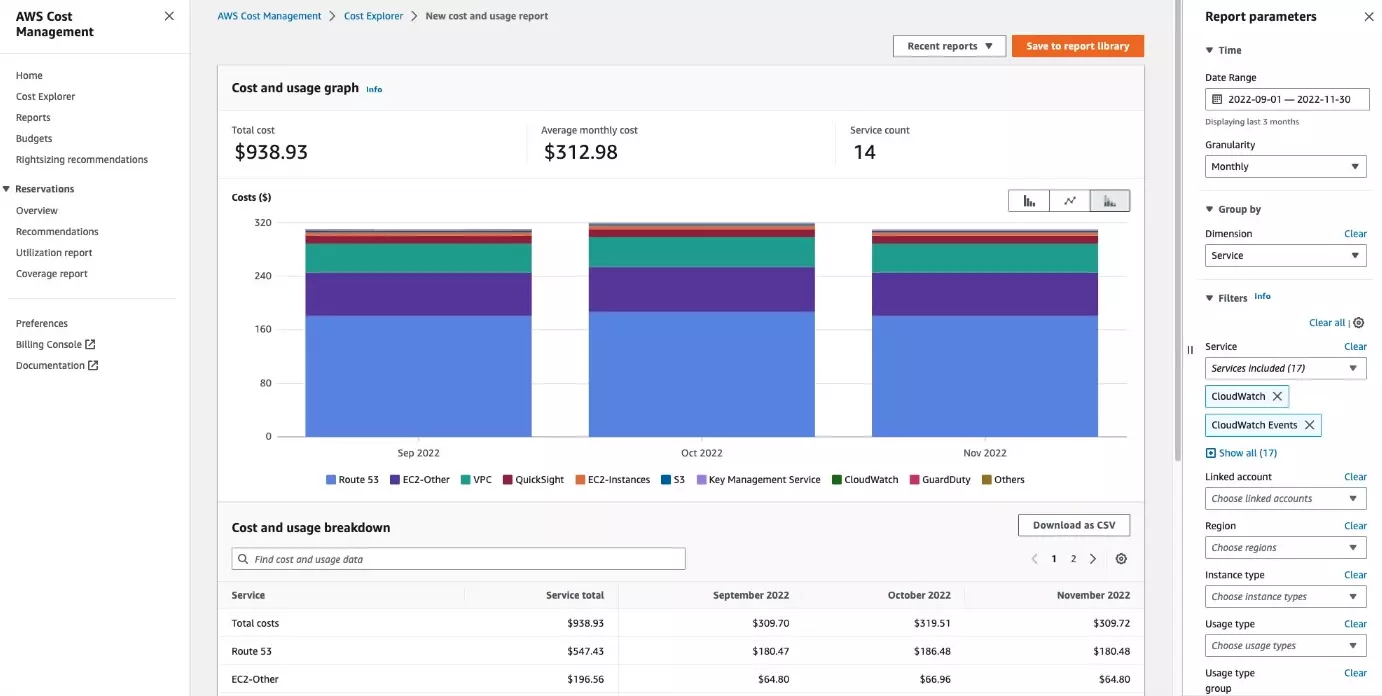

5. AWS Cost Explorer

With AWS Cost Explorer, you can create custom reports and dashboards to track Kubernetes costs. You can set up filters and group costs by tags, services, or usage types. These customized views let you track costs as billing data is refreshed, which typically happens daily rather than in real time.

AWS Cost Explorer forecasts future costs using historical data. It helps predict Kubernetes costs and plan budgets. You can set limits and get alerts when spending is high. This proactive approach prevents overspending and optimizes resource allocation. It also integrates with Kubecost to offer even more granular insights, ensuring your EKS clusters run efficiently and cost-effectively.

Monitor Your Kubernetes Spend In The Context Of Your Business

Achieving in-depth cost intelligence isn’t as hard as you might think.

For more information, check out this resource explaining how the CloudZero platform works with Kubernetes, and read our container cost tracking documentation here.

Schedule a one-on-one demonstration of the CloudZero platform today.
